We usually think of journal growth as a good thing. Growth means you are attracting more manuscripts, more authors, and more attention. But journal growth can have a negative effect on citation performance measures, especially on the Journal Impact Factor (JIF). This blog post explains why.

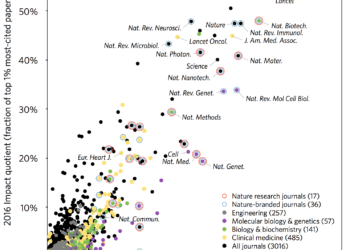

The JIF is one of many citation indicators that measure the performance of journals. Clarivate Analytics will release 2017 JIF scores as part of its Journal Citation Reports sometime in the middle of June 2018. While the JIF has been the target of scorn by vocal members of the scientific and publishing communities, authors are sensitive to the JIF and other measures of prestige when considering where to submit their manuscripts. A journal moving from first to second place in its field may mean fewer high-quality submissions. This is not just an issue for high-JIF subscription journals, however. Joerg Heber, Editor-in-Chief of PLOS ONE, noted recently that he witnessed a drop in submissions in several fields after the release of their last JIF.

Why Journal Growth Depresses Journal Impact Factors

The JIF is based on measuring, in any given year, the performance of papers published in the previous two years. The much anticipated 2017 JIF will measure citations received in 2017 to papers published both in 2015 and 2016.

For most journals, in most fields, papers tend to receive fewer citations in their second year of publication compared to their third.

Consequently, if a journal grows, its JIF calculation becomes unbalanced, with a larger group of underperforming 2-year-old papers and a relatively smaller group of 3-year-old papers. The overall result is a decline in the JIF score. Conversely, a journal that shrinks can expect an artificial boost in its JIF, all other factors remaining the same.
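One way to see why, as a sketch: write the JIF for year $Y$ as a size-weighted average of the two cohorts' citation rates. The symbols $n$ (papers published in a given year) and $c$ (mean citations those papers receive during year $Y$) are notation introduced here for illustration; they are not the post's or Clarivate's.

$$
\mathrm{JIF}_Y = \frac{n_{Y-2}\,c_{Y-2} + n_{Y-1}\,c_{Y-1}}{n_{Y-2} + n_{Y-1}}
$$

If $c_{Y-1} < c_{Y-2}$, as is typical, then raising $n_{Y-1}$ relative to $n_{Y-2}$ shifts weight toward the lower rate and pulls the average down, even though neither rate has changed. Lowering $n_{Y-1}$ does the opposite.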

A Helpful Analogy

Consider a regional track-and-field competition for elementary school children. While this annual event hosts a variety of competitions for students enrolled in grades one through five, we will focus on the 100m dash for second- and third-graders, all of whom compete at a single event.

Now, most parents know that third-graders, on average, are much faster runners than second-graders. This does not mean that every third-grader will run faster than every second-grader. The overall winner of the 100m dash may indeed be a second-grader. Nevertheless, we can expect third-graders, as a cohort, to outcompete second-graders. In most games involving children, age makes a lot of difference.

As a result, if your school has a large cohort of second-graders, you can expect that your school will perform worse than a competing school with a larger cohort of third-graders. This age-performance principle works the same for journal articles as it does for children. If you want to increase team performance, it’s better to stack it with older children.

A Journal Example

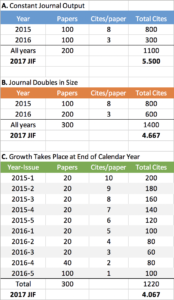

Consider the following journal example. The Journal of Steadfast Output publishes 100 papers per year. In 2017, papers published in 2015 (having completed their third year of publication) received a total of 800 citations, or 8 cites per paper. Papers published in 2016 (having completed their second year of publication) received a total of 300 citations, or 3 cites per paper. Overall, the journal received a JIF score of 5.500.

Now, consider a scenario (B) where the journal increased output, doubling its size to 200 papers in 2016. If we assume that quality and citation rates didn’t change, this journal should receive more citations overall; however, its overall performance (its JIF) will drop by 15% to 4.667. This is not a huge drop in either relative or absolute terms; nevertheless, members of its editorial board are likely to notice a drop in submissions as its JIF dips below 5.
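The arithmetic behind scenarios A and B can be checked with a few lines of Python. This is a minimal sketch, not Clarivate's method: the function name `impact_factor` and the `(papers, cites_per_paper)` tuple layout are made up for illustration, and it relies on the post's simplifying assumption that per-paper citation rates stay fixed. The last case, halving 2016 output, is a hypothetical added here to show the reverse effect.

```python
def impact_factor(cohorts):
    """Return total citations divided by total papers.

    cohorts: list of (papers, cites_per_paper) pairs, one per publication
    year in the two-year window. Illustrative layout, not an official one.
    """
    total_cites = sum(papers * rate for papers, rate in cohorts)
    total_papers = sum(papers for papers, _ in cohorts)
    return total_cites / total_papers


# Scenario A: 100 papers in each year, 8 and 3 cites per paper.
scenario_a = impact_factor([(100, 8), (100, 3)])

# Scenario B: 2016 output doubles to 200 papers, same citation rates.
scenario_b = impact_factor([(100, 8), (200, 3)])

# Hypothetical reverse case (not in the post): 2016 output halves to 50.
shrunk = impact_factor([(100, 8), (50, 3)])

drop = (scenario_a - scenario_b) / scenario_a

print(f"Scenario A: {scenario_a:.3f}")
print(f"Scenario B: {scenario_b:.3f} ({drop:.0%} lower)")
print(f"Halved 2016 output: {shrunk:.3f}")
```

Under these assumptions the growing journal collects more citations in total (1,400 versus 1,100) yet scores lower, because the extra papers all sit in the less-cited cohort.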

In scenario C, we consider how the timing of journal growth affects JIF scores. In 2015, our journal published five issues per year, each composed of 20 papers, for an annual total of 100 papers. In the fourth issue of 2016, the journal doubled output to 40 papers. In the fifth issue, it jumped to 100 papers. While this may seem like unrealistic growth, consider that issue 4 is a special issue with invited papers and issue 5 includes papers presented at the society’s annual meeting held in June. Output fluctuations like this are actually pretty common.

In scenario C, we only changed the timing of output, not total output. In 2016, the journal still published a total of 200 papers, for 300 across the two-year window. The overall citation rate for each annual cohort also remained the same: 8 cites/paper for 2015 and 3 for 2016. However, by publishing one-third of that two-year output at the end of the year we reduced the JIF of this journal by another 13%. Why so much?

A paper published in the December issue of a journal has aged only 13 months when it has completed its second year of publication. In contrast, a paper published in the January issue is 24 months old, almost a year older. Using our school grade analogy, children who are born just before the age cut-off are the youngest in their class, whereas children born just after the cut-off can be nearly a year older. For children and papers alike, age can make a lot of difference in performance in their early years.
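To make the timing effect concrete, the sketch below compares the average age of a 200-paper cohort when it is spread evenly over five issues and when it is back-loaded as in scenario C. It does not attempt to reproduce the 13% figure, which depends on a citation-by-age curve the post does not spell out. The publication months are hypothetical (the post does not give them), as are the function and variable names.

```python
def mean_age_months(issue_sizes, issue_months):
    """Paper-weighted mean age, in months, at the end of the JIF census year.

    Age convention (an assumption): a paper published in calendar month m
    of year Y is (25 - m) months old at the end of year Y + 1, so a
    December paper is 13 months old.
    """
    total_papers = sum(issue_sizes)
    weighted_age = sum(n * (25 - m) for n, m in zip(issue_sizes, issue_months))
    return weighted_age / total_papers


# Hypothetical publication months for the five issues (not given in the post).
ISSUE_MONTHS = [2, 4, 7, 9, 12]

# Same 200 papers, two schedules: evenly spread vs. scenario C's issue sizes.
even = mean_age_months([40, 40, 40, 40, 40], ISSUE_MONTHS)
back_loaded = mean_age_months([20, 20, 20, 40, 100], ISSUE_MONTHS)

print(f"Even issues:        {even:.1f} months")
print(f"Back-loaded issues: {back_loaded:.1f} months")
```

With these assumed months, the same 200 papers average 18.2 months of age when spread evenly and 15.9 months when back-loaded. If citations rise with age during a paper's early years, the younger cohort contributes fewer citations per paper to the JIF.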

Managing Journal Growth

Given that older papers tend to outperform younger papers, publishers and editorial boards that wish to grow their journal should consider growing strategically so as not to artificially depress future journal citation scores. By strategically, I mean growth that takes place at or near the beginning of each calendar year. Unfortunately, this strategy can be taken to extremes. I have seen an example of a journal that cancelled publication of its December issue altogether in order to publish a double issue in January. Not surprisingly, this change was met with contempt by some competing journal editors, as the purpose of this change was readily apparent.

Nevertheless, editors do have some flexibility in scheduling the publication of commissioned papers, such as a special issue on a particular topic or a review series.

The timing of publication needs to be balanced with the aims and production schedule of the journal, as rapid changes in growth can cause chaos for the editorial staff and publisher. More importantly, publication timing needs to respect the needs of authors, many of whom are driven by external pressures — by their colleagues, institutions, and their funders — to publish quickly. The needs and expectations of authors are far more important than attempting to manufacture relatively small changes in JIF scores.

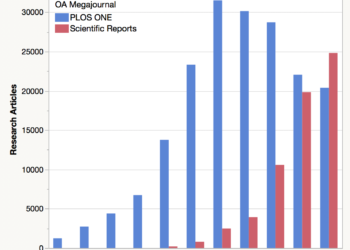

Last, it may be very difficult to manage journal output for an open access journal using an APC model and novelty-free acceptance criteria. For example, the journal Medicine, published by Wolters Kluwer, published 30 papers in 2013 before it converted to an OA model in 2014. It published nearly 2000 papers in 2015 and more than 3000 in 2016. Its JIF dropped from a high of 5.646 in 2014 to a low of 1.804 in 2016.

In a saturated market of multidisciplinary OA megajournals with nearly identical scopes, editorial structures, and prices, a precipitous JIF drop can drive authors to competing titles. Indeed, megajournals like PLOS ONE and Scientific Reports appear to be fighting for a limited market of author manuscripts.

For closely contested races, publication timing can mean the difference between winning and placing second.

Discussion

13 Thoughts on "Journal Growth Lowers Impact Factor"

Addendum: The publication date-citation problem can be solved by moving from an annual metric to a rolling metric. This change is unlikely, as it would require Clarivate to completely rebuild its systems. For the foreseeable future, we are stuck with a legacy system that was designed to generate a single report.

Phil, Thanks for a great analogy to explain this phenomenon.

Worth noting that the reverse applies as well. Journal shrinkage (mathematically) leads in the same way to a short-term boost in JIFs. You mention this in the post: “If you want to increase team performance, it’s better to stack it with older children.”

I mention this explicitly because being “more selective” (often meaning publish fewer [new, younger] articles) will boost the JIF typically. People might see this as a proof that it is a good strategy to improve the JIF, but at least in the short term it is just the IF formula at work. If that improvement is sustained, then the editors might have something to crow about.

Hello Phil, Thank you for this interesting insight into the effect of article growth on journal citation impact. Your current analysis is based on the JIF, for which the most recent available data are the 2016 numbers. I would like to draw your attention to the annual 2017 CiteScore values that were released almost two weeks ago, on May 30, and are (freely) available for all of the 23 thousand titles covered in Scopus: https://www.scopus.com/sources

Obviously, similar effects of article growth can be observed with CiteScore, although slightly different due to the different citation window of three years and the data being more recent by more than a year. For example, the CiteScore trend for the journal Medicine that you have used above is available here: https://www.scopus.com/sourceid/18387.

In addition, with CiteScore we also calculate a monthly tracker, which allows you to monitor the values building up during the year. Although it is not a rolling year as you suggest, CiteScore Tracker can provide more insight into the aging effect of documents receiving more citations the older they get. By the way, with the methodology of CiteScore and the structure of the Scopus database it would be possible to calculate rolling year metrics. However, when testing different varieties of the metric prior to launch, users found the rolling year metric to be confusing and preferred the static, annual ones.

Thanks for pointing out the relationship between the Journal Growth and JIF.

Some journals stack up their issues quite early on to gain additional time for citations. The December 2018 issue is already in the works for some journals. Similarly, several journals with high JIF delay print publication/pagination after acceptance of the manuscripts (the accepted manuscripts remain on-line during this period).

Another major difference between CiteScore and JIF is the denominator. CiteScore considers all published papers (peer reviewed + editorial matter) in the denominator, whereas JIF considers all citations in the numerator but only peer-reviewed articles in the denominator. Thus journals with large editorial content have a significantly lower CiteScore than JIF.

I have been telling people this for years. If a journal wants to instantly improve its impact rating, just reduce the number of papers published by 25%. Then, it will shoot up, you will get more highly cited submissions, and your prestige will go up. It is the classic mistake of using the mean to describe a nonparametric distribution. Median cites with quartile ranges say way more about a journal’s citations than the mean.

In my view you are hitting the main issue: using the arithmetic mean for describing a very skewed distribution. This is absolute nonsense. And a citation window of three years doesn’t really improve the situation.

All other exercises around those ratio-based metrics are futile theory producing air bubbles.

I wonder when this will stop and scientometrics will start to use sensible statistical methods.

So the method of choice to maximize the JIF is to cease publications. If, starting from scenario A, the number of papers in 2016 is 0, then the JIF(2017) will be 8. A funny indicator…

So no mention about quality? A journal can grow with high quality articles and still keep up the IF pace.

There must be a threshold to this kind of pattern when a reverse trend starts. When stepping into causal analysis, it is better to avoid anecdotal or rudimentary evidence.

Perhaps it’s time to rely on scientometric scholars for such analyses.

I did some research last year to test the “journal growth leads to a dip in IF” idea. Logic suggests journal growth leads to an IF decrease. However, across our (T&F) portfolio 55% of journals with an issue increase received an increased IF (for the first year affected by the issue increase) compared to 60% of journals with no issue increase. After 2 years this difference had largely disappeared and, if anything, the journals that had grown previously were actually slightly ahead of the journals that hadn’t grown. The numbers with issue increases were too small to get into robust subject-specific analysis, but the above held true with only one area negatively affected after 2 years (although we were only looking at 1-2 journals in some subject areas). So what’s going on? Does overall growth in citations/WoS mask any negative effects? Does the fact that a journal is attracting papers enough to grow mean that it can actually be more selective on the quality after the initial growth “hit”? Are the marketing team doing a great job? This was just looking at issue increases rather than page increases, and the decision on how and when to grow a journal is based on a variety of factors, so a page increase analysis might reveal a different trend.

Thank you for yet another nail in the impact factor’s coffin. Its real, manipulative, nature to orient researchers-as-authors’ publishing choices is becoming ever clearer. What information funding agencies, universities and research managers are hearing back from such “bearings” boggles the mind, but it has precious little to do with quality.

Increasingly, the IF appears in its naked reality: a totally arbitrary form of metric structuring a competition. It itself answers the requirements of a management style based on competition. This conceit, of course, totally neglects the important elements of collaboration and exchange that also live within scholarly communities. Thereby, it reveals its deeply ideological roots.

The comparison with racing second- and third-graders is perfectly appropriate and it also demonstrates the inanity of the process. More and more, science is being run like a soccer league, and this is detrimental to the whole ambition of building a truly open system of distributed intelligence in our species.

Hello Phil, thank you for this post and demonstration. You write “For most journals, in most fields, papers tend to receive fewer citations in their second year of publication compared to their third.” What is the trend in the fourth year for most journals? And is there an academic paper describing this trend, year by year? Thanks

Dear Phil, A very interesting read. As a counter example, please see the journal growth and JIF increases of the Journal of Cleaner Production. It seems that if journal growth is high enough, the JIF can increase with journal growth. One would assume that this kind of growth is unsustainable in the long run, and puts a huge strain on editors and reviewers…

This example also relates to your remark about “unrealistic growth”. Apparently, much faster growth than in your hypothetical example does occur in reality.