Tweeting by a network of journal social media editors increases citations and Altmetric scores, reports a new paper published in the Annals of Thoracic Surgery. Not surprisingly, it is also getting a lot of tweets.

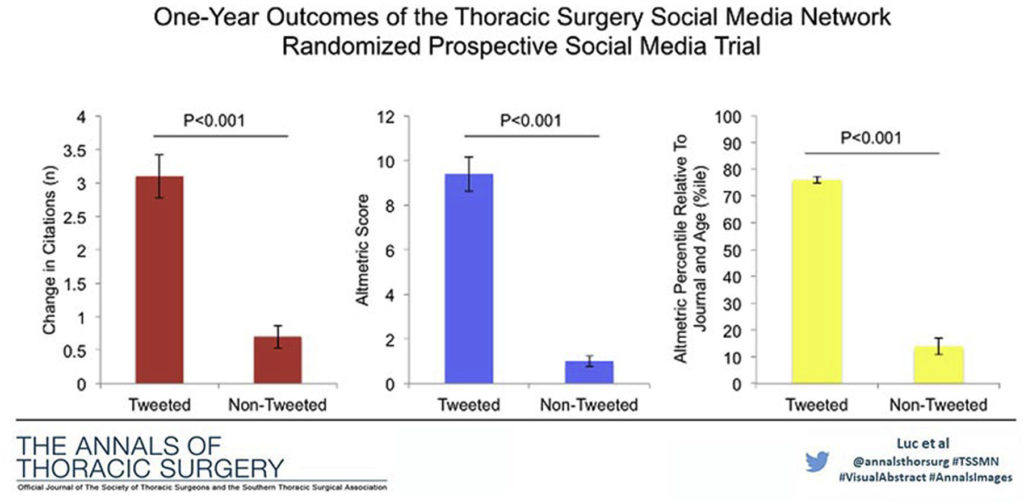

The study is based on a randomized controlled trial, in which 112 original research papers were assigned to either the intervention arm (receiving tweets and retweets from the journals’ social media network delegates) or the control arm (no intervention at all). The authors measured each paper’s citations and Altmetric scores after one year.

Yet, a closer reading of this paper yields substantive questions and concerns about its veracity, including the source of citations, measured outcomes, and an inability to validate or recreate the dataset. Neither the authors, nor the editorial office, have been willing to explain or defend this paper.

While the authors report a sizable difference in citation performance after just one year (an average of 3.1 additional citations to the treatment papers vs. just 0.7 citations to the control papers), the authors don’t state their source of citations. This is curious, as the results may vary widely depending on whether they used data from the Web of Science, Scopus, CrossRef, Dimensions, PubMed, or Google Scholar.

The authors also count Altmetric scores for each paper in their trial, but there are two glaring problems here: First, Altmetric scores are composites of many social media events, including tweets. So, if the intervention was tweeting, the Altmetric scores of tweeted papers will increase ipso facto. Second, both journals use PlumX Metrics, not Altmetric, so it’s not clear how they got article-level Altmetric scores.

There are other statistical oddities in this paper that made me curious about its veracity, such as reporting the Odds Ratio (OR) on continuous variables like citation frequency.

On June 10, I contacted Mara Antonoff, corresponding author of this paper and Assistant Professor at the MD Anderson Cancer Center, with the above questions and promptly received a response that she, or the first author, Jessica Luc, Cardiac Surgery Resident at the University of British Columbia, would get back to me shortly with answers. One week later, with no response, I sent a reminder. Another week later, I contacted the editorial office of the journal and asked for their involvement plus a copy of the authors’ dataset. The office responded with an invitation to submit a formal letter to the editor, which may, or may not, be accepted for publication. Both Luc and Antonoff are listed on the editorial board of the journal and all authors of this paper are listed on the journal’s list of Thoracic Surgery Social Media Network (TSSMN) Delegates. Yet, there is no author disclosure section in this paper.

Given a lack of response from the authors and limited involvement from the journal’s editorial office, I attempted to recreate the authors’ dataset. According to their methods, 56 articles were randomized into the treatment (tweeted) arm of the study. Given that tweets are public, I looked into the tweet history of Antonoff, Luc, and other TSSMN delegates but could identify only 23 articles tweeted during the period described in their paper. I asked the authors about this discrepancy and Antonoff responded by email, “As we stated in the manuscript, the study included 112 articles, among which 56 were tweeted.” She ignored my second request for their dataset. The Annals has no stated data availability policy. I asked the editorial office a second time for their involvement and was told to submit a letter to the editor.

Authors of scientific papers should be able to explain and defend their research and provide any additional evidence to support their claims. In turn, the editor of the journal has authority over published papers and can take action if authors are unwilling to comply. Such actions may include an Editorial Expression of Concern and, if necessary, a retraction. The Annals of Thoracic Surgery is a member of the Committee on Publication Ethics (COPE), which does provide direction on how to respond to concerns sent directly to the editor. Reminding the journal editor of their COPE membership did not change his position on how I should communicate my concerns.

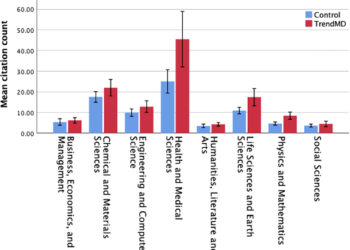

In sum, this paper makes bold claims about the effect of collaborative tweeting on the performance of medical papers. Not surprisingly, this paper has been widely tweeted, shared on social media, and is used as strong evidence, given that it is claimed to be based on a randomized controlled trial design. Prior studies show little (if any) effect of social media effects on paper performance.

Unfortunately, the paper contains serious omissions and neither the authors, nor the journal’s editorial office, are willing to explain or defend this paper. More alarmingly, there is a lack of evidence that the study was performed as described. Unlike a medical trial, in which some data may be kept confidential to preserve the anonymity of patients, I don’t see a similar argument for a study that utilized published papers and public Twitter accounts. Moreover, the hesitancy for the authors to respond, given that several are also members of the journal editorial board, is alarming; the lack of concern from the editorial office is even more concerning. Without public access to the data behind this paper, the results of this study should be considered suspect.

[An update to this investigation was published on 13 July 2020, Reanalysis of Tweeting Study Yields No Citation Benefit.]

Discussion

8 Thoughts on "Intention to Tweet: Medical Study Reports Tweets Improve Citations"

Sample size much too small?

Given that prior research has demonstrated little (if any) effect of social media on citations, the authors’ sample size is really small. For a two-means comparison with alpha=0.05, SD=0.25 and Power=0.9, the study should have included over 1000 papers, 10X what they used.

However, the authors write in their Study Design section (page 6):

“A power calculation was not performed because the relevant effect size for this study remains unknown.”

Ignoring prior research is not a viable defense…
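For readers who want to check the sample-size arithmetic above, here is a minimal sketch of the standard normal-approximation formula for comparing two means. The comment gives alpha, SD and power but not the difference in means it assumed, so the `delta` values below are purely illustrative assumptions, and `papers_per_arm` is a hypothetical helper name, not anything from the paper.

```python
import math
from statistics import NormalDist


def papers_per_arm(delta, sd, alpha=0.05, power=0.9):
    """Papers needed in EACH arm for a two-sided, two-sample comparison
    of means (normal approximation, equal SD and equal arm sizes).

    delta: smallest difference in means worth detecting (an assumption;
           the comment above does not state the value it used).
    sd:    common standard deviation of the outcome.
    """
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)  # critical value for alpha
    z_beta = NormalDist().inv_cdf(power)           # quantile for desired power
    return math.ceil(2 * ((z_alpha + z_beta) * sd / delta) ** 2)


if __name__ == "__main__":
    # Illustrative differences only; SD, alpha and power follow the comment.
    for delta in (0.05, 0.10, 0.25):
        n = papers_per_arm(delta, sd=0.25)
        print(f"delta={delta:.2f}: {n} per arm, {2 * n} papers in total")
```

Under these assumptions, a difference of 0.05 (one fifth of the SD) needs 526 papers per arm, or 1052 in total, which is in line with the "over 1000 papers" figure. Larger assumed differences shrink the requirement quickly, which is why the choice of effect size matters so much.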

The Annals of Thoracic Surgery’s Impact Factor is only 3.9, so this big an effect of tweeting might have boosted their impact factor as well. However they do have a lot of articles (~500 per year) so the effect of just tweeting 50 articles would be diluted. The lack of visible tweets is very concerning…

I was really excited to read this SK article and find that increased efforts to tweet individual articles could help citations. I need this type of data to make a case to spend the time and effort on such initiatives, which I do think would help our journals. However, that is not actually the content of this article. Perhaps this article should be renamed? Additionally, it would be helpful to have some additional reference to other data that does/does not support this social media claim.

Hi Christine, if you follow the link in the article where it says, “Prior studies show little (if any) effect of social media effects on paper performance,” it will lead you to a 2019 post that summarizes the research on the impact of social media:

https://scholarlykitchen.sspnet.org/2019/05/23/can-twitter-facebook-and-other-social-media-drive-downloads-citations/

Thank you, David. I appreciate your response.

Phil –

Excellent analysis and critique of this paper. I’m hoping this post will lead the authors to answer your questions…

Paul

“so it’s not clear how they got article-level Altmetric scores.”

It is possible to access the Altmetric scores of these articles through the free version of Dimensions, for example: https://app.dimensions.ai/discover/publication?or_facet_source_title=jour.1057026&or_facet_year=2018&or_facet_year=2017&order=altmetric

However, the article does not mention whether this method was used.

You can get Altmetric article-level data for papers from any journal, regardless of whether the journal itself actively makes known its Altmetric data. The simplest way to do this is using the “bookmarklet” feature. Altmetric doesn’t require any cooperation from the journal to gather its data.

This criticism is therefore unfair and in any case misses the real issue with the Altmetric data: whether it is meaningful. In my view, Altmetric data is not a valuable statistic at an article level. The calculation underlying the Altmetric “score” is rather opaque and seemingly arbitrary. The capture of mentions on the various tracked platforms does not seem to be complete, and there is no quality filter to assess the context of a mention.