Editor’s Note: Today’s post is by Daniel S. Katz and Arfon M. Smith. Dan leads the FORCE11 Software Citation Implementation Working Group’s journals task force, and is the Chief Scientist at NCSA and Research Associate Professor in Computer Science, Electrical and Computer Engineering, and the School of Information Sciences at the University of Illinois. Arfon is the Editor-in-Chief of the Journal of Open Source Software, and Staff Product Manager for Data at GitHub.

Almost five years ago, a group of researchers decided to start a new journal, the Journal of Open Source Software (JOSS), to provide software developers and maintainers a means to have their work recognized within the scholarly system. And since we were doing something new and coming out of the open source community, we decided we would also make changes to the typical submission, review, and publishing processes to make them more like the practices that have proven successful in open source software, while still fitting in the scholarly publishing ecosystem.

We have been successful in many respects. We have published almost 1,250 diamond open access (free to publish, free to read) papers to date, many of which have been highly cited, at a total cost of less than US$3 per paper. These costs include funds required to host our web infrastructure (on Heroku) and fees for services such as Crossref (DOIs) and Portico (archiving of papers and reviews). Like many journals, JOSS relies significantly on volunteer effort, in our case at all levels (editors, reviewers, authors). Additionally, our technical interfaces with other scholarly systems (Crossref, Portico, ORCID, Google Scholar) are successful, simple, and flexible. This is because we designed our journal system from scratch based on good software practices, which makes it relatively easy for us to meet the documented requirements of other infrastructure providers for identifying, indexing, and archiving our publications. However, our social and cultural interfaces have been more challenging, as we intersect with the unwritten norms and assumptions of other parts of the scholarly publishing community, most notably those who act as ‘scholarly gatekeepers’ and who are reluctant to recognize the value of the work we publish. In this blog post, we explain how our version of scholarly publishing works and how it has both advantages and disadvantages, particularly with respect to elements of the traditional scholarly publishing ecosystem.

The JOSS model

JOSS started in May 2016, and we published our first paper shortly afterwards. From one point of view, we publish short papers that are, in some sense, placeholders in the scholarly publishing system. More accurately, each submission consists of both a short paper and the software it describes; we review and publish both, though most of the review effort goes into the software and associated materials. The papers describe the software. They may not include results of using it, except as examples of how it can be used; scholarly results created using the software should be published in other venues. This is not particularly different from data journals or journals that publish research methodologies.

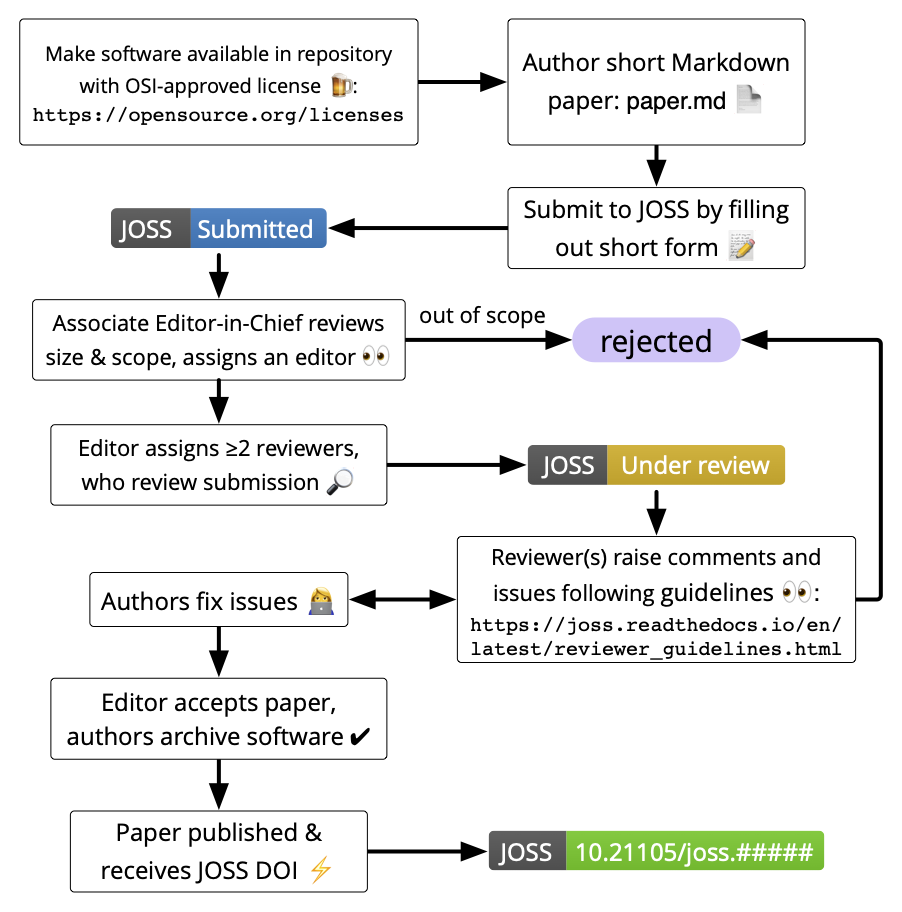

JOSS is designed to be friendly to software developers, reviewers, and editors. It borrows heavily from open source practices: it runs on GitHub, with interactions taking place in GitHub issues; it is fully open, fast, and iterative; and it includes a bot (@whedon) that can be commanded to perform a set of publishing-related tasks. This means authors and reviewers don’t have to learn a publisher-specific system or use authentication credentials other than the ones they regularly use. The full JOSS publication workflow is shown in Figure 1.
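To illustrate how a bot driven by ordinary issue comments, like @whedon, can work, here is a minimal Python sketch. It is not whedon’s actual code: the command names, replies, and the editor-only rule are hypothetical, and a real bot would also receive comments through GitHub webhooks and post replies through the GitHub API, both of which are omitted here.

```python
import re

# Hypothetical command table for illustration only; whedon's real command
# set and wording may differ.
COMMANDS = {
    "generate pdf": "compile the paper's Markdown source into a PDF preview",
    "check references": "check the DOIs in the paper's bibliography",
    "accept": "deposit the paper's metadata and publish it",
}
EDITOR_ONLY = {"accept"}  # hypothetical permission rule

# A command is a line starting with "@whedon" followed by the command text.
MENTION = re.compile(r"^@whedon[ \t]+(.+?)[ \t]*$", re.IGNORECASE | re.MULTILINE)


def parse_commands(comment: str) -> list[str]:
    """Return the known commands addressed to the bot in an issue comment."""
    found = []
    for match in MENTION.finditer(comment):
        # Normalise case and internal whitespace before looking up the command.
        command = " ".join(match.group(1).lower().split())
        if command in COMMANDS:
            found.append(command)
    return found


def dispatch(comment: str, author: str, editors: set[str]) -> list[str]:
    """Build the bot's replies, refusing editor-only commands from non-editors."""
    replies = []
    for command in parse_commands(comment):
        if command in EDITOR_ONLY and author not in editors:
            replies.append(f"Sorry @{author}, only editors can run '{command}'.")
        else:
            replies.append(f"OK @{author}, I will {COMMANDS[command]}.")
    return replies


print(dispatch("Thanks!\n@whedon generate pdf", "alice", {"editor1"}))
```

Because commands are just lines in a comment, authors, reviewers, and editors can trigger publishing tasks without leaving GitHub; the permission check stands in for the kind of role-based restriction such a bot plausibly needs, since not every participant should be able to publish a paper.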

JOSS began with a two-tiered model of editors: an Editor-in-Chief (EiC) and 11 topic Editors. To scale up as the workload increased, we now have three tiers: an EiC, five Associate Editors-in-Chief (AEiCs), and 49 topic Editors. We have also sought new editors through open calls, in which applications are evaluated by existing editors and editors have an expected term of service that can be renewed. The EiC and AEiCs rotate through on-duty weeks, during which the person on duty assigns incoming papers to editors and publishes papers after they complete review.

Unlike most journals, which value low acceptance rates, JOSS aims to improve the submitted work to the point where it can be accepted. Reviews are checklist-driven, so the criteria for acceptance are clear to both authors and reviewers.

Given this, there are two points at which submissions can be rejected. The first is at submission: the AEiC checks that the submission is in scope and meets JOSS’s substantial scholarly effort criterion, and if there is any doubt, asks the full set of editors to vote on it via a weekly email. Any questionable submission that at least one editor thinks should be reviewed will be reviewed, and that editor is often the one to whom it is assigned. One reason for this step is a desire to conform to the standards of the traditional scholarly publishing ecosystem and to avoid submissions that aren’t seen as at least a minimum publishable unit, much as many journals would not review a one-page set of thoughts as a research paper. A second reason is to limit the number of submissions processed to what our volunteer editors and reviewers can handle. Since July 2020, shortly after we defined this policy more formally, about 30% of submissions have not been accepted for review.

The second point at which submissions can be rejected is during review, when reviewers find problems. Typically, the authors improve their work, and the reviewers either accept the improvements as sufficient or ask for more changes. These conversations happen in the open and vary widely. They can be complex, with multiple reviewers, multiple authors, and an editor going back and forth many times (sometimes in a single day), or simple, with one reviewer asking for a specific change and the author making it. Sometimes, however, the authors take a long time to fix the problems, or choose not to fix them, either explicitly or implicitly by ceasing to respond to the editor. In these situations, the editor will reject the submission. Since July 2020, about 5% of reviewed submissions have not successfully completed review.
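As a rough illustration of what these two rates imply together, assume they apply to the same pool of submissions and ignore withdrawn or still-in-progress ones (the two figures are reported separately, so this is only an approximation). The share of all submissions that end up published is then about:

```latex
P(\text{published}) \approx (1 - 0.30) \times (1 - 0.05) = 0.70 \times 0.95 = 0.665
```

That is, roughly two in three submissions are eventually published, while about 95% of those that enter review complete it.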

After either type of rejection, authors may resubmit later, having expanded the submission in the first case or fixed the problems in the second; JOSS treats these as new submissions.

There is an exception to this flow: when the software in JOSS submissions has been reviewed by community organizations with which we have an established relationship (today rOpenSci and pyOpenSci), JOSS trusts these reviews and does not re-review the software. Instead, the AEiC simply reviews the short paper and proceeds to publish the work.

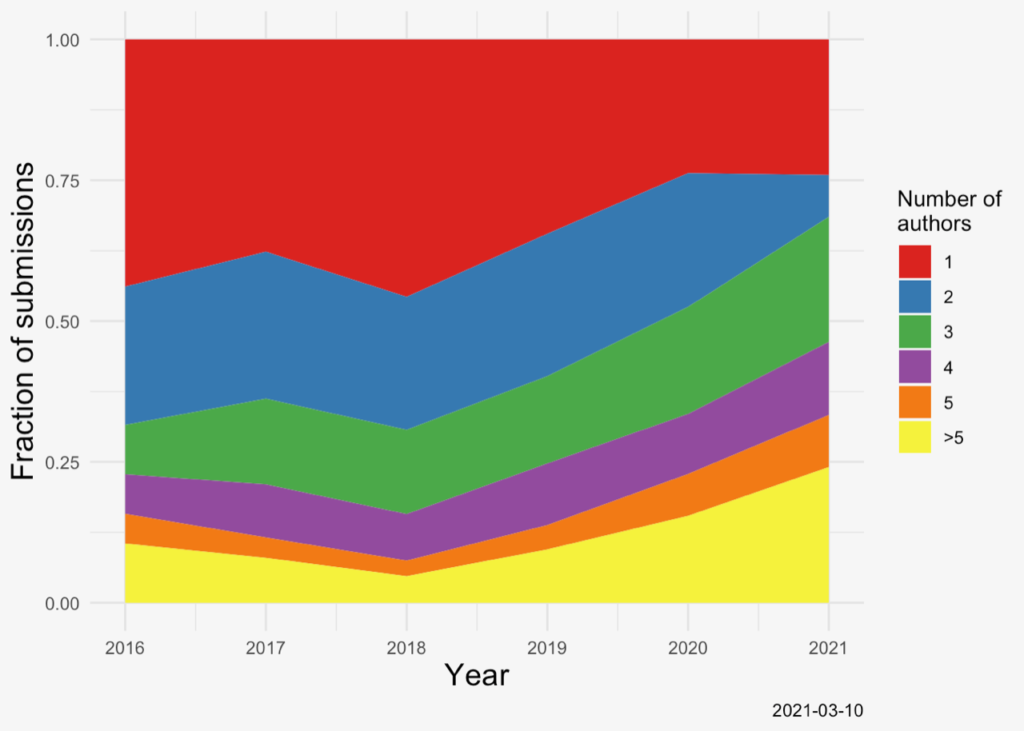

For those interested in more information, most JOSS data is public, and we regularly calculate and share an analysis of it. For example, Figure 2 shows that the number of authors per submission has been growing. We also publish a blog on topics related to the journal.

Because our model is quite different from the traditional journal, there are both positive and negative consequences.

Positive consequences of starting an open, software-focused journal

We own our publishing stack. Our own software is a core asset, something we control and regularly enhance. While we have implemented this software stack on GitHub, a free and widely used platform that is familiar to our authors, reviewers, and editors, we could switch to an open source tool such as a hosted GitLab instance if needed in the future, just as we could move our web content to another hosting platform. Additionally, because of how our software works, there is no manual backend processing. Authors submit Markdown documents, not typeset PDFs, and JOSS checks that these can be processed as part of the submission process. When the paper is ready to be accepted, we use Pandoc to automatically generate metadata about the paper and its references for Crossref from the author-submitted paper content, and the on-duty AEiC checks this metadata as part of the publishing process. Soon, we will also generate JATS automatically. Finally, we archive PDFs, Crossref metadata, and our reviews with Portico.
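Single-source publishing means the same author-submitted Markdown drives both the typeset paper and the deposited metadata. JOSS does this with Pandoc; the Python sketch below skips that step and assumes the paper’s front matter has already been parsed into a dictionary whose field names (`title`, `authors`, `given`, `surname`, `orcid`, `doi`, `url`) are hypothetical. It builds a simplified XML fragment whose element names loosely follow Crossref’s deposit schema; it is not a complete or schema-valid Crossref deposit.

```python
import xml.etree.ElementTree as ET


def article_metadata(meta: dict) -> str:
    """Build a simplified, Crossref-style XML fragment from parsed paper metadata.

    Element names loosely follow Crossref's deposit schema, but the result is
    NOT a complete or valid deposit (no journal, dates, or references).
    """
    article = ET.Element("journal_article")
    titles = ET.SubElement(article, "titles")
    ET.SubElement(titles, "title").text = meta["title"]

    contributors = ET.SubElement(article, "contributors")
    for i, author in enumerate(meta["authors"]):
        # The first listed author is marked "first", the rest "additional".
        person = ET.SubElement(
            contributors,
            "person_name",
            contributor_role="author",
            sequence="first" if i == 0 else "additional",
        )
        ET.SubElement(person, "given_name").text = author["given"]
        ET.SubElement(person, "surname").text = author["surname"]
        if author.get("orcid"):
            ET.SubElement(person, "ORCID").text = "https://orcid.org/" + author["orcid"]

    doi_data = ET.SubElement(article, "doi_data")
    ET.SubElement(doi_data, "doi").text = meta["doi"]
    ET.SubElement(doi_data, "resource").text = meta["url"]
    return ET.tostring(article, encoding="unicode")


# Hypothetical example metadata (placeholder DOI, ORCID, and URL).
example = {
    "title": "A hypothetical package",
    "authors": [
        {"given": "Ada", "surname": "Lovelace", "orcid": "0000-0000-0000-0000"},
        {"given": "Alan", "surname": "Turing"},
    ],
    "doi": "10.5555/hypothetical.00001",
    "url": "https://example.org/papers/00001",
}
print(article_metadata(example))
```

Because the metadata is derived from the same source the authors wrote, there is nothing to re-key by hand; the editor’s job shrinks to checking the generated output.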

Others can fork our software. Because we developed the software stack behind JOSS as open source, others can use it too. One example is the Journal of Open Source Education (JOSE), a sibling journal run by the Open Journals organization, with which we collaborate closely. Another example is the Julia Conference, which is run completely separately from Open Journals but has chosen to use our software stack for its own operations. As others fork and reuse the JOSS software, improvements to core capabilities, bug fixes, and documentation have been made that help everyone.

GitHub to reduce volunteer friction. One of the common challenges for most scholarly publishers is finding volunteers, whether editors or reviewers. Anecdotally, some journals report having to ask tens of candidates for each reviewer who agrees to participate. For JOSS, the number of requests per reviewer is around two, and asking three candidates is often sufficient to find two or three reviewers. In part, this is because we communicate with reviewers on GitHub, where they already work. This signals to them that we are different from other publishers, and that we will be using the same open source practices in our review that they comfortably use in their regular work.

Many reviews are highly collaborative and embody the ideals of modern open source projects. Partly because of the nature of the work under review (open source software) and the environment in which the review takes place, reviewers often contribute bug fixes to the submission they are reviewing, and requests for improvements made during review look just like requests made by users, so they can be handled together.

Collaboration with other open communities. Because there are multiple open communities working on software, and we have a rough, de facto agreement on appropriate review criteria for open source software, we can accept the results of open reviews from these other communities, such as rOpenSci and pyOpenSci, as mentioned above. (When JOSS started, we based our review process on an existing software review process from the rOpenSci project.) In future collaborations of this kind where review criteria differ, we can focus the JOSS review on the criteria that differ and accept the existing review for the shared ones.

Collaboration with other scholarly publishers. Another interesting aspect of JOSS arises when researchers develop software and use it to generate scholarly results. We have a collaboration with the American Astronomical Society (AAS) Journals to offer software reviews for papers submitted to their journals. Authors submit their paper to one of the AAS journals as usual, along with a JOSS paper to accompany their AAS submission. In the JOSS review, they declare that the JOSS paper is associated with a submission to an AAS publication. When both submissions complete peer review, each published paper cites and links to the other. As part of this process, AAS Publishing makes a small contribution to the running costs of JOSS, which helps JOSS sustain itself. As discussed in the Principles of Open Scholarly Infrastructure, JOSS believes that revenue should be based on services, not data.

Negative consequences of starting an open, software-focused journal

Collaborative nature of reviews challenges indexers’ norms. While some might think this peer-review model of working with authors to make their submissions publishable is good, others disagree. For example, when we applied for JOSS to be indexed by Scopus, we were turned down and told “… this journal does seem to accept most submissions. Reviewing is somewhat limited. So, accepting this into Scopus would not be consistent with other journals’ acceptance. Rigorous reviewing of implementation work guaranteeing highest standards would benefit the entire area and allow us to accept such a title into Scopus. Unfortunately, the current title does not do this.” We disagree that a low acceptance rate is the only way to show that a journal has high standards. Similarly, a recent study of diamond OA journals equates double-blind peer review with the highest standard of quality control, stating that “most OA diamond journals (67%) adhere to the highest level of scientific quality control (double-blind peer review).” We believe that we meet high standards in a highly collaborative manner (open, collaborative peer review), which is the norm in open source software, and that this leads to better scholarship than adversarial peer review.

Open reviews can sometimes be challenging to manage. Because we work openly, reviews are part of the public record on GitHub (and are archived with Portico). Communications between authors, editors, and reviewers are generally highly professional and on-topic. One challenge editors sometimes face is managing the expectations of authors, who are usually very motivated to see their paper complete review and sometimes have unreasonable expectations about how quickly a reviewer should work or an editor should respond to incoming reviews.

Reliant on volunteers. JOSS depends on volunteers for all functions. This includes the EiC, the AEiCs, the editors, the reviewers, and the authors, none of whom pay or are paid for their work. A previous blog post examines this model, explaining that the volunteers, mostly the editors, do some work that other journals may assign to paid staff or outsource, including copyediting and marketing. As we publish more papers, we continually need to increase the number of editors and reviewers, as well as replace editors who change jobs or focus, or who simply burn out from the work JOSS requires. So far, however, we’ve been able to do this successfully.

Conclusion

Where things are in our control, we’ve demonstrated that it’s possible to be at the forefront of modern publishing from both a technology and a governance standpoint, all with a volunteer editorial board, at very low cost, and (anecdotally at least) with a high degree of author satisfaction. Unfortunately, not all aspects of publishing are in the control of a journal and its editorial team, and some aspects of particular importance to authors, such as indexing by third-party commercial entities, remain outside our control.

Overall, during the past five years we’ve developed a set of tools and editorial practices that have enabled us to publish a significant number of papers with a fully volunteer editorial team. Owning our tooling has enabled us to move faster than would otherwise have been possible, while also committing to flagship community standards such as the Principles of Open Scholarly Infrastructure. Ideas we adopted or developed over the past five years, such as single-source publishing with Pandoc, extensive platform automation, and portable peer review, are now becoming more common among other publishers.

Discussion

1 Thought on "Guest Post – Starting a Novel Software Journal within the Existing Scholarly Publishing Ecosystem: Technical and Social Lessons"

Personally, I think JOSS would be a very professional and innovative journal in the IT area. Its model would be very valuable. Databases such as Scopus and Clarivate (WoS) should also rewrite their old rules under general principles, because high-quality information is what the industry needs…