When some of the leading technology minds in scholarly publishing gather each fall to polish their crystal orbs, the outcome has always been an invigorating and enlightening experience. This year has proved no different. At the STM Conference in Washington, DC today, the new STM Trends 2028 was released. Rather than focusing strictly on technology and its implications, the group took a slightly broader view and included a more diverse pool of participants. Not only members of the STM Standards and Technology Committee, but also representatives from the Open Research, Research Integrity, and Social Responsibility committees, as well as a number of journal editors, engaged in the process. The output is a vision of a world where humans and machines are integrated and engaged to support researchers as they navigate an increasingly complex world that is both analog and digital.

Before the meeting in London in December, participants began with an exercise focused on envisioning headlines from the year 2028. At the meeting, the group dug deeper into the trends and issues that might face our community toward the end of the 2020s. Thinking about where we are, how things will change, and what might interest those in the future gives the output a valuable perspective. The report is now available on the STM website.

It will come as no surprise to anyone that artificial intelligence was a key theme: how might the suite of tools that have entered the market in the past year affect our community over the long term? But many larger themes also shine through the AI hype. The ever-increasing digitization of scientific processes, from labs to outputs to assessment, will have significant implications for the research ecosystem and for scholarly publishing in particular. The Trends report underlines that we're not seeing an entirely new revolution in scientific communication, but simply the next stage in a decades-long transformation from analog, simplified information distribution to something much more robust, complex, and potentially valuable. These same innovations also pose risks, such as new tools that make faking or manipulating results easier, which challenge the integrity of the scholarly record and the trust and reputation that rest on it.

The Trends report projects an environment where technology is blurring the lines between purely human actors and the machines that engage with and intermediate the scholarly content experience. It is an environment where trust can be enhanced through old practices, such as peer review and retractions, but also through the connectivity of the research knowledge graph. Ideally, we will also be able to toggle the controls of the network to tailor our experience to our needs, be they equity, trustworthiness, volume, or selectivity.

However, the vision isn't purely a utopian one, in which technology is adopted seamlessly and without challenges or pitfalls. For example, technology tools can also be used by malicious actors, such as paper mills and pirate content sites, to skirt rules or undermine trust in the process. We might have more reason to doubt or question the information we receive if we don't understand its provenance or vetting procedures. The network will develop and incorporate more of this provenance information, making some content more trustworthy. But how might researchers gain acceptance if they lack a presence on the network or a reputation in this ecosystem, whether because they are early-career or because they come from a region outside the traditional power centers of scholarship? That would certainly be a dystopian world, as the Trends report cautions us.

While technology is at the forefront of many of these issues, it is certainly not the only factor at play in this vision of the future. Additional long-simmering risks, such as geopolitical fissures, the segmentation of global science into regional factions, or even international conflict, seem to be growing into prominent concerns. Fundamentally, trust, reputation, brand, and equity are all at stake, each interacting with technology but also with the social infrastructure within which we operate.

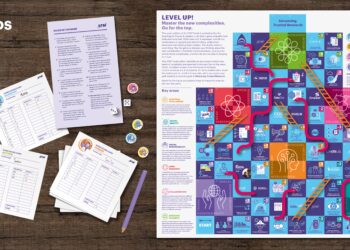

Three main themes run throughout the graphic. First are the developing and changing interactions between humans and machines. Related to these are various concerns about sources of truth and trust. Playing off both, as well as other factors, are the dueling forces of cohesion and fragmentation. Each section of the image draws the viewer in, and each contains elements of these three themes.

Obviously, the melding of human and machine throughout the image symbolizes how everyone engaged in the research process will infuse digital technology into their work. Some of this is already happening, and these trends will only accelerate over the next several years. The velocity of content is driving many to use technology to intermediate the consumption of vast amounts of information. The report envisions that technology will be incorporated into the creation of content as well, from the digital and robotic labs of the future to AI tools that assist in analysis and report generation. Tools and people will coexist, working together to register, validate, disseminate, and archive knowledge. There will also be new forms of expression, such as augmented or virtual reality, which will need to gain acceptance in the scholarly content ecosystem.

However, AI and machine learning tools are only one aspect, if an important one, of this ecosystem. It is often too easy to focus on the technology in the trends we are experiencing, particularly in our current milieu. In 2028, AI tools will be important, but our ecosystem is being transformed by several other digital-native, digital-first technologies. AI is simply the latest example, and it builds upon what came before. AI tools couldn't exist without cloud computation available at scale, and neither could exist without the computing power, the network infrastructure, and the digital text that is ingestible into those models. Yet we still haven't managed to navigate the last generation of digital tools and incorporate them fully into our processes. Perhaps this is why everything old is new again, as we try to figure out the place of the old as society develops.

Public trust in science is under threat because of disinformation and misinformation and it is expected these challenges will only continue to grow. Current and developing technology can facilitate paper mills and the manipulation of outputs and images, which will lead people to doubt unverified information. While scholarly communication is built on verifiability, citation, and peer review, some of these core features are being questioned because of the flood of fake content, the hallucinations of AI tools, the pace of submissions, or some combination of these and other factors.

Despite these forces pulling at the fabric of the scholarly record, many threads still bind us. For example, journals, as acknowledged brands, are an age-old means of conferring signals of quality and reputation. While events like mass retractions may cause people to question whether a journal lives up to that reputation, the role of journals as brands will likely continue well into the future.

The connectivity of the knowledge graph that represents scholarly communication is another trust signal, and it will continue to grow and extend in its forms and connections. In the future, it is envisioned that all research inputs and outputs will be identified and described in this graph, providing a resource for both humans and machines to navigate and discover relevant knowledge. Critically important as it is, the graph also faces potential challenges. It could fragment into lightly connected pools of knowledge because of geopolitical or security-based tensions. The image signals this as separate networks with only light connections between them.

Many factors drive the connectivity of this network, such as its efficiencies and the need for comprehensiveness. Simultaneously, external factors pull this ecosystem apart into discrete segments. As we think about this knowledge graph, it is also important to remember that it is not static: it changes over time and continually expands. The gauges at the bottom of the image indicate not only areas where we can monitor or adjust the inputs, but also where we can monitor the parameters of success and the qualities of the scholarly ecosystem. We keep trying to adjust the ecosystem, and its users (human or machine) have a role in tweaking their own experience of it.

Less obvious, but embedded in the graphic, are references to how we define our collective place and our collective values in this new ecosystem. As you scan the image, look for the peace sign, the student reading a book, the diversity of the people, and the core elements of review, connection, and trust. All of these are critical. Technology is becoming ubiquitous, propelling and advancing our ability to navigate this increasingly complex network, and it is becoming part of the fabric of our society. Fundamentally, it is a tool in service of connection, of discovery, and of communicating advances in our knowledge. Ideally, all of this benefits our quality of life, our humanity, and our individual and collective success.

It is unclear now whether this vision, be it utopian or dystopian, is exactly what will come to pass in five years' time. As Ebenezer Scrooge asked the Ghost of Christmas Yet to Come in Charles Dickens' A Christmas Carol, the core question is whether these things are “the shadows of the things that Will be, or are they shadows of things that May be, only?” Might we change our path, avoid the pitfalls, and reach the best outcomes? As in the story of Scrooge, we too have agency to affect our futures. The decisions we make now about how we embrace these technologies, in what manner, and in what situations, along with the guardrails we construct, will shape our future. The STM Trends 2028 report provides good guidance on what to consider and outlines many of the opportunities, as well as the risks that could deflect us from our path.

Discussion

2 Thoughts on "Flourishing in a Machine-intermediated World: The STM Trends Report"

Thanks Todd. Hylke's presentation on this topic at the STM meeting today raised the idea of a Trust-o-meter. I'm also drawn to the 'honest signaling' TSK post https://scholarlykitchen.sspnet.org/2024/04/16/honest-signaling-and-research-integrity/ and to the work PLOS is doing on open science indicators, i.e., whether data, code, protocols, preprints, ORCID iDs, etc. are present along with the article. A lot of food for thought: as AI and machines become more powerful, we have to feed in the right trusted data and signals, and everything is interconnected. I'm sure there will be more informative discussions on this topic and the identified trends. I do love the image created for the 2028 tech trends; the committee does a great job of capturing the mood!

“Public trust in science is under threat *because of* disinformation and misinformation and it is expected these challenges will only continue to grow.” (My emphasis.)

I'm skeptical that this is a big causal factor. (And notice how it locates the problem in gullible consumers of misinformation and disinformation, rather than asking why people are so distrustful of epistemic institutions that they are receptive to embracing and sharing misinformation, though without necessarily believing it.) Most normal people in the real world don't know much of anything about the problems in scholarly communication. But they see evidence in the news, much of it selectively framed to pander to pre-existing political orientations, of political partisanship among epistemic institutions. (I guess if you count selective framing as “misinformation” or “disinformation,” the claim becomes more plausible, but then nearly all news is guilty of that.)

https://www.researchsquare.com/article/rs-3239561/v1

https://press.princeton.edu/ideas/what-do-you-really-know-about-gullibility

https://www.cambridge.org/core/books/psychology-of-misinformation/2FF48C2E201E138959A7CF0D01F22D84