Editor’s Note: Today’s post is by Stuart Leitch. Stuart is the Chief Technology Officer at Silverchair, where he leads the strategic evolution and expansion of The Silverchair Platform.

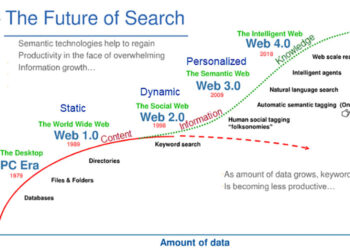

While LLMs (large language models) and RAG (retrieval-augmented generation) are promising, they fall short of human expert intelligence in many activities, and that gap varies widely depending on the task. These technologies have proven genuinely useful and are being widely incorporated into consumer and enterprise software, despite reliability issues. The sheer utility of these technologies will drive their wide-scale adoption by scholarly users well before all reliability or quality issues are brought down to negligible levels. The gaps in capability of LLMs and RAG in particular will narrow (and even invert) over time, but publishers and end users need education on those gaps to make investment decisions or to use Generative AI (GenAI) tools confidently and effectively.

Jagged Edges

RAG solutions, when applied to scholarly literature, provide responses that often appear expert level in their accuracy and usefulness. However, RAG solution performance is not equal across all tasks, leading to a phenomenon known as “jagged edges,” where technology’s gaps become apparent. These gaps stem from intrinsic limitations in LLMs and specific implementations of RAG patterns. LLMs are designed to generate plausible predictions rapidly, but they tend to produce less reliable outputs when working with information or concepts that were very sparse in the training data or within rapidly evolving domains. This issue is particularly pronounced in fields that demand high specificity and up-to-date knowledge, such as medicine and law.

The RAG approach attempts to mitigate LLM knowledge gaps and hallucination tendencies by anchoring model responses in authoritative sources, like a publisher’s reference or research corpus. Nevertheless, the effectiveness of the RAG pattern is inherently limited by the quality of information it can retrieve and integrate into the LLM’s context window, where the LLM does in-context, or just-in-time, learning. Retrieving context specific to the user query in order to generate accurate, high-quality, grounded responses remains a challenging problem, especially in specialized and fast-changing fields.

In the context of scholarly and professional domains, the jagged edges manifest through several key challenges:

Query Interpretation Challenges

- Complex Query Interpretation: LLMs may struggle to grasp the full nuance of sophisticated queries, leading to responses that miss the mark. For instance, a highly specialized question about a specific scientific principle that requires substantial decomposition might be confused with a more general inquiry, resulting in answers that lack depth and specificity.

- Ambiguity and Context Sensitivity: Terms or concepts that carry different meanings across disciplines can confuse LLMs, leading to generalized responses that fail to address the specific needs of the inquiry.

Information Retrieval Limitations

- Search Relevance Failure: When you search with Google, you are the human in the loop who selects which results to click on. In RAG, by contrast, the top two to three retrievals are injected into the context window without human involvement. How well the LLM can respond to the user’s query is massively dependent on the quality of the retrieved context. Because search is far from a perfected technology, failure to retrieve the most appropriate underlying content is one of the biggest challenges in a RAG system.

- Distinguishing Between Established and Emerging Ideas: LLMs might not effectively differentiate between widely accepted theories and novel, potentially groundbreaking but less-established ideas, leading to an over- or under-emphasis on certain concepts.

- Overemphasis on Recency at the Expense of Rigorous Depth: If retrieval is tuned to heavily favor the most recent primary literature articles, without adequately retrieving or highlighting secondary literature that provides essential context, results can be skewed by new literature with controversial views or findings that haven’t been confirmed or replicated by others.

- Anchoring in Well-Established Content Can Obscure Innovation: Conversely, an overemphasis on detailed, analytical reference content can lead to overlooking recent developments, leaving users without critical up-to-date information.

- Constrained Context Limits LLM Abilities: Prompt length and volume of retrieved context injected into the context window are the most significant operational cost drivers beyond model choice. For pragmatic reasons of cost and latency (response time), it often makes sense to limit the amount of data sent to the LLM to what is needed to generate a sufficient answer to a user’s query. This heavily impacts information synthesis as well as reasoning and analysis as outlined below.
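
The retrieval step described in this list can be illustrated with a minimal sketch. It assumes hand-made three-dimensional toy vectors and hypothetical names (`retrieve_top_k`, `build_prompt`, the chunk ids); a real system would obtain vectors from an embedding model and search a vector database. The point is only that whatever ranks highest is passed to the LLM with no human choosing among the results.

```python
import math

def cosine(a, b):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0

def retrieve_top_k(query_vec, chunks, k=2):
    """Rank chunks by similarity to the query and keep the k best."""
    ranked = sorted(chunks, key=lambda c: cosine(query_vec, c["vector"]), reverse=True)
    return ranked[:k]

def build_prompt(question, retrieved):
    """Inject the retrieved chunks into the prompt; nobody reviews them first."""
    context = "\n\n".join(f"[{c['id']}] {c['text']}" for c in retrieved)
    return (
        "Answer using only the context below.\n\n"
        f"Context:\n{context}\n\nQuestion: {question}"
    )

# Toy corpus: ids, texts, and vectors are invented for illustration only.
chunks = [
    {"id": "review", "text": "(text of a review article)", "vector": [0.9, 0.1, 0.0]},
    {"id": "trial", "text": "(text of a recent trial report)", "vector": [0.7, 0.7, 0.0]},
    {"id": "refs", "text": "(text of a reference list)", "vector": [0.95, 0.05, 0.0]},
    {"id": "unrelated", "text": "(text of an off-topic chunk)", "vector": [0.0, 0.0, 1.0]},
]

query_vec = [1.0, 0.0, 0.0]  # stand-in for the embedded user query
top = retrieve_top_k(query_vec, chunks, k=2)
top_ids = [c["id"] for c in top]
prompt = build_prompt("What does the evidence show?", top)
```

In this toy data the reference list is constructed to score highest, so it reaches the context window ahead of the review article. A human scanning search results would likely skip it; the pipeline does not.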

Information Synthesis Difficulties

- Synthesizing Diverse Sources: The challenge of integrating varied types of information, such as experimental results, theoretical discussions, and case studies, can lead to incomplete or disjointed responses, undermining the utility of a RAG solution for comprehensive research.

- Handling Contradictory or Conflicting Information: When multiple retrieved sources present contradictory findings, the LLM might struggle to reconcile these differences and provide a coherent synthesis or explanation.

Reasoning and Analysis Limitations

- Depth of Reasoning: Responding to complex inquiries can require deep, nuanced understanding of data or perspectives from multiple domains. The amount of synthesis required can exceed an LLM’s capability to produce expert-level analysis, particularly in interdisciplinary areas.

- Handling Methodological Nuances: Different research methodologies, study designs, and analytical approaches can significantly impact the interpretation and generalizability of findings. The LLM might not fully account for these methodological nuances when synthesizing information from multiple sources.

- Significance of Reported Findings: LLMs can struggle to adequately recognize the significance of reported findings based on criteria an expert would recognize, such as larger sample sizes, longitudinal studies, and later-stage or randomized clinical trials, or on statistical indicators such as confidence intervals or P values.

- Recognizing and Conveying Uncertainty: Scholarly publications often discuss limitations, unknowns, and areas requiring further research. The LLM may not adequately capture and communicate the level of uncertainty or the need for additional investigation expressed in the sources.

- Limited Ability to Detect Research Fraud: LLMs struggle to catch signals that a knowledgeable human could. That could include: recognizing the authors, lab, or institutions; considering the suitability of the study design; evaluating disclosed approvals, funding/grant information, or conflicts of interest; examining submitted, accepted, and publication dates or the publication’s editorial policies and board; or the general plausibility of the results.

Domain-Specific Challenges

- Contextual Definitions: Like jurisdictional differences in law, specialized fields have their own sets of rules, theories, and practices that can vary significantly by context. LLMs can get confused about this context.

- Recognizing Discipline-Specific Conventions: Nearly every field has its own unique conventions or standards for presenting and interpreting information. LLMs may not be attuned to these norms, potentially leading to misinterpretations or inappropriate comparisons.

- Dealing with Evolving Terminology and Definitions: As research advances, the terminology and definitions in use can change over time. LLMs and encoding models are “frozen,” having been trained on data up to a cutoff date that can be months or years past. Performance around terms not understood by the LLM/encoder will be degraded, leading to potential confusion or mischaracterization.

Bias and Fairness Concerns

- Biased Data and Fairness: Biases present in LLM training data can be reflected in the outputs, potentially perpetuating stereotypes or outdated perspectives in fields that have evolved.

- Internal Knowledge May Override Retrieved Context: In some cases where an LLM is presented with information that contradicts its world view, it may seek to reconcile the two rather than strictly limit itself to the context supplied via the RAG pattern.

- Contextualizing Historical Data or Findings: When dealing with older publications, the AI may fail to properly situate findings within the knowledge of their time, potentially leading to misinterpretations or false equivalencies with current knowledge.

Addressing these jagged edges requires ongoing improvements in LLMs, RAG implementations, and the methodologies for integrating and evaluating diverse and evolving information sources.

Trade-offs/Tensions Between Quality and Cost

The capabilities of a RAG system are also heavily impacted by cost, leading to major tensions in solution designs.

There is a strong logarithmic correlation between cost (development, operational, and maintenance) and quality (usefulness, accuracy, etc.); that is, quality comes at an appreciable cost, particularly at the upper limits or cutting edge of these technologies. Cost-quality tensions can generally be bucketed as follows:

- LLM strength: quality and cost are highly correlated. Claude Opus, arguably the strongest model currently, is around 150 times the cost of Mistral’s Mixtral 8x22B, a fast and cheap but respectable model ($15 per million input tokens for Opus compared to 10¢ for Mixtral with 100 tokens equating to around 75 words).

- Depth and breadth of material injected into context window: sophisticated analysis, where the answer can’t just be found from a single authoritative source, often requires a lot of information to be injected into the LLM’s context window. This can require a lot of tokens.

- Sophistication of content preparation: pre-preparation prior to ingestion to weight content for authority and suppress content that could lead to misleading results adds up-front cost. For example, reference sections often yield very high semantic similarity scores but are not sufficient for an LLM to draw conclusions from (although they will happily do so).

- Encoder strength and dimensionality: stronger encoders are more expensive and greater dimensionality increases vector database costs. Embedding models take content and reduce it to a fixed number of dimensions, each expressed as a floating-point number. These are stored in a vector database, which allows for approximately finding the nearest neighbors in dimensional space, or the most semantically similar content. This is the core of the retrieval in RAG. The more dimensions, the more floating-point numbers need to be stored in the vector database, increasing both storage and computational cost.

- Chunking strategy: strategies such as summarization, hierarchical retrieval, and sentence-window retrieval all add significant cost to both content ingestion and retrieval.

- Retrieval strategy: techniques like query rewriting, HyDE, hybrid search, ReAct, and FLARE all improve quality at significant additional cost.

- Validation strategy: additional steps to check the quality of responses will catch many outliers but add engineering complexity and incur higher token consumption costs.

- Sophistication of ranking strategy: re-ranking retrieved results adds engineering effort and the compute costs of cross-encoding.

- Agentic strategies: as frontier models become better at following precise instructions, it is possible to create recursive loops where an agent (or many agents) builds a research plan and forages for information until satisfied. Sophisticated agentic techniques such as FLARE typically incur 10x the token cost of simpler approaches. Within a few generations, it is likely models will have the reasoning abilities and be sufficiently reliable at instruction following to perform comprehensive literature reviews, at potentially unbounded cost.
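
The arithmetic behind the model-strength and dimensionality items above can be sketched as follows. The per-million-token prices and the 100-tokens-to-75-words ratio are the ones quoted in this post; the 3,000-word context, the one-million-chunk corpus, the 1,536 and 3,072 dimension counts, and the 4-byte float size are assumptions chosen only to make the numbers concrete, and all function names are hypothetical.

```python
# Prices quoted in the post, in US dollars per million input tokens.
PRICE_PER_MILLION_USD = {"opus": 15.00, "mixtral": 0.10}

# Rule of thumb from the post: 100 tokens is around 75 words.
TOKENS_PER_WORD = 100 / 75

def words_to_tokens(words):
    """Approximate token count for a given number of words."""
    return round(words * TOKENS_PER_WORD)

def input_cost_usd(tokens, price_per_million_usd):
    """Input-token cost of a single request."""
    return tokens * price_per_million_usd / 1_000_000

def vector_storage_bytes(num_chunks, dimensions, bytes_per_float=4):
    """Raw size of the stored embeddings, ignoring index overhead."""
    return num_chunks * dimensions * bytes_per_float

# Assumption: 3,000 words of retrieved context are injected per query.
context_tokens = words_to_tokens(3000)
cost_opus = input_cost_usd(context_tokens, PRICE_PER_MILLION_USD["opus"])
cost_mixtral = input_cost_usd(context_tokens, PRICE_PER_MILLION_USD["mixtral"])
cost_ratio = cost_opus / cost_mixtral

# Assumption: one million chunks, embedded at two different dimensionalities.
storage_small = vector_storage_bytes(1_000_000, 1536)
storage_large = vector_storage_bytes(1_000_000, 3072)

print(f"{context_tokens} tokens: Opus ${cost_opus:.4f}, Mixtral ${cost_mixtral:.4f}")
print(f"Embeddings: {storage_small / 1e9:.2f} GB vs {storage_large / 1e9:.2f} GB")
```

Under these assumptions the per-query input cost differs by the same factor of roughly 150 as the list prices, and doubling the embedding dimensionality doubles raw vector storage. Output tokens, index overhead, and any extra retrieval or validation calls are left out of this sketch.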

Scholarly publishers’ value propositions and reputations are grounded in the authority, trust, and quality of content they produce. Therefore, they desire a conversational interface across their corpus to be of commensurate quality. This will be particularly the case for legal and medical practitioners who will be using the product directly to influence their work.

The fundamental tension is that unlike web distribution of static content, which has enormous scale advantages due to very low marginal costs, the RAG pattern has high marginal costs (10-1000X) that scale linearly. While token costs remain high, for general scholarly applications outside of specialty practitioners, the central business or product challenge will be how to generate sufficient incremental revenue to offset the vastly higher compute costs to use GenAI technology to generate responses to queries.

These tensions and trade-offs are why we built the recently released “AI Playground,” where our publishers can enter a query and see the differences in answer quality, compute time, and cost of various chat and embedding models, as well as a host of other settings. Publishers need these sorts of safe spaces to consider the trade-offs of these elements of system design, see the shortcomings and strengths, and experiment with possible use cases. We feel that these hands-on approaches will help our community start more conversations about what the GenAI future of scholarly publishing could look like.

Meanwhile, it’s important to be aware of the jagged edges outlined above, so we can collectively make informed decisions that benefit our users, our missions and businesses, and research at large.