The ultimate value of altmetrics remains an open question. Altmetrics could provide a paradigm-shifting toolset that radically remakes the way we judge the value of a researcher’s work, or the field could prove to be merely the latest flavor-of-the-month, riding the hype cycle to an eventual niche role in the periphery of the research career structure. If the former, then a major hurdle to overcome is a reliance on factors that can be easily gamed: measurements of interest and attention. The power of publicity efforts to drive performance on these metrics offers journals a potential new arena for differentiation and added value for authors.

Any time the subject of altmetrics comes up, the question of gaming is immediately raised. But identifying actual gaming — what behaviors are acceptable and what should be considered cheating — is not as straightforward as you might think. Euan Adie, founder of Altmetric, recently posted an insightful look at the notion of gaming altmetrics.

Adie rightly argues that it’s perfectly reasonable for a researcher to want to draw attention to his or her own work. If you don’t want others to hear about the results and to drive future research, then why bother working on it in the first place? But where do you draw the line between good-faith efforts to spread knowledge and cynical attempts to game the system? Adie offers a variety of scenarios, from a researcher sending out a tweet about her new paper to a researcher purchasing retweets from a shady promotional service. Those extremes offer fairly clear-cut, black-and-white scenarios, but the activities listed in between start to fall into shades of grey.

Adie’s examples all revolve around the actions of the researcher herself. He doesn’t touch on the promotional efforts provided by scholarly journals (which should perhaps be added as number 61 on his list). Journal publishers regularly do a tremendous amount of work to draw attention to articles. The range of activities includes full-blown press conferences, press releases, interviews arranged with major media outlets, blog posts, tweets, social media campaigns, Google AdWords campaigns, email marketing campaigns, advertising, awards, etc., etc.

It’s unclear where these sorts of activities would fall along the acceptable/gaming spectrum. They are legitimate efforts to disseminate information, and valuable services that authors appreciate. At the same time, anything that smacks of advertising is likely to induce a knee-jerk reaction from the academic community, which, in principle if not in action, often considers the pure pursuit of knowledge to be above such sordid behaviors.

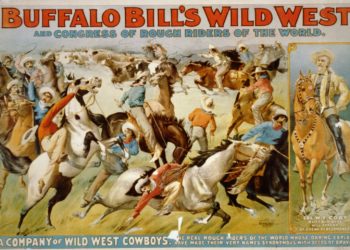

But if altmetrics take hold in the funding and career structure of academia, then expect marketing efforts to ramp up massively. Each university will likely build a publicity department (or expand its current one) if it means an increase in grant funding. Journal publishers will find themselves with a new means of differentiation, a way to set themselves apart from their competitors in the eyes of authors: “Come publish your paper with my journal, and here’s the marketing campaign we’ll mount on your behalf.” You can almost picture the Mad Men style pitch from competing journals in the laboratory conference room (though hopefully in a less smoke-filled atmosphere, unless the fume hoods are on the fritz that day).

Like nearly every other recent development in the world of journal publishing, this would favor the bigger publishers, further entrenching the current power structure. Oxford University Press, for example (full disclosure: my employer), already has a large, global in-house marketing team, augmented by a well-staffed publicity group charged solely with reaching out to traditional media outlets and driving attention through social media channels, including a highly trafficked blog and more than half a dozen widely followed Twitter accounts. There’s little chance that a small, independent publishing house or research society can offer authors the same efforts and reach that come with the economies of scale inherent to the biggest publishing houses.

So if attention becomes the coin of the realm for researchers, expect even more market consolidation and even further power granted to those journals which offer the most visible platforms to authors.

All of this may be something of a long shot, though. Ultimately, what funding agencies and research institutions want to measure is the quality of the work done, and the value of the results produced. A researcher’s ability to draw attention is not a direct proxy for curing a disease, solving an important problem or changing the way we see the world. As Adie clearly states in his post, “Remember that the Altmetric score measures attention, not quality.”

Quality remains the hole in the middle of the Altmetric donut, and without clear measures of quality, altmetrics may never take on the central role many are seeking. The metrics offered may be better suited to particular areas of research — the development of software, tools and methodologies for example, where tracking the uptake and usage of the research end product can indeed offer a direct measure of success.

Until these questions are resolved, a shift to a publicity-fueled author economy remains speculative, something of a fascinating alternative-universe future that raises intriguing questions. Does a move to altmetrics merely replace chasing the journal with the best Impact Factor with chasing the journal with the strongest marketing team? Does a triumph of altmetrics signal an end to the ivory tower, a final breakdown of the separation (or at least the illusion of separation) between the academic and commercial worlds, creating a hype-fueled market where each new discovery becomes just another product to be sold to the masses? Is this the latest intrusion of the Silicon Valley/internet startup mindset into academia, where the attention and popularity of a product seem more important than the actual value it generates? And perhaps most important, is this progress, and is it the right path for academia?

Discussion

45 Thoughts on "Driving Altmetrics Performance Through Marketing — A New Differentiator for Scholarly Journals?"

I agree that gaming altmetrics is a highly interesting and relevant problem. However, it’s basically the same problem as for “classic”, i.e. citation-based, impact factors. With the rise of, e.g., Google Scholar (which weights articles heavily based on citation counts), there is an incentive to manipulate citation counts to get good rankings on Google Scholar. And since Google Scholar indexes (academic) PDFs from the web, the question arises: how do you get your articles indexed (and ranked well) by Google Scholar?

We published a couple of papers on these topics (academic search engine optimization, and gaming Google Scholar):

http://www.docear.org/docear/research-activities/#academic_search

Citation is indeed an imperfect metric: it’s very slow and, as you note, susceptible to gaming. It should be noted, though, that the bar for gaming is much higher, at least for the metric used as the currency of the realm, the Impact Factor. It’s not as easy as buying tweets or putting up fake papers on a website, though it can be done.

Yes, manipulating the original impact factor is difficult. But manipulating citation counts on, e.g., Google Scholar is quite easy, probably even easier than buying tweets if you have some technical knowledge of how to create PDFs and upload them to the web (and it’s free ;-).

It is important to distinguish different kinds of altmetrics, which may have different uses. On one hand, there are short-term metrics such as tweets and page views. These primarily play a news role, where people want to know if something big may have happened and citations take far too long. Marketing, and even gaming, can play a major role here. But promotion and funding are generally based on longer-term performance, over years, not weeks. Here long-term usage measures are likely to be significant, and these are less easily gamed. I personally do not think gaming is much of an issue at this scale. Mere possibility does not make a threat real.

I would also argue that long-term usage is even better than citations as a measure of quality. We are, after all, talking about journal articles, not romance novels. Usage of journal articles will usually be a strong measure of professional interest, hence impact. Of course there will also be other cases, such as an article that touches on a political controversy, but these are easily recognized. One must keep in mind that job and funding decisions typically involve a fair amount of individual scrutiny.

David C:

I am curious, does Oxford devote much budget to hyping individual articles? In my experience we promoted journals but not articles.

I tend to think of the cold fusion news reports and the aftermath when thinking about hyping individual articles.

“Budget” may not be the right measure, as so many of the marketing channels today don’t require a direct spend, just staff time and effort, which is likely attributed to “overhead”. But like most publishers, we do expend a good amount of effort promoting individual articles, through programs like journals naming an “Editor’s Choice” article in each issue, press releases, tweets, blogs, and all sorts of email campaigns (“read the most cited articles in journal X”).

There is indeed a danger of “doing science via press conference” as was noted for cold fusion, arsenic life and the Darwinius fossil in recent years alone. There is a line one has to be careful not to cross and the research needs to speak for itself.

Has the social media advertising resulted in any measurable increase in either readership or subscriptions?

It’s hard to say directly. When we do a big push on the OUP Blog or Twitter, it’s usually for an article that has been specially selected for broad interest or significance. So on some level, you can’t clearly state that the marketing campaign is solely responsible for the increase in readership; some of it may just be due to the excellence of the article.

But for example, an article like this was featured on the OUP Blog and Twitter:

http://blog.oup.com/2012/05/how-do-humans-ants-and-other-animals-form-societies/

The article saw nearly 4X the readership of other articles in the same issue.

John Wanamaker once said he knew half his advertising dollars were wasted; he just didn’t know which half. I would be similarly skeptical about any supposed connection between advertising and a volatile number like citation counts. The authors who cite your work will find it either by perusing their regular journals or through search services.

Advertising, however, may have value in serving authors who want to bring attention to their research programs. Competition for budget dollars is fierce and it never hurts to get your results before the public and taxpayers. Public relations is, therefore, another service a journal provides to keep its authors happy.

I’ve never seen any studies showing correlation between marketing and citation. That’s perhaps another positive benefit of citation as a metric. Marketing efforts, however, can be extremely effective in increasing usage and awareness of articles. There’s great value in this, as you note in author satisfaction, but also in terms of driving subscriptions (usage is nearly always one of the most important factors for library purchasing decisions), and it likely also can help in driving submissions.

David C., can you measure any of what you say? I am not being argumentative, just genuinely curious.

One can readily track usage, and use a unique link for each marketing campaign to trace readers directly back to that campaign. There’s always some wiggle room for interpretation (as noted above), but we make an effort to collect as much direct information as possible on the effectiveness of any given campaign.
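As a rough illustration of how campaign-specific links let you attribute readers, here is a minimal Python sketch that tallies article visits by a campaign tag in the landing URL. It assumes the widely used `utm_campaign` query-parameter convention and uses made-up URLs on a hypothetical `journal.example.org`; neither is a description of how OUP or any particular publisher actually tags its campaigns.

```python
from collections import Counter
from urllib.parse import urlparse, parse_qs


def visits_by_campaign(landing_urls, param="utm_campaign"):
    """Count visits per campaign tag found in each landing URL's query string.

    Assumption: each campaign (blog post, tweet, email) links to the article
    with its own value for `param`. Visits without the tag are grouped under
    "(untagged)", e.g. readers arriving from search engines.
    """
    counts = Counter()
    for url in landing_urls:
        values = parse_qs(urlparse(url).query).get(param)
        counts[values[0] if values else "(untagged)"] += 1
    return counts


# Hypothetical access-log entries for a single article.
log = [
    "https://journal.example.org/article/123?utm_campaign=blog_may",
    "https://journal.example.org/article/123?utm_campaign=twitter",
    "https://journal.example.org/article/123?utm_campaign=blog_may&utm_source=blog",
    "https://journal.example.org/article/123",
]
counts = visits_by_campaign(log)
```

As the comment notes, this only shows where readers arrived from; it cannot separate the campaign's effect from the article's intrinsic appeal.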

Thank you.

Things seem to be changing rather quickly.

I agree usage and citations seem to correlate. When our journal switched to a major publisher a few years ago, usage increased dramatically. Citations followed. You can’t cite us if you can see us!

Was this happy outcome attributable to our new publishing partner’s advertising campaign, or simply to having their sales representatives include us in their library deals? No good way to tell.

I’m not sure there’s a direct correlation. This study showed that you can massively increase an article’s readership while having little to no effect on citations:

http://www.fasebj.org/content/25/7/2129

I suppose what matters is who you’re reaching with your marketing campaign. Regardless, increasing readership is a worthy goal unto itself.

Correction: “You can’t cite us if you can’t see us.” Sorry!

Does the daily arXiv mailing that lists the latest preprints constitute a form of marketing?

The author of this paper http://arxiv.org/abs/0712.1037 found that “e-prints appearing at or near the top of the astro-ph mailings receive significantly [2 times] more citations than those further down the list.”

Kitchen chef Phil Davis wrote a post about this paper and its follow-up here http://scholarlykitchen.sspnet.org/2008/08/07/the-importance-of-being-first/

Which proposals get funded is typically based on peer review, not public or taxpayer perception. Too much publicity can actually be harmful.

I agree specific proposals are judged by peer review. On a programmatic level, that’s not the case. I spent a career in a major government science agency, where getting the word out to the public and, ultimately, their representatives (and our appropriators!) was critical.

No one would question the appropriateness–indeed, the necessity–of marketing for scholarly books and for journals as journals also. Why should it be considered suspect for journal articles to be promoted? Is it just because there is not a well-developed system of metrics for assessing value in the case of books as there is for journal articles? And, actually, it is not usually articles that are assessed but the overall reputation of the journals the articles appear in, right? Article-level assessment is relatively new, isn’t it?

I don’t think there’s currently an issue with the promotion of articles (except in cases where things get carried away like the arsenic life or Darwinius fossil papers, where the hype far exceeded what was shown by the papers). Both scientists and publishers share a common mission of spreading information as broadly as possible.

As you note, the current assessment systems are based on citation, and (with some problems) done at the title level through the Impact Factor. The question I’m posing is what would happen if one were to move to an assessment system heavily reliant on “altmetrics”, which at the moment are based on measuring things like attention and interest.

If those were the criteria for assigning funding and career advancement, we’d likely see an arms race in the marketing efforts made, and hence, something of a subversion of the system.

Perhaps you missed my original comment, David, as you did not respond to it. How do you use marketing to manipulate, say, a two-year usage profile? That is the kind of altmetric that funding and career decisions might be based on, not a week of tweets. Give the community some credit for intelligence.

Sorry David, I thought the answer was fairly obvious. As noted in another comment, an article featured on OUP’s widely read blog for example, greatly outperforms articles not given the same promotion. Those usage numbers continue at a higher rate given the better discoverability the article receives through search engines. The same is usually true for an article featured in the New York Times.

Another route is demonstrated through Phil Davis’ study here:

http://www.fasebj.org/content/25/7/2129

Articles made freely accessible saw nearly 2X the number of html downloads over 3 years as subscription access articles in the same journals (though no difference was seen in citations). That would mean that authors who can afford to pay an APC and free up their article would literally be buying success, the rich getting richer, receiving career advancement and funding based on their ability to spend rather than the quality of their research.

And if you have a full time staff dedicated to article promotion, why would you stop at a week of tweets? A regular series of promotions via email, Twitter, banner ads, prominent placement on the journal’s webpages, etc., can all continue to drive traffic.

You are making too many claims to reply to all, but as I said in another comment if someone gets high numbers due to intense marketing their peers will know that. It might even work against them as the community dislikes publicity hounds.

More deeply, we are talking about something like a million articles a year so I do not see publicity campaigns disrupting a system based on usage, which I think is a far better impact metric than citation. For that matter, impact depends on discovery and access so marketing increases impact. A great paper that is never read is useless. We are trying to measure impact not quality, right? If we did not want researchers to work hard to get their ideas out we would not value publication so much. Publication per se is marketing, as you are using the term.

I disagree with both of the notions you’re presenting here:

Usage is a far better impact metric than citation:

Taking a look at the most read articles in the history of all PLOS journals:

http://almreports.plos.org/add-articles?utf8=%E2%9C%93&everything=&author=&author_country=&institution=&publication_days_ago=-1&datepicker1=&datepicker2=&subject=&cross_published_journal_name=All+Journals&financial_disclosure=&commit=Search&sort=counter_total_all%20desc

The top ten includes the following:

Fellatio by Fruit Bats Prolongs Copulation Time

Quantifying the Clinical Significance of Cannabis Withdrawal

Genome Features of “Dark-Fly”, a Drosophila Line Reared Long-Term in a Dark Environment

An In-Depth Analysis of a Piece of Shit: Distribution of Schistosoma mansoni and Hookworm Eggs in Human Stool

Do you think this is representative of the most impactful papers that PLOS has ever published in all its journals? Or are there perhaps other reasons why these sorts of papers are the most read, such as the Dark Fly paper which made it to the front page of Reddit?

Simply put, attention is not the same as impact. Citation, though flawed, at least offers the concept that the work being cited inspired or had an influence on the work citing it, hence an “impact”. A download merely suggests someone wanted to take a look at the paper, not even that they read it or found it useful.

if someone gets high numbers due to intense marketing their peers will know that

Perhaps true, but not always the case, and more importantly, one of the key reasons we have metrics is so people who are not directly involved in a particular field can get a sense of the importance and value of the work. If an administrator is involved in a tenure decision for a researcher for example, an accurate set of metrics can help rank candidates. That administrator is likely going to be unfamiliar with the marketing tactics taken by the particular journals. Those well versed in a field and expert in an area of research generally have their own sense of the quality of work done by a researcher, and are less reliant on metrics. So those who would have the intimate knowledge of how those metrics arise are conversely those less likely to rely upon them in the first place.

You are still making what I call an argument from outliers. The issue is understanding the role that every paper is playing in a system where millions of papers are playing at once. Altmetrics have the potential to do this much better than citations alone. Goofy counter examples do not change this fact.

Sorry, no, this is common to many, if not most, journals. Here’s the current most read list for a Toxicology journal I work with:

The Safety of Genetically Modified Foods Produced through Biotechnology

Low Concentration of Arsenic-Induced Aberrant Mitosis in Keratinocytes Through E2F1 Transcriptionally Regulated Aurora-A

A Short History of Lung Cancer

Arsenic Exposure and Toxicology: A Historical Perspective

Mechanisms of Hepatotoxicity

Here are the current most cited articles:

High Sensitivity of Nrf2 Knockout Mice to Acetaminophen Hepatotoxicity Associated with Decreased Expression of ARE-Regulated Drug Metabolizing Enzymes and Antioxidant Genes

The Plasticizer Diethylhexyl Phthalate Induces Malformations by Decreasing Fetal Testosterone Synthesis during Sexual Differentiation in the Male Rat

Perinatal Exposure to the Phthalates DEHP, BBP, and DINP, but Not DEP, DMP, or DOTP, Alters Sexual Differentiation of the Male Rat

Male Reproductive Tract Malformations in Rats Following Gestational and Lactational Exposure to Di(n-butyl) Phthalate: An Antiandrogenic Mechanism?

Pulmonary Toxicity of Single-Wall Carbon Nanotubes in Mice 7 and 90 Days After Intratracheal Instillation

No overlap whatsoever. Here are the same lists for a Gerontology journal:

Most Read

The Meaning of “Aging in Place” to Older People

Multiple Levels of Influence on Older Adults’ Attendance and Adherence to Community Exercise Classes

Japan: Super-Aging Society Preparing for the Future

The Physical and Mental Health of Lesbian, Gay Male, and Bisexual (LGB) Older Adults: The Role of Key Health Indicators and Risk and Protective Factors

Predictors of the Longevity Difference: A 25-Year Follow-Up

Most Cited

Assessment of Older People: Self-Maintaining and Instrumental Activities of Daily Living

Caregiving and the Stress Process: An Overview of Concepts and Their Measures

Relatives of the Impaired Elderly: Correlates of Feelings of Burden

Progress in Development of the Index of ADL

Psychiatric and Physical Morbidity Effects of Dementia Caregiving: Prevalence, Correlates, and Causes

Again, no overlap. I can give you a few hundred more examples if you’d like. What people click on (and maybe read, or maybe abandon right after the abstract) is not the best measure of impact. Compared to articles that were cited as being influential and leading to further experiments and the gain of new knowledge, knowing an article was read a lot doesn’t tell you a whole lot.

Who had more impact on the development of modern music? Louis Armstrong or Britney Spears? Given that Britney has sold more music over her career than Louis, are you suggesting she’s a more important, more impactful musician? If you were giving out NEA grants, would you offer her funding or Louis?

One presumes that the articles featured by OUP and NYT are chosen because of their importance. This may explain much of their success.

Sometimes. Others are chosen because they’re likely to have broad interest among the general public. “Popular” is not a synonym for “Good” or “Important”.

Popularity may well be importance. For example papers that are widely read by non-publishing practitioners such as doctors and engineers are very important but get no citations.

Not all research is done in the medical and STM world.

But yes, for some types of articles, clinical practice articles for one example, readership may offer insight into their impact (note this is discussed in the blog posting above). As noted, this may be useful in some niches, but may not be the most relevant metric across the board.

It seems to me that altmetrics is important and valid precisely because it measures attention, not quality or importance or what have you. It measures word of mouth, which we previously couldn’t measure. It also correlates to a new style of consumption, that of online access and immediacy.

I don’t know what role altmetrics will have in academic research, but it certainly does not occupy a niche role in the commercial sector, and should not be dismissed.

http://www.theverge.com/web/2013/10/7/4811410/nielsen-tv-twitter-rating-starts-today

I have just never seen or heard an academic scientist say at a meeting: “Gee, I had 1,000 tweets on my new article. How is yours doing?”

Perhaps you do not know anyone that lucky, Harvey, or unlucky depending on why thousands of people are discussing their article. In either case it is important. One big difference between attention and citation is that attention is often bad news, but citation seldom is. This is why attention metrics often include semantic issue analysis. The question is what is the issue driving the attention?

But by the same token, it is wrong to assume that a citation means the author’s work was influenced by the cited work. Many citations are used at the beginning of an article to explain the context of the work. Finding these cited articles may be a separate little project done when writing the article, after the work is done, so they have no direct bearing on that work. The question is then: what is the issue driving the citation?

Note too that we are talking about the future not the present. Altmetrics are just beginning to be felt, and hyped unfortunately. But we clearly have new data that is just as important as citation, if not more so, when it comes to seeing where ideas go and how they work.

DavidW: what is that new data that illustrates that it is just as important as citation?

Having published some of the most often cited review journals and ones with the highest impact factor in a given discipline, I understand how many citations are used.

Thus, I am most curious as to what the new altmetrics are contributing to the furtherance of science.

Harvey, we now have the data that can potentially tell us everywhere an idea goes, plus probably what it does when it gets there. I think full-text semantic analysis of all the message traffic, including journal articles, is the key. The text is the raw data I am referring to, and much of it is only newly available as part of the eRevolution.

In other words, text in mineable form is better data than citations when it comes to tracking the impact of an idea. I can track an idea from paper to paper and elsewhere based on the language used.

DavidW: But, wouldn’t the idea have to be cited?

Ideas are not tracked via citation, rather via language, Harvey. Here is one basic version of the argument. We are looking for influence of one person’s work on others. Suppose, contrary to fact, that every citation actually reports influence. That is, if y cites x then x’s work influenced the work y is reporting. Here is the problem. Suppose B has 20 citations including one to A. Thus A influenced B. But then suppose C has 20 citations, including one to B but none to A. We cannot tell if A influenced C or not. C may have been influenced by aspects of B’s work that had nothing to do with A.

Suppose as well that A coins new words or phrases in reporting its work. This happens very frequently in science because we are constantly talking about some aspect of the world that has not been talked about before, in fact that is the job of a journal article. (Disclosure: this is my doctoral thesis result, a theory of scientific language.) For example suppose A coins the term “Feynman diagram.” If C uses this term then we know that C’s work was influenced by A. I call this relation a “natural citation” (to coin some language of my own.) People can choose who to cite but they generally cannot choose which language to use. They must use the language that refers to the things they are talking about.

Thus, by mapping the diffusion of the scientific language that originates in a given body of work, we can map the influence of that body of work. It is not foolproof, but I think it is much better than mapping citations. Note that these maps will probably not be network diagrams. In the example above, we do not know whether C got the idea from B or from A, nor do we care, because we only want to know whom A has influenced. It is characteristic of diffusion processes that they are not mapped by tracking every component motion, and so it is with ideas. Plus we can do this for all available message traffic, not just journal articles. Of course there is a lot more to it, but this is one simple version.
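To make the contrast in the A/B/C example concrete, here is a minimal Python sketch. The `Paper` record, the toy four-paper corpus, and the plain substring match on a coined term are all hypothetical simplifications for illustration, not DavidW’s actual method, which would presumably rely on much richer semantic analysis. Counting direct citations credits A only with papers that cite it explicitly; tracking the term A coined also picks up C, which cites only B.

```python
from __future__ import annotations

from dataclasses import dataclass, field


@dataclass
class Paper:
    pid: str
    year: int
    cites: set[str] = field(default_factory=set)
    text: str = ""


def direct_citers(corpus: list[Paper], target: str) -> set[str]:
    """Citation-based view: papers that explicitly cite `target`."""
    return {p.pid for p in corpus if target in p.cites}


def natural_citers(corpus: list[Paper], origin: str, coined_terms: list[str]) -> set[str]:
    """Language-based view (simplified 'natural citation'): papers published
    after `origin` whose text uses any term that `origin` coined."""
    origin_year = next(p.year for p in corpus if p.pid == origin)
    hits = set()
    for p in corpus:
        if p.pid == origin or p.year <= origin_year:
            continue  # only later papers can have been influenced
        lowered = p.text.lower()
        if any(term.lower() in lowered for term in coined_terms):
            hits.add(p.pid)
    return hits


# Hypothetical toy corpus mirroring the example: A coins the term,
# B cites A, C cites only B, and D cites A without using A's term.
corpus = [
    Paper("A", 1949, set(), "We introduce the Feynman diagram as a bookkeeping device."),
    Paper("B", 1950, {"A"}, "Using Feynman diagrams, we compute the correction."),
    Paper("C", 1955, {"B"}, "A Feynman diagram analysis of the scattering process."),
    Paper("D", 1956, {"A"}, "An unrelated numerical method."),
]

print(sorted(direct_citers(corpus, "A")))                       # citation view
print(sorted(natural_citers(corpus, "A", ["Feynman diagram"])))  # language view
```

Note that the two views disagree in both directions: D cites A without adopting its language, and C adopts A’s language without citing it.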

DavidW: Interesting idea. But suppose A argues that the newly coined term is misused and not a valid proposition, and that critique becomes the idea behind the term. For instance: it is an error to think Y (the newly coined term), because Y is not scientifically valid.

Does this contribute to the scientific literature?

I think scientists say they stand on the shoulders of others. Is the new altmetric merely affirming this belief?

DavidW: potential is a far cry from actuality. I do think efforts regarding altmetrics should move forward if for no other reason than to either verify or nullify the attempts.

Hi Charlotte,

You’re right in that altmetrics do let us measure some new and very interesting things. The question that must be asked though, is whether those things are important, and if so, to whom. There’s not an obvious or immediate translation between television ratings, used to draw advertisers to buy time, and the questions involved in whether a researcher’s work should be funded or their careers advanced through hiring and tenure.

These measurements of attention may be helpful and important for other aspects of understanding what’s going on in a field–if you’re looking to filter the research literature and stay caught up, then knowing what everyone else is reading can be powerful. But if you’re a research institute, do you hire someone/give them tenure based on how much attention they draw to themselves, or are you more interested in the quality of the work they do? If you’re a funding agency looking to cure a disease, how important is the attention the research draws as compared to how much closer it moves things towards that cure?

The question, David, is how do you measure that movement? Citation touches on it but alone it is a poor measure. The diffusion and growth of knowledge is a very complex process, one that is poorly understood. This is what is exciting about the new metrics. (Disclosure: I do research in this area.)

Fair enough, and I agree that citation, useful though it can be, is a flawed metric in many ways. Experiments to find new metrics, new ways of measuring the value of research, are always welcome and important. But as William Bruce Cameron once wrote (the quote is often wrongly attributed to Albert Einstein):

Not everything that counts can be counted, and not everything that can be counted counts.

It is important to look at new metrics with a critical eye, and in particular, understanding the context in which one is proposing to use them. Just because something is valuable in selling ads on television doesn’t automatically mean it is similarly valuable in the context of determining research funding.

I also am a bit concerned with approaches that seem almost the reverse of the scientific method. Clearly defining what one wants to know, then searching for methodologies that can prove fruitful seems perhaps a better approach than starting with a methodology because it can be done, and trying to reverse engineer meaning onto it.

Indeed, I think the current altmetrics hype should be severely criticized, especially the single value indexes. But “this does not work” tends to become “this cannot work,” with which I strongly disagree. As for the scientific method new data often comes first. The scientific question then becomes what does this tell us and that is where we are with altmetrics. The meaning is there to be found and I expect it will be profound. The science of scientific communication has much to learn.