This interview with economist Paula Stephan of Georgia State University and the National Bureau of Economic Research ran last April and accompanied my review of her book entitled, “How Economics Shapes Science.” Her book and this interview have since become touchpoints for many seeking to understand the incentives driving behavior and business around scientific and scholarly publishing.

Q: One of the themes in your book is the special economics of scholarly information — that while the information itself is non-rivalrous and non-excludable, priority and prestige are rivalrous and excludable, so scientists share reports of their results to transform economically non-viable information (for them) into economically viable prestige and priority. Is that a fair summation? What important behaviors emanate from these transformational events, good and bad?

A: I think that’s a fair summary. Knowledge has properties of what economists call a public good: once made public, people can’t be excluded from its use and it is not used up in the act of use (non-rivalrous). Economists have gone to considerable effort to show that the market does a poor job providing items with such characteristics. That’s where priority comes in: the only way that a scientist can establish priority of discovery is to make his (her) findings public. Or, stated differently, the only way to make it yours is to give it away. Priority “solves” the public good problem, providing a strong incentive for scientists to share their discoveries. The upside is that priority encourages the production and sharing of research. There are other positives — one relates to the fact that it is virtually impossible to reward people in science for effort since it’s virtually impossible to monitor scientists. The priority system solves this, rewarding people for achievement rather than effort. Priority also discourages shirking — knowing that multiple discoveries of the same finding are somewhat commonplace leads scientists to exert effort.

But there is a darker side: the priority system can discourage scientists from sharing materials and information. And institutions and the scientists who work there can become overly reliant on metrics of prestige rather than actually assessing the quality of the research. The current obsession with the h-index is but one example. At its darkest, priority can encourage fraud and misconduct.

Q: More authors are paying for publication today, often in so-called mega-journals, which mostly check for methodological rigor but not novelty or importance. Some authors also seem to be shrinking the minimal publishable unit to garner more publication events per research project or grant. Is this rational behavior given the current economic incentives in academic research?

A: LPUs (least publishable units) have been around for many years and are an unfortunate logical extension of a hiring/promotion/funding system that relies heavily on metrics for evaluation. The best way to counter the trend is for organizations charged with evaluation to limit the amount of what they review — requiring individuals, for example, to put forth their top five papers, or some such.

Pay for publication is driven in part by the importance of publication and the considerable amount of market power enjoyed by many of the companies that publish academic journals. In the current environment, it is rational for scientists to pay for publication, especially since the costs are often absorbed by a funding agency.

Q: A recent study that you coauthored showed that cash bonuses to authors led them to make poor and costly submission choices. What differentiates a good incentive from a poor incentive?

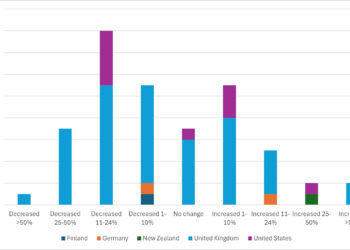

A: Right, we analyzed submissions to Science for the period 2000-2009 and found a tremendous increase in the number of submissions from countries that introduced the payment of cash bonuses for publishing in top journals but found no associated increase in the number of publications by scientists from these countries. However, we found that scientists residing in countries that introduced publication incentives tied to salary increases or career progression submitted more papers after the introduction of the incentive and had more papers published. Why? A plausible explanation is that these types of incentives encourage scientists to do better work, knowing that their research performance affects their career outcomes. Cash bonuses, on the other hand, are more like a lottery and encourage the purchase of multiple lottery tickets!

More generally, a good incentive provides benefits for scientists who make a positive contribution and imposes costs on activity that detracts from the system. A bad incentive provides benefits with minimal costs.

Q: You talk about the long-term productivity consequences arising from periods of economic downturn or uneven funding. How would you rate various governmental responses to the economic travails of the past few years? What implications do you see for research productivity over the coming years? Are younger scientists going to be affected more or less?

A: Wow! Lots of parts to this question! Funding for research comes from the government, industry, non-profits and institutions themselves. Even before the 2008 downturn, federal funding had been growing at a snail’s pace for the past few years and the budgets in constant dollars for some agencies, such as NIH, were flat or declining. Along came the recession, which provided a special challenge to state governments, many of which have responded by cutting their support for higher education and, indirectly, for research. The Federal government responded to the recession by creating the American Recovery and Reinvestment Act (ARRA), providing $21 billion of funding to agencies for research and infrastructure support, over a two-year period. ARRA definitely had a short-term positive effect on the research community and was undoubtedly especially helpful to young scientists who had no place to go: universities were not hiring; neither were many other places that typically hire scientists. By pumping a considerable amount of money into university research, ARRA provided the resources to extend postdoc appointments. But ARRA was short-term — even with creative accounting the funds will rapidly be used up, the economy is yet to fully recover and many universities are still not hiring. Thus, younger scientists have been particularly hard hit by this recession. The problem would not be so serious if there wasn’t a queue coming along behind this cohort, but there is! Thus scientists who were not able to get a position in 2009 are now competing with those coming of scientific age in 2010, 2011 and 2012. Not a pretty sight! Some will never recover.

Was ARRA a good thing? It was definitely beneficial in providing funds that could be used to bridge a lean period and support people who had few other alternatives. But the verdict is not yet in when it comes to research results. We will just have to wait and see if the return on short-term funded projects is there.

Q: Journals are sometimes criticized because there’s a propensity to publish so-called “positive” studies. Reading your book, it seems clear there are deeper economic incentives in research and academic funding leading to a propensity of positive findings. Can you talk about some of these pressures?

A: Sure! Most academic scientists can only do research if they have funding, either from a federal agency or a non-profit organization. For academics hired in soft money positions, it’s not just a question of whether they have support to do the research and maintain their lab. It’s also a question of whether they will continue to have a job and a paycheck. So funding is incredibly important to the academic enterprise. Furthermore, past research results play an important role in determining whether an individual is funded. Research producing “positive” results (and published in top journals) is what reviewers look for. There’s little to be gained in the peer review grant system if what one has discovered does not contribute, in a positive way, to the accumulation of knowledge. And there’s absolutely no role for “failed” studies, where the research simply does not pan out. Indeed, the pressure to be sure there’s not a failure down the road leads funding agencies to look for strong preliminary data before funding a research project. At NIGMS at NIH it was common to say “no crystal, no grant.”

Q: Prizes have become major sources of funding for science. What are their pros and cons?

A: The idea of encouraging research by offering what are called inducement prizes is not new. The British government, for example, created a prize in 1714 for a method to solve the longitude problem. More recently in 1996, the Ansari X Prize was established for the first private manned flight to the cusp of space. In 2006, the X Prize Foundation announced that it will pay $10 million “to the first team to rapidly, accurately and economically sequence 100 whole human genomes to an unprecedented level of accuracy.” There are also prizes for dogs and cats: the Michelson Prize in Reproductive Biology, for example, will be awarded to the first entity to provide a nonsurgical sterilant that is “safe, effective, and practical for use in cats and dogs.” Since 2009, prize fever has struck Washington. That year President Obama called on agencies to increase their use of prizes as part of his Strategy for American Innovation.

Prizes have much to recommend them. For example, they invite alternative approaches to a problem, not being committed to a specific methodology. Second, they are awarded only in instances of success; the incentive to exaggerate is eliminated. Third, prizes attract participation from groups and individuals who might otherwise not participate. But there are also some cons. First, prizes encourage the production of “multiples.” Second, they are not well suited for research that has unknown outcomes — the desired outcome must be known and carefully specified. Awarding agencies are also tempted to raise the bar after a solution is proposed (if one has any doubt, read Dava Sobel’s engaging account of the longitude prize!). From an economic point of view, there is also the problem of determining the size of the award. Ideally, one wants it to be sufficiently large to attract entrants, but not so large as to overcompensate the winners. But the greatest problem, I think, with using prizes as a way to encourage academic research is that funding is only awarded after the research is completed: entrants are on their own to find the funds needed to compete. This means that prizes are only suitable for stimulating academic research if the research complements research supported by other means or if partnerships can be built with industry.

Q: Your final chapter is called, “Can We Do Better?” What is the most important thing to get right for science to continue to thrive?

A: The most important thing to get right is incentives. Currently a considerable amount is wrong with incentives — both at the level of the scientist and at the level of the university. For example, incentives operate to create more scientists and engineers regardless of whether there are research jobs for them. Why? Graduate students make for cheap, clever research assistants. And they are temporary. Moreover, no one evaluates a scientist at grant renewal time on whether or not the students trained in the scientist’s lab have actually gotten research jobs. Don’t ask, don’t tell. By way of contrast, funding graduate students on training grants rather than as graduate research assistantships arguably leads to better outcomes. Not only do the students enjoy more freedom in choosing the lab they will work in, but student outcomes play a role in reviewing applications to renew the training grant.

Another example of incentives gone astray is the pressure, both for scientists and for universities, to get “bigger.” From scientists’ point of view, bigger is better. More funding, more papers, more citations, more trainees–this, despite evidence that big labs may not produce all that much more research. From the university’s perspective bigger is better in terms of research dollars. After all, research funding is one of the major criteria used by the prestigious Association of American Universities (AAU) in selecting members. The quest for research funds can lead universities to behave in what might be considered dysfunctional ways. By way of example, the University of Georgia’s decision to pursue a medical school appears to have been inspired, at least in part, by the desire to increase its NIH funding and thereby get a leg up on being invited to become an AAU institution.

The quest for research funds also leads universities to hire increasing numbers of faculty into soft money positions, shifting all of the risk to the faculty member: no funding, no job. This, in turn, leads researchers to be risk averse. This detracts from science: low-risk incremental research may yield results, but in order to realize substantial gains from research not everyone can be doing incremental research. It is essential to encourage some researchers to take up risky research agendas; the current U.S. system simply does not provide sufficient incentives to do that — even for those in “hard money positions” whose research proposals are evaluated on the basis of preliminary results. The quest for funds has also led many universities to build more research space in the biomedical sciences than can possibly be paid for or used efficiently unless funding for research grows substantially in the near future. I could go on, but you get the idea!

It’s easier, of course, to point out what’s wrong with incentives than to talk about how to fix them, but as a start I would make at least five changes:

- Limit the amount of salary that scientists can write off of grants, thereby discouraging the hiring of scientists on soft money positions.

- Require that departments post placement data for their graduates on the web. Require that faculty submit data concerning the placements of former graduate students (and postdocs) as part of their grant application. No reporting, no funding.

- Put more resources into training grants and fewer into graduate research assistantships.

- Encourage the creation of more research institutes, thereby separating at least some of the research performed in the non-profit sector from training. While effective training requires research, effective research does not require training. And abstinence is the most effective form of birth control!

- Decrease the importance of metrics (such as citation counts and h-indexes) in hiring and promotion decisions as well as in evaluating applicants for grants. NSF, I think, has this right: applicants can only list the five most relevant publications on the bio that accompanies their application and five “other significant” publications. Departments and universities should do the same.

Discussion

3 Thoughts on "Stick to Your Ribs: Interview with Paula Stephan — Economics, Science, and Doing Better"

Reblogged this on The Citation Culture and commented:

A good interview about what is wrong with the current incentives system in science and scholarship.

Interesting about incentives and rewards. I can agree with much of Paula’s statements and recommendations, but she’s got it wrong when it comes to incentives for publishing. There are models like the one currently in use by the Swedish government which gives incentives only for publications that receive recognition from the scientific community. Consequently, it is necessary to publish fewer but more interesting papers. Put more information and more important stuff into your articles and you will meet more attention, a fact often stressed by the Norwegian bibliometrician Per O Seglen. The Swedish model is described in papers which can be found at http://www.forskningspolitik.se.