The “early adopters” among us have been in meetings where our appreciation of technology and what it can do is dismissed or downplayed as “geek” or marginal in some way. It happens less and less, but even if the boundary is now navigated in silence, the swell of conceits about technology is still palpable. Computers aren’t print, there’s not enough tradition, they’re for young scientists or physicians.

In one of my talks, I point out that while the stereotype of technologists has been to portray them as “young, marginalized, and powerless,” it’s clear that technology users are now “middle-aged, central, and powerful.”

Yet, this bias against technology and what it can do continues to permeate the upper reaches of corporate decision-making and even the thinking of technologists themselves. Technology is still viewed as something “other,” a set of strange and opaque items foisted upon us just to make money, drawing us away from the age where our aspirations still reside.

As if there’s an alternative.

A new Kindle book, “Race Against The Machine: How the Digital Revolution is Accelerating Innovation, Driving Productivity, and Irreversibly Transforming Employment and the Economy,” outlines in blisteringly concise detail why there isn’t any choice. Information technology represents a new general purpose technology, like steam, electricity, or the internal combustion engine. General purpose technologies not only create their own industries, but change all other industries by virtue of being extensible and beneficial across the board.

Industries and employees that have been affected are almost too numerous to mention — travel (Kayak and kiosks), books (Kindle and Amazon), banking (ATMs and online bill pay), and so on. And we are probably only at the beginning of a long cycle of rapidly growing computer capabilities.

The authors relate the story (perhaps apocryphal, and credited to Ray Kurzweil) of the inventor of chess, who, when asked by the delighted king what he wanted as a reward, said that he’d like to have a grain of rice on the first square of the chess board, two on the second, four on the third, and to keep doubling the grains per square all the way to the last square. This seemed a small price to pay to the king, and all was fine until about the halfway point of the board, when it became clear that the doubling would give a pile of rice the size of Mt. Everest and make the inventor richer than the king. (The inventor was soon beheaded for his insouciance.)
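The arithmetic behind the legend is a geometric series. Square n holds 2^(n−1) grains, so the first n squares hold 2^n − 1 grains in total. Here is a short Python sketch; the function names are illustrative and do not come from the book.

```python
def grains_on_square(n: int) -> int:
    """Grains on square n (1-indexed): one grain, doubled on each later square."""
    return 2 ** (n - 1)


def grains_through_square(n: int) -> int:
    """Total grains on squares 1..n; the geometric series sums to 2**n - 1."""
    return 2 ** n - 1


# Compare the end of the first half of the board with the last square.
for square in (32, 64):
    print(f"Square {square}: {grains_on_square(square):,} grains; "
          f"running total {grains_through_square(square):,}")
```

At square 32, the running total is about 4.3 billion grains. By square 64, that one square holds 2^63 grains, roughly 9.2 quintillion, which is one more than all 63 earlier squares combined. That jump is what makes the second half of the board so dramatic.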

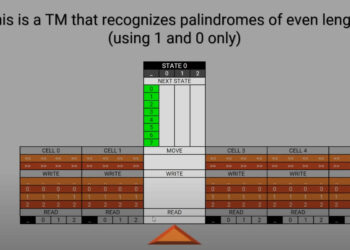

To apply the analogy, the authors take 1958 (the year the government first used the term “Information Technology” as a professional category) and Moore’s Law (computing power doubles every 18 months), and find that in 2006 we crossed the figurative halfway point of the same chess board when it comes to computing power. Since then, we’ve seen successful real-world tests of autonomous cars, Watson win at Jeopardy!, Siri on our iPhones, and so forth. Every 18 months is another doubling on the chess board of technology.
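The 2006 crossover follows from the two assumptions the post states: a 1958 starting point and one doubling every 18 months. The following is a minimal Python check. The constant and function names are illustrative.

```python
START_YEAR = 1958  # year "Information Technology" became a professional category, per the post
DEFAULT_DOUBLING_YEARS = 1.5  # Moore's Law as stated in the post: one doubling every 18 months


def year_after_doublings(k: int, period_years: float = DEFAULT_DOUBLING_YEARS) -> float:
    """Calendar year reached after k doublings, starting from START_YEAR."""
    return START_YEAR + k * period_years


# Square 1 corresponds to 1958; 32 doublings carry us past square 32,
# the last square of the first half of the 64-square board.
print(f"Second half begins (18-month doubling): {year_after_doublings(32):.0f}")
print(f"Second half begins (2-year doubling):   {year_after_doublings(32, 2.0):.0f}")
```

The date depends heavily on the assumed doubling period. Moore’s Law is also commonly stated as a doubling every two years, and under that reading the crossover moves to 2022.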

Tim O’Reilly recently talked about this from a different angle, noting that the Google autonomous vehicle of 2006 provided a slow and dicey ride — yet the autonomous vehicle fleet of 2010 drove thousands of miles. As the authors of “Race Against the Machine” note, the only accident this fleet suffered occurred when a human driver rear-ended one of the Google cars at a stop sign.

In less than 5 years, autonomous vehicles went from curiosities to the point where now states are beginning to draft sample regulations to allow them on roads.

Robotics is poised to make huge leaps as well, as this recent demonstration of Honda’s Asimo shows:

This is the power of doubling. But the power of general purpose information technology goes even further, through complementarity. As economists Timothy Bresnahan and Manuel Trajtenberg wrote:

Whole eras of technical progress and economic growth appear to be driven by . . . GPTs [which are] characterized by pervasiveness (they are used as inputs by many downstream sectors), inherent potential for technical improvements, and “innovational complementarities,” meaning that the productivity of R&D in downstream sectors increases as a consequence of innovation in the GPT. Thus, as GPTs improve they spread throughout the economy, bringing about generalized productivity gains.

One of the most significant consequences of these changes is that even higher-skilled jobs are being eliminated or chipped away as computers intrude quite naturally and capably.

As computers climb the ranks from calculator to artificial intelligence, jobs at various levels are lost or severely curtailed. The first signs of intrusion into our worlds were the disappearance of the mail, FedEx, or UPS guys. What used to be stacks of envelopes bulging with manuscripts became electrons flowing through tracking systems, and clicks sent the right manuscript to the right editor’s in-box. Artwork is now delivered, calibrated, sized, and placed electronically. And with the truly sophisticated systems, metadata keeps it all stored nicely in libraries, complete with logic around permissions, access levels, and rights.

Even in editorial ranks, you can feel the first gentle pressure of change as pre-processing routines check references, do initial styling, and introduce basic formatting. Full XML workflows push this even further. And if editorial decision-making is truly fair and unbiased, it’s certainly amenable to being replicated programmatically. Imagine a program not unlike IBM’s Watson that could check a manuscript submission for novelty (via CrossRef, iThenticate, and other APIs), statistical validity (mathematical processing), sufficient referencing (citation network analysis), author disclosures, and conclusions that flow from the hypothesis and data. A set of algorithms and processes like these could certainly make a reasonable first pass at filtering the flood of submissions.
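To make the idea concrete, here is a toy Python sketch of such a first-pass filter. Everything in it is hypothetical:

- The `Submission` fields stand in for signals that services like CrossRef or iThenticate, or a statistics or citation-analysis step, might supply.
- Each check is a deliberately crude placeholder.
- The thresholds are arbitrary.

It calls no real API.

```python
from dataclasses import dataclass, field
from typing import Callable


@dataclass
class Submission:
    """Hypothetical manuscript record; real signals would come from external services."""
    title: str
    similarity_score: float  # 0..1 overlap with prior work (stand-in for a plagiarism check)
    reported_p_values: list[float] = field(default_factory=list)
    reference_count: int = 0
    has_disclosures: bool = False


# Each check returns (passed, reason-if-failed). Thresholds are illustrative assumptions.
Check = Callable[[Submission], tuple[bool, str]]


def check_novelty(s: Submission) -> tuple[bool, str]:
    return s.similarity_score < 0.3, "text overlap with prior work"


def check_statistics(s: Submission) -> tuple[bool, str]:
    # Crude sanity test: a real tool would recompute statistics from the data.
    return all(0.0 <= p <= 1.0 for p in s.reported_p_values), "reported p-values outside [0, 1]"


def check_references(s: Submission) -> tuple[bool, str]:
    # A count is a stand-in for genuine citation network analysis.
    return s.reference_count >= 10, "too few references"


def check_disclosures(s: Submission) -> tuple[bool, str]:
    return s.has_disclosures, "missing author disclosures"


CHECKS: list[Check] = [check_novelty, check_statistics, check_references, check_disclosures]


def first_pass(s: Submission) -> list[str]:
    """Return the reasons a submission was flagged; an empty list sends it on to a human editor."""
    flags = []
    for check in CHECKS:
        passed, reason = check(s)
        if not passed:
            flags.append(reason)
    return flags


paper = Submission("Example", similarity_score=0.45, reported_p_values=[0.03, 1.2],
                   reference_count=25, has_disclosures=True)
print(first_pass(paper))
```

Keeping the checks as a plain list of functions lets each one be swapped for a more serious implementation without touching the filter itself. The output is a set of flags for an editor, not an accept/reject decision.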

It’s worth noting here that a computer program (SCIgen) has beaten human editors multiple times, generating realistic-appearing but nonsensical scientific abstracts and even full papers, one of which was part of a notorious prank here.

I can actually imagine a computer doing a better (faster, more thorough) job at some seemingly complex editorial tasks, since it would be unbiased and able to process more inputs more quickly. It’s really just a matter of applying technology that already exists to a different task. As William Gibson famously said,

The future is already here — it’s just not very evenly distributed.

The lesson of “Race Against the Machine” strikes me as, “Don’t stand still.” Technologists need a stationary target. By advancing what it means to create knowledge, we win in two ways — we push ourselves to do better, and we make it harder to catch up with what we’re doing.

However, I doubt we can double our capabilities as quickly as . . . [sentence to be completed when computer editor arrives.]

Discussion

31 Thoughts on "Is Anyone Immune? 'Race Against the Machine' and Today's General Purpose Technologies"

Have you considered what would happen if machines could do everything that humans could do? What if everything that humans needed could be provided by machines without human effort?

Would that be the golden age where humans were released from spending their lives in order to live, and could spend their lives doing … whatever it is they are living for?

Unfortunately, I think under our current economic system such an invention would be catastrophic. A few people would own the machines that could make everything (but would have no customers), and everyone else would be destitute (having no job).

Is it possible that our current malaise is because we are, in certain circumstances, closing in on that situation? A post or two ago mentioned 28K Google employees doing umpteen billion searches — I have a sneaky feeling the employees don’t do any searches at all. The searches are done by machines. And if the only thing that Google did (or ever planned to do) was searches, they wouldn’t need 28K employees — maybe fewer than 28. The human effort required to perform a search is as close to zero as you are likely to get. So is the cost of creating a new copy of an e-book. Or even the cost of a new copy of a processor chip, or sending a text message or watching a movie.

We are surrounded by technology with near-zero marginal unit cost, and by an economic system that is “designed” for manufacturing widgets, where the principal cost is the marginal unit cost.
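The contrast can be illustrated with average cost per unit. Average cost is the fixed cost spread over all units, plus the marginal cost of each unit. The figures below are invented purely for illustration: a $100,000 fixed cost for both products, $5.00 per widget, and one cent per e-book copy.

```python
def average_unit_cost(fixed_cost: float, marginal_cost: float, units: int) -> float:
    """Fixed cost amortized over all units, plus the per-unit marginal cost."""
    return fixed_cost / units + marginal_cost


# Hypothetical numbers: identical fixed costs, very different marginal costs.
for units in (1_000, 1_000_000):
    widget = average_unit_cost(100_000, 5.00, units)
    ebook = average_unit_cost(100_000, 0.01, units)
    print(f"{units:>9,} units: widget ${widget:.2f}, e-book ${ebook:.2f}")
```

However many units are sold, the widget’s average cost never drops below its $5.00 marginal cost. The e-book’s average cost keeps falling toward zero, which is the mismatch the comment describes.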

I tend to agree. The authors of “Race Against the Machine,” when interviewed on NPR, were pressed to see a bright side to this, especially given our current climate of economic exploitation and un/underemployment. They really struggled, but noted the need they felt to shine a light on it nonetheless. That’s reasonable — at least by seeing it coming, we might start to plan.

Their answer was that perhaps we’ll find value in the arts, in pushing ourselves physically and mentally, and in pursuing things that aren’t built around doing repetitive tasks. It may be jarring, but isn’t that all part of the same human story we’ve been cobbling together for the past few thousand years? Discover fire, contain fire, put fire in my pocket.

Or we could all float around on chairs like in WALL-E.

I like the old IBM slogan that machines should work while people think. Google’s R&D budget is several billions of dollars a year, mostly for humans I think. More generally we have transitioned to “cognitive production systems” whose product is thought. Stand downtown in a city and one is surrounded by towers full of people whose hourly product is thought. Think about it, ha ha. Then too, people blog.

This book by my college classmate and former editorial colleague at Princeton University Press (where he was science editor) is still relevant to the questions discussed here: http://www.amazon.com/Why-Things-Bite-Back-Consequences/dp/0679747567. Indeed, at my recommendation the CCC has recently named Tenner to its board of directors (on which I continue to serve) as someone who is keenly attuned to how technology can “bite back.”

The Rajah’s Rice: A Mathematical Folktale from India conveys the story of rice on a chessboard.

Kent, I find myself agreeing with what you say but at the same time wondering whether the main point has been missed. I have always been a technology skeptic, not because I doubted its ability to change things and make human effort more efficient (the evidence is conclusive; it does).

I am a skeptic because I have always found the promise of technology to be greater than its reality. For my entire life, technophiles have told me how technology can improve my life. I can do more work faster with less effort, technology frees me from drudgery, I can more easily communicate with friends, family and colleagues – and all of this for less money. All of this is true; technology has changed my life in profound ways. But I have a question: is my life better for it? Is it, really?

If technology has improved our lives so much, why are Athens and Rome burning? Why are Wall St., Oakland, and St Paul’s occupied? Why did we have the financial meltdown in the first place (after all, sophisticated computer systems and algorithms were used to eliminate risk and therefore prevent future meltdowns, weren’t they?)? Why are the Tea Partiers so angry? Why do people like Sarah Palin strike a chord with the average American? Where is the technological utopia we were promised? Where is all the free time I was promised by the technophiles? My life isn’t easier; just the opposite, I work more than ever. I am never disconnected, and I am fairly certain that is not a good thing. I am connected all the time because my colleagues and clients expect me to be.

I sometimes find myself longing for the life of our founding fathers. It used to take three months to cross the Atlantic Ocean. Ben Franklin and Thomas Jefferson (monumentally important men of their era) were in effect incommunicado for six months as they travelled back and forth across the Atlantic. The world seemed to continue on without them. If I am missing for even 24 hours I get frantic emails and voice messages from one and all. Surely what I do isn’t nearly as important as the activities of Franklin and Jefferson. They were removed from the grid for months at a time, yet their colleagues seemed to get on just fine without them.

I have always maintained that the problem with the net is that it was invented and promoted by the Americans. It plays to all our national idiosyncrasies; we Americans want more of everything faster. I can’t help wondering how much better modern life would be if it had been the French who were masters of the net. “Would you like a brioche and a cafe au lait while you wait for your internet connection to be put through?” Or how about this message: “Sorry, your search query will be put through once we return from the Riviera on September 1; in the meantime we are sending over a bottle of Bordeaux to keep you occupied while you wait…” Ah, now that is technological advancement!

Mark, if you work more than ever it is probably because you want to. Or are you calling leisure work? In Franklin’s day 90+% of Americans worked the land, so chances are you would have been neither. You would have been a subsistence farmer, consumed by physical labor and hoping not to die from starvation or infection. What we today call work would have been seen as leisure then.

You are more than a skeptic if you don’t accept that technology has improved your life, and the lives of people in general. People at or around the poverty line today have lifestyles that rival those of Jefferson in his day — access to information, calories, travel, health, shelter. Plus, we often forget that technology isn’t just IT — ships, airplanes, vaccines, transport logistics, roads, building materials, energy grids, and so forth. IT is impressive because it generally enables us to make better ships, airplanes, transport logistics, roads, building materials, energy grids, and much more, and get them in place faster. This all culminates in things we take for granted — because of technology enabling transport, cultivation, weather prediction, market projections, supply chain logistics, and much more, you can get a decent Cabernet for less than $20 per bottle in almost any US town. Pretty awesome.

Athens and Rome are having economic problems, but let’s keep things in perspective. Look at how moderate and considered and information-filled these problems are now. We all know a lot about their causes, their effects, and the possible remedies. Yes, there are small skirmishes here and there, but there isn’t and probably won’t be a worldwide conflagration based on miscommunication, communication latencies during which hostilities can peak, smoldering nationalistic hostilities, etc. Poland won’t be invaded by surprise, an autocrat won’t arise in an insulated but pivotal central European nation, etc. As a preventative on violence and terrorism, the rise of communications technologies isn’t to be underestimated. If a terrorist strikes in the US, UK, Spain, or Indonesia, it’s known worldwide on the same day, and 99.999999% of us condemn them and renew our vows to peace. Because of the communication speed and quality, getting over these economic troubles may feel like a rumble strip rather than a car wreck. As to how we got into this, no technology can transform modern neoconservatives and austerity nuts into competent leaders.

Having anachronistic, reactionary nostalgia based on an idealized view of the past is understandable. It happens to us all. But there are two problems with it — the past wasn’t so great, or else there wouldn’t have been such a panic to escape it among those who were in it (cities reeking of horse shit, syphilis and gonorrhea everywhere, stillbirth and maternal death common, air pollution, cold nights, cold days, stench, cavities, poor vision, infection); and today is imperfect but getting better despite the bumps in the road. You couldn’t pay me enough to return to the days of Jefferson — head lice, itchy clothes, people with bad teeth, watching 50% of my children die before their 3rd birthdays, and a limited selection of wines. I mean, really.

Technology also of course brings us weapons of mass destruction. It is always a double-edged sword.

Yes, general purpose IT will clearly lead to mass unemployment, just like electricity, steam technology, and the internal combustion engine did. Oh, wait…

As economist Brad DeLong is fond of pointing out, since the dawn of civilization technological advances have increased human productivity gazillion-fold. We can now do unimaginably more with each unit of time, labor, and resources than could the Babylonians. And yet, even in the currently-terrible economic climate, the US unemployment rate is around 9%, not 90%.

General purpose IT has dramatically changed what jobs humans do and how they do them, and will continue to do so. But it has not and will not lead to mass unemployment.

A clear difference is that electricity, steam, and the internal combustion engine increased productivity while also increasing the number of jobs, and they moved at a relatively linear pace. IT is accelerating the pace of change, increasing productivity while displacing workers. It is a bit different.

I used to teach philosophy of technology and these are old, familiar issues. Successful technologies usually follow an “S” curve, which includes acceleration. So it is far from clear that IT acceleration is any different. IT is part of the 50-year-old computer revolution, as well as the electricity revolution, for that matter. More narrowly, it is primarily a communication revolution, so the productivity increase is cognitive. So are the jobs, and they are plentiful and growing.

Productivity is defined as output/employment. Which implies that employment equals output/productivity. Which means you can’t know what effect an increase in productivity due to technological change will have on employment unless you know what effect it has on output. For instance, if a technology change reduces a firm’s costs to produce its product by X%, and if the firm can take advantage of this by reducing its selling price, thereby increasing sales by more than X%, the firm will need to hire more workers. This is a basic microeconomic point; the macroeconomics would be more complicated because the determinants of output for the economy as a whole are more complicated. But the basic economic point remains (it’s been the subject of much study by economists), and the fact that a technology is “general purpose” doesn’t change it. And to say that IT is different because it increases productivity while displacing workers is to beg the question. What’s needed is an explanation for why IT, unlike every other technology ever developed in human history, will in the long run permanently increase productivity without at least a proportionate increase in output.
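The identity in this comment can be checked with a little arithmetic. The sketch below treats productivity as output per worker, that is, labor productivity only, which is exactly the simplification the reply that follows disputes. The growth rates are made up for illustration.

```python
def employment_change(productivity_gain: float, output_gain: float) -> float:
    """Fractional change in employment, from employment = output / productivity,
    when productivity and output grow by the given fractions."""
    return (1 + output_gain) / (1 + productivity_gain) - 1


# Illustrative: productivity rises 20% while output grows by less, the same, or more.
for output_gain in (0.10, 0.20, 0.30):
    change = employment_change(0.20, output_gain)
    print(f"output {output_gain:+.0%}: employment {change:+.1%}")
```

Under this simplification, employment rises only when output grows faster than productivity, which is the comment’s point.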

Productivity is output/input. Employment is a form of input, but not the only input. So your inference isn’t complete (i.e., employment does not equal output/productivity). A farm that produces 100 bushels in one year and 150 bushels the next year with the same number of farmers working to produce those bushels is 50% more productive, but no labor was added.

Also, your example about pricing presumes a certain amount of elasticity, which may or may not be true. So I don’t accept either of your points.
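Whether the price cut in the earlier example creates jobs does turn on elasticity. The sketch below rests on three simplifying assumptions that neither comment states:

- Demand has constant elasticity.
- Labor is the only input.
- The cost saving from higher productivity is passed through fully to price.

```python
def quantity_ratio(price_cut: float, elasticity: float) -> float:
    """New/old quantity under constant-elasticity demand Q = A * P**(-elasticity)."""
    return (1 - price_cut) ** (-elasticity)


def employment_ratio(price_cut: float, elasticity: float) -> float:
    """New/old employment when a productivity gain cuts unit labor cost by price_cut,
    the saving is passed on fully in price, and labor is the only input (all assumptions)."""
    productivity_ratio = 1 / (1 - price_cut)  # same output now needs (1 - price_cut) of the labor
    return quantity_ratio(price_cut, elasticity) / productivity_ratio


for e in (0.5, 1.0, 2.0):
    change = employment_ratio(0.10, e) - 1
    print(f"elasticity {e}: employment {change:+.1%}")
```

Under these assumptions, the employment ratio reduces to (1 − cut)^(1 − elasticity):

- Elastic demand (above 1) adds jobs.
- Inelastic demand (below 1) sheds them.
- Unit elasticity leaves employment unchanged.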

As to “why IT, unlike every other technology ever developed in human history,” will reduce the need for human labor — I think you’re missing a fundamental point. IT is a general purpose technology. We’ve invented them before. They’ve all reduced the need for human labor. The challenge is that while engines made it so that, for instance, a farmer could effectively farm 1,000 acres rather than 10 acres, there was room for his kids to move up into white collar work by going to college or becoming botanists and so forth. IT is different because it has the potential to intrude at all levels, and we haven’t quite figured out where we’ll fit when, say, an editorial or academic or research algorithm or model might be able to produce a better journal, a better syllabus, or a better research project than we can.

My point wasn’t that life was better in 1790; it wasn’t. Technology has improved the lives of everyone on the planet. That is undeniable; I think I stated that in my original posting. However, I agree with Sandy: in general life has improved, but technology is a double-edged sword.

The past thirty years have not brought benefits for everyone. In fact, if you review the statistics, the majority of Americans have merely treaded water since 1980 (economically speaking, incomes have remained flat for thirty years). The bottom 10% have actually fallen behind. David and Kent, you sing technologies praises because you are part of the elite (as I am) against whom the Tea Partiers rage. The past thirty years have been good to us. Who can deny that? But Rome and Athens burn and Wall St remains occupied because that statement is not true for everyone. The steel workers of Pittsburgh and the auto workers of 1980 are in fact NOT better off today. Yes, they may have flat screen TVs and iPhones and every other gadget modern technology provides, but that is thin gruel. They also earn considerably less than they did 30 years ago (in inflation adjusted terms). Medicine has advanced greatly in the past thirty years, but the system is no longer available to vast segments of the American population. Athens and Rome burn because those people marching in the streets have lost hope. Technology doesn’t offer them a bright future – it has taken their jobs and their dreams and left them in the dust. Can you not hear their cries for justice? Kent, as you pointed out, those very iPhones and flat screen TVs (owned by the American laborer) allow workers in Mumbai to compete more effectively with that self-same American worker. That is good news for the workers of Mumbai but not so good for workers in New York, Rome and Athens. It is not an issue of technology destroying jobs (the concept of work being finite is a fallacy). It is an issue of competitive advantage and wages. The average Western worker earns less money today and has fewer benefits because technology has increased competition.

As in all advances, there are winners and losers – that is my one and only point. Kent, as I stated in my original post, I agree with you. Everything you point out is true. WE are much better off. But we are all part of the elite aren’t we? The problem with technology (to-date), if you are a worker in the West, is that the benefits of this advance have accrued to laborers in Asia and to owners of capital. I am not nostalgic for the past Kent. I am a realist. Way back in the early-90s I figured out that technology would accrue to the benefit of capital and the detriment of labor (in the West). As a Western laborer I determined then and there to alter my income stream from labor (wages) to capital (investments) and I liberally use the technology to do it. As a consequence, I personally have benefited greatly from all the technological advances. I can sit here in my home in Hawaii and debate in real time with you and David in England (Old and New). I can direct the movement of my capital on exchanges in London, New York, Sydney and Toronto with the mere pressing of a button. Except for the loss of some freedom, technology has brought me nothing but astounding benefits.

Kent, just one final note, I have lived in China. I have seen real poverty. I have my doubts about the lifestyles of the poor today rivaling the lifestyles of the elite in 1790 (or any other period for that matter).

Fun debate guys, I have thoroughly enjoyed this little tete a tete. Now if I could only figure out how to get this damn computer to serve up a brioche and cafe au lait…

The predicament you outline is why the authors wrote their book — GDP has risen, but employment hasn’t, undermining a basic economic principle. Our tax code hasn’t helped; as the gap between rich and poor has widened, the tax code has only exacerbated it. Speculation ran rampant, regulation was lax or corrupt, and political leadership was inadequate. The poverty in China can be atrocious, but technology is also relieving it (although I hope the mercilessness often seen with early capitalism doesn’t continue).

You have it wrong when you say I “sing technologies [sic] praises.” This post was cautionary, clearly. That said, it’s also about being realistic — is there really a choice? When have we put a genie back in the bottle? Never. Ever. Ever. So, instead of trying to reconjure the past, the trick is to move forward. In fact, those whose plights you mention (the steel workers in the Midwest, for instance) were disrupted by better ways to make steel. There’s more demand than ever for steel — why aren’t they making it? Because they didn’t adapt their technology. It wasn’t because steel wasn’t in demand. It’s because they failed to adapt.

I personally wish the US tax code would revert to higher taxes on the wealthy so that government could reignite the economy, get people back to work on things machines can’t do yet (build roads, reinforce bridges, teach kids, fight fires, police our communities). The follow-on to this is that if it’s easier for the elite to make their money, there’s even more reason to tax it more heavily. After all, the only way the elite makes its money is by relying on the safety provided by police, the educated populace provided by teachers, the infrastructure created by government, and the peace of mind a viable firefighting force provides.

I agree with you entirely, Kent. You are right, more steel is produced, but not by steel workers in Pittsburgh. Genies cannot be put back in bottles, and technology is relieving poverty in China (partly at the expense of the American worker).

As you say, we must adapt or fail. And this is where technology fails us: it quickens the pace of change. Evolution teaches us that not all adapt in a timely manner. But we must adapt; there is no choice, there is no going back (even if it were desirable). Except for the opportunity for moments of solitude, I don’t see much benefit in going back – I sure would like those three months on a ship from time to time though ;). My point is, not everyone will or can adapt. The quickening of technological change only exacerbates the problem.

This isn’t a debate about the past. It is a debate about the future. Who will prove more accurate: Roddenberry or Huxley? Are we entering the dystopia of a “Brave New World,” or are we “boldly going where no one has gone before”? I think the jury is still out. The answer will not be known until 2050 (roughly the year when the population explosion bubble will have worked itself out in most of the world).

What do you folks think about cloning humans? Or the development of robots that can take over just about any type of work humans do now? Would the world be better off if no one had to do any work at all and people just could pursue whatever leisure activities they liked?

Work defines a great deal of who we are. A life without work isn’t rewarding. We need to work or contribute in a way that delivers a modicum of respectability and importance.

Regarding pain and productivity, one of the leading principles of my consulting work is that technological change is usually disruptive and therefore it involves a short term productivity loss. Technologies include systems of behavior and those systems cannot be changed effortlessly. This is true of small and large organizations, including whole human societies. But we do it because things promise to be better, which is why we call it progress. The trick is to first, choose wisely, then manage the disruption.

We are of course undergoing a disruptive transition right now in publishing, and what makes it worse for productivity is that a publisher has to keep the old print system going while adding on all the new tasks and procedures called for by the new ebook technology. This burden makes it doubly difficult to survive financially during the transition.

This sounds like a typical challenge in manufacturing, one that is indeed risky financially. It is basically adding a new product production and sales line, while maintaining an existing line as well, where sales of the new product may compete with the old one. Cash flow may be a serious challenge, so some form of financing is likely necessary. And as always with new products, the gamble may not pay. So this is not a step to be taken lightly, although I suspect some have rather wandered into it.

Note too that shutting the old line has its own risks, if it is a significant source of revenue. Plus the old and new line share some resources. But starting up the new line, in a new market, will be a big drain on the cognitive resources of the organization. That is the part that I tend to look at, the cognitive resource aspects. It is what causes the productivity drop, and the trauma. Confusion is the price of progress, but it can be managed. I call it “chaos management.”

As one who got that process started at a university press (but retired before the transition was completed), I can appreciate your description of it as “chaos management.” In our case, it was partly managed by teaming up with the library on campus, becoming an administrative unit of it and taking advantage of resources and skills (like book digitization, data management, and preservation) that our own staff lacked. So, part of success may lie in organizational creativity also.

Kent and David, not to get too theological here, but I was struck by the theological implications of your comments on Sandy’s post. The descriptions of Heaven, Nirvana, and Valhalla speak widely of humanity’s liberation from its travails (the root of this English word is the French for “to work”). Kent seems to be arguing that existence without work is unrewarding, while David states that sentient robots would allow us to “escape the fetters of our biology,” thus setting us free. Wouldn’t being unfettered from our biology be a working definition of death? Is freedom a working definition of death?

This brings us back to the age old question that has plagued humanity from the beginning of time; what is the meaning of life and what comes once we die? Has technological advance brought us to the point where we need to rethink the meaning of life and death itself? Or even rethink what it means to be human. In any case, the implications are far larger than those outlined in the book that started this conversation.

Mark, as I mentioned above, these are frequently discussed issues in Philosophy of Technology, a small but well established discipline. See http://plato.stanford.edu/entries/technology/ and http://www.spt.org/. I developed and taught this stuff in the 1970s, with a focus on technological revolutions. But I was at Carnegie Mellon, so artificial intelligence was a major theme. (I like to say I work on the intelligence side of AI.)

I do not see artificial systems developing cognitive abilities any time soon, perhaps never, because we simply do not understand cognition, but it is certainly an abstract possibility. So yes it raises the question what it means to be human. If you get a heart transplant are you the same person? We now say yes. Same for an artificial limb or organ? Yes again. How about a partial brain transplant? Yes again? Suppose the brain transplant uses an artificial device? How about a totally artificial brain transplant? How about if we simply transfer the cognitive contents of your brain into an artificial system? And so it goes. If we build and train things that think like us, why would they not be humans?

Science and technology continually force us to rethink our concepts, because concepts are built on the world we live in. When the world changes our concepts struggle to keep up. This is why technological revolutions are full of confusion, because they get ahead of our language. These are real world, everyday problems. But if the concepts are deep enough the issues are philosophical.

Here is one book in that field I would recommend, having been its sponsoring editor: http://www.psupress.org/books/titles/0-271-02539-5.html

That’s 9,223,372,036,854,775,808 (9 quintillion) grains of rice on the last square alone, in case anyone was wondering….