A recent paper by John Ioannidis and colleagues is generating some buzz because it claims to have found that 1% of all scientists form what the authors label “the publishing core in the scientific workforce.” These are the scientists who publish consecutively, year after year.

According to their calculations, this 1% amounts to about 151,000 scientists. The putative downside of this concentration is twofold: a lack of opportunity for the vast majority of working scientists, whose contributions are sporadic or drowned out, and a literature dominated by a small group of scientific researchers and hypotheses. The authors also suggest that the research system “may be exploiting the work of millions of young scientists.”

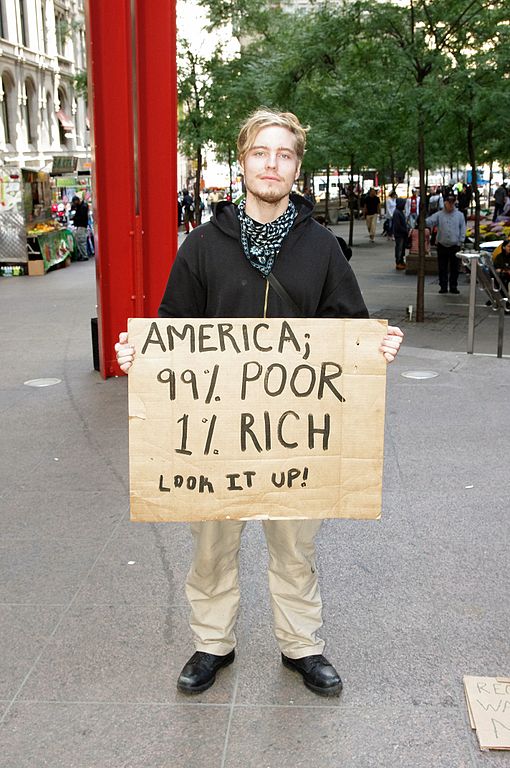

However intriguing the usage of the politically charged term “1%” might be, it’s important to look at the quality of the study before accepting the premise and its downstream arguments. It’s also worth contemplating whether the finding, if true, is unexpected or important.

The use of the term “1%” suggests a strawman of a sort, appropriating for sensationalistic purposes a meme based on inequity and greed. Every information medium skews toward high producers: fiction authors, singers, journalists, record producers, photographers, directors, actors, data experts, you name it. Is it a bad thing that much of published sports photography comes from 1% of all professional photographers?

Scientific publishing seems less skewed, but it clearly must be skewed, as it is a media business with constraints on time, talent, and — most importantly for scientists — funding. A small proportion of people are able to work at powerhouse institutions, while only a small proportion of these do a great job of running their labs and securing grants, planning experiments and working with postdocs, and so forth. These gifted researchers produce a lot, or help to produce a lot, and get their names on a higher proportion of papers. But the first question is whether this can be measured.

The authors’ approaches generally left me scratching my head about whether their measurements can possibly be accurate.

First, there is a huge problem with author disambiguation that any study of this type faces. Is the “John Smith” who works in economics in England reliably kept separate from the “J. Smith” who publishes in microbiology and hails from Australia?

To form the basis of their work, Ioannidis and his colleagues used the entire Scopus database in XML to analyze for unique authorship. Looking for what they call “uninterrupted, continuous presence (UCP)” in the literature, they claim to have identified authors who published every year during the period 1996-2011.

In Scopus, there are more than 15 million author identifiers. Whether each identifier is a unique author is a question the authors don’t address, in this or another paper they’ve written using the same database. By the end of the paper, the authors have made the leap, however, writing, “over 15 million publishing scientists” in their discussion and equating individual records with individual authors in table headings and so forth.

Other data sources show far fewer active scientists conducting research. World Bank data show about 8.9 million participants in research and development worldwide. If the Scopus identifiers are not disambiguated accurately (either splitting one person into several records or merging several people into one), the discrepancy might throw estimates off. For instance, if the World Bank figure were the denominator, the percentage of UCP authors would rise from about 1% to roughly 1.7%. It’s not huge on a percentage basis, but it’s nearly double the estimate with just this one number changing. One percent becomes nearly 2%, and the meme of social injustice falls by the wayside.
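To make that sensitivity concrete, here is a minimal sketch of the arithmetic. It assumes the rounded figures quoted in this post (about 151,000 UCP authors, 15 million Scopus identifiers, 8.9 million R&D participants) can be treated as exact, and it ignores the fact that the two denominators measure different populations over different time windows. The function and constant names are illustrative only.

```python
# Rounded figures as quoted in the post; treating them as exact is an assumption.
UCP_AUTHORS = 151_000
SCOPUS_IDENTIFIERS = 15_000_000
WORLD_BANK_RD_PARTICIPANTS = 8_900_000


def ucp_share(ucp_authors: int, population: int) -> float:
    """Return UCP authors as a percentage of the chosen population."""
    return 100.0 * ucp_authors / population


scopus_share = ucp_share(UCP_AUTHORS, SCOPUS_IDENTIFIERS)
world_bank_share = ucp_share(UCP_AUTHORS, WORLD_BANK_RD_PARTICIPANTS)

print(f"Scopus denominator:     {scopus_share:.2f}%")
print(f"World Bank denominator: {world_bank_share:.2f}%")
print(f"Ratio between the two:  {world_bank_share / scopus_share:.2f}x")
```

Swapping only the denominator moves the headline figure by a factor of about 1.7, which is the point: the “1%” label depends heavily on how many scientists you believe exist.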

This also presumes that the population described by author identifiers is a stable one of 15 million over the 15-year period studied, which it clearly is not — for two reasons. First, there have been rapid increases in publications from Asia and other regions in that timespan, on top of more scientists coming from US and European centers. There are simply more publishing scientists now than in 1996. Second, the definition of “author” has evolved in many fields during the 15-year timeframe covered by the dataset. In many fields, what would have earned you authorship in 2011 would have amounted to an acknowledgement footnote or no mention at all in 1996. Scopus properly reflects what journal articles assert as authorship. But when this changes, Scopus changes. The stability the authors assume in the dataset doesn’t actually exist.

Scopus captures every author listed in an author list, but for collaboration groups, it only captures the group. This is another blind spot the authors fail to account for in their study. Therefore, making the leap from “identifier” to “individual” is a mistake. Some identifiers are groups, and when publishing as part of a group (something that has become more common in the 15 years covered), individuals aren’t given identifiers.

The issue of accuracy has some more straightforward concerns. In a presentation made last year, Paul Albert at Weill Cornell Medical College found that Scopus disambiguation is prone to making more records than there are people — a form of error called “splitting.” In his sample of more than 1,000 records, more than 700 suffered from this type of error. If you click on the video I’ve linked to, the relevant portion is at approximately 10:50. Albert estimates that Scopus has a 31% precision for finding the right person by name.

The authors made what strikes me as a rather feeble attempt to check the accuracy of their disambiguation — they used a random sample of 20 published authors’ names and checked their publication histories. They found that all 20 sampled identifiers reflected a specific author who had at least one paper published in each calendar year. Yet, in another place in the paper, they state that what they found in this sampling “had no major impact” on the results. The insertion of the word “major” is interesting as is the use of the word “reflected” instead of “corresponded,” especially given the large assumption that author identifiers equate 100% to individual authors. I’m not sure they had a completely clean fetch based on the slightly unclear wording, and testing for 20 out of 15 million seems less than satisfactory. Why not 100? Why not 1,000?
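How little a clean sample of 20 can tell you is easy to sketch. Assuming the 20 records were a simple random sample and that all 20 were error-free (both are my assumptions; the paper's wording leaves this unclear), the largest error rate still consistent with that result at 95% confidence solves (1 - p)^n = 0.05. The function name below is hypothetical.

```python
def upper_error_bound(n_clean: int, confidence: float = 0.95) -> float:
    """One-sided upper bound on the true error rate after observing
    n_clean error-free records in a simple random sample.

    Solves (1 - p) ** n_clean = 1 - confidence for p.
    """
    return 1.0 - (1.0 - confidence) ** (1.0 / n_clean)


for n in (20, 100, 1000):
    bound = upper_error_bound(n)
    print(f"{n:>5} clean records: error rate could still be up to {bound:.1%}")
```

Under these assumptions, 20 clean records cannot rule out a disambiguation error rate of nearly 14%. A sample of 100 would tighten that to about 3%, and 1,000 to about 0.3%.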

As an aside, in a paper that is so reliant on disambiguation, the authors themselves make a mistake in how they reference one of their own papers, getting the title wrong (reference 12). Accuracy is elusive for us all.

Then we get to the 1% of UCP authors the paper claims to have identified. It’s an interesting number the authors arrive at — approximately 151,000 names. Why is this interesting? Well, according to the US Census Bureau, there are 151,671 unique last names in the United States. The United States not only provides a high percentage of published papers, but as a culture it reflects a nice mix of European, Asian, and other advanced societies with productive scientists. The similarity of the numbers makes me wonder all the more about the data — that is, did the authors simply reverse-engineer Census Bureau findings? Last name is, after all, the easiest thing to disambiguate.

The second issue with the paper is that Scopus covers far more than journals. It includes books, conference papers, and patents. Within journals, it includes most types of editorial material — scientific papers, reviews, editorials, letters to the editor, and so forth. Many authors who publish papers might the next year be invited to write an editorial, a book review, or a review article. Many authors are replying to letters to the editor in the following year. The authors did not differentiate between new research and other editorial material in their study. UCP may simply relate to corresponding authorship — that is, the authors who reply to letters and deal with correspondence.

The authors state that UCP scientists were, according to their analysis, involved with 41.7% of the 25,805,462 published items indexed in Scopus. That’s 10,760,878 items divided by 150,608 UCP authors, or 71 items per author over the 15 years, which boils down to nearly five items per year. If this were to include book chapters, patent applications, conference proceedings, and all types of editorial matter, it’s plausible. For a busy author, it’s clearly doable. After all, according to Google Scholar, the most recognizable author on this paper (Ioannidis) has been a co-author on 58 papers just this year alone.
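The per-author arithmetic above can be reproduced in a few lines. This is a rough sketch using only the figures quoted in this post; it treats every item as belonging to a single UCP author, so items shared by several UCP authors are counted once and the result is better read as a floor than as a precise mean. The variable names are illustrative.

```python
# Figures as quoted in the post.
TOTAL_ITEMS = 25_805_462   # items indexed in Scopus
UCP_SHARE = 0.417          # share of items involving a UCP author
UCP_AUTHORS = 150_608
YEARS = 15                 # the post's figure; 1996-2011 inclusive is 16 calendar years

ucp_items = round(TOTAL_ITEMS * UCP_SHARE)
items_per_author = ucp_items / UCP_AUTHORS
items_per_author_per_year = items_per_author / YEARS

print(f"Items involving UCP authors: {ucp_items:,}")
print(f"Items per UCP author:        {items_per_author:.1f}")
print(f"Items per author per year:   {items_per_author_per_year:.2f}")
```

Using 16 years instead of 15 lowers the annual figure slightly, to about four and a half items per year, which does not change the conclusion.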

A third issue is timing. The authors used the calendar to define each year, which seems reasonable but may not capture rolling 12-month periods accurately enough. For instance, if I published a paper in January 2009 and another in December 2010, I’d be counted as having published in consecutive years, even though I’d been given 23 months to do so. However, if I published in February 2009 and then in January 2011, I’d have published just as frequently but be counted as non-consecutive. There is no indication in the paper how often this is the case. The authors did create groups they call “Skip-1” (if an author didn’t publish in one year in the set but otherwise would have been a UCP author) and “Skip” (if an author skipped any years and then resumed in a subsequent year, but would not have otherwise ever qualified as a UCP author). These groupings still don’t address timing issues like the one outlined above. Counting the months between publication events would have generated more regular data.
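The two hypothetical publication histories above can be checked with a short sketch. It is not the authors' method; it simply contrasts a calendar-year test with a count of months between publications. The function names are mine, and the day of the month is an arbitrary placeholder.

```python
from datetime import date


def months_between(first: date, second: date) -> int:
    """Whole calendar months from one publication to the next."""
    return (second.year - first.year) * 12 + (second.month - first.month)


def publishes_every_calendar_year(pub_dates: list[date]) -> bool:
    """True if no calendar year between the first and last publication is empty."""
    years = {d.year for d in pub_dates}
    return years == set(range(min(years), max(years) + 1))


# The two cases from the text; day-of-month is a placeholder.
case_a = [date(2009, 1, 1), date(2010, 12, 1)]  # January 2009, December 2010
case_b = [date(2009, 2, 1), date(2011, 1, 1)]   # February 2009, January 2011

for label, dates in (("A", case_a), ("B", case_b)):
    gap = months_between(dates[0], dates[1])
    consecutive = publishes_every_calendar_year(dates)
    print(f"Case {label}: gap of {gap} months, consecutive calendar years: {consecutive}")
```

Both cases have the same 23-month gap, yet only the first passes the calendar-year test, which is exactly the inconsistency a month-based measure would avoid.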

This unevenly defined population is then subjected to counts of citations and h-index values, in an effort to understand whether UCP authors are more highly cited and generally have higher h-indices. Clearly, the answer should be yes, and it is. But are these UCP individual authors, or poorly disambiguated clusters of identifiers? Did the researchers just find the senior researchers across science, who have more honorary authorship? Did they just find the corresponding authors? Could the 1% simply be those with the hardest names to disambiguate? If Scopus has 31% (or even 90%) accuracy of disambiguation, the authors could be measuring an artifact.

Finally, even if this were true, is it interesting? Not especially. Does it matter that every field has well-known authors who are natural writers and who publish scientific papers, editorials, book reviews, letters, and responses on a consistent basis? As noted above, most media skew to a small proportion of stellar performers. It may simply be unavoidable. But it also seems to me very likely that this study overstates the disproportionate contribution of these hyperproductive types. Not only might the estimates be off by a significant degree, but the implication about what type of content is being counted may be misleading.

This study raises a lot of interesting issues — disambiguation, workforce estimates, citation concentration, measurement to meaning, and social norms. However, after examining the paper in question, I’m 99% convinced it can’t support its claims.

Discussion

14 Thoughts on "The 1% of Scientific Outputs — A Story of Strawmen, Sensationalism, and Scopus"

If someone can be listed as an author in 58 papers published in seven months then “author” does not mean what it used to mean. Maybe that is the problem.

He includes an authorship position breakdown in his CV, if you’d care to examine it.

That is really not the point. The co-authorship fad has changed the meaning of authorship. For example, if I have an electron microscope, every paper that has used it is likely to list me as an author, even though I know nothing about the research beyond getting my machine to work on it.

Are we supposed to go into every author’s CV to find out what their contribution was? That is impossible. It used to be that 100 articles in a lifetime was a major achievement. Apparently it is now 100 articles a year. This makes no sense.

There has been a lot of discussion about “contributorship.” Some disciplines have very specific guidelines about who gets to be considered an author (ICMJE). Other fields play by their own rules that fit their practices. Some journals are asking for the exact contribution of each author and a taxonomy is in the works to define the roles. Part of this discussion also addresses “credit.” Should the guy with the microscope get credit beyond a mention in the acknowledgements? I have no idea what the answer is because these are academic and researcher issues. I can’t think of any other profession that cares as much about credit as the academic community.

Does ICMJE actually say that some people cannot be authors? That I have not seen, just concerns about defining roles, as you mention. I really do think the multiple authors push is a bit of a fad, perhaps tied to the foolish yet popular idea that interdisciplinary research is somehow better than disciplinary research. NSF is big on this one.

As for your last observation, I think that the arts and politics rank high as well. All for the same good reason, namely success is defined by what others think about your work. Jockeys and horse trainers too, come to think of it.

I have been battling Elsevier’s contradictory authorship definition for years, and my complaints to all sorts of intellectuals have fallen repeatedly on deaf ears. And, as a result of my battles with Elsevier, I have now suffered a serious hit. I was banned from their key horticultural journal. I decided to document the academic fraud of this publisher and editorial board at Retraction Watch: http://retractionwatch.com/2014/04/10/following-personal-attacks-and-threats-elsevier-plant-journal-makes-author-persona-non-grata/. This is important, because I simply cannot understand why Ioannidis et al. focused exclusively on Scopus, an Elsevier product, when there are so many other useful, but perhaps overlapping, databases that could reveal different patterns and conclusions, such as SpringerLink, Taylor and Francis Online, Wiley Online, PubMed, etc. Since Elsevier claims to house about 25% of the world’s science (http://www.elsevier.com/online-tools/promo-page/science-direct/et_sd_adwordsgeneric_nov2013/home?p1=p032), can we thus assume that the assertions made by Ioannidis et al. are only 25% correct? In that sense, I fully agree with Kent Anderson’s criticisms.

Although SCOPUS is an Elsevier product it covers much more than Elsevier publications and would likely include most, if not all, of the citations found in the other sources that you mention. Leaving aside the other methodological problems that Kent identifies it is a perfectly appropriate starting point for an investigation of this sort.

The ICMJE criteria for authorship are quite explicit:

Substantial contributions to the conception or design of the work; or the acquisition, analysis, or interpretation of data for the work; AND

Drafting the work or revising it critically for important intellectual content; AND

Final approval of the version to be published; AND

Agreement to be accountable for all aspects of the work in ensuring that questions related to the accuracy or integrity of any part of the work are appropriately investigated and resolved.

If someone does not meet all four they should not be listed as an author.

I think this says more about Ioannidis than anything else. In fact, if the “1%” phenomenon that he has identified is a cause for concern, then the Ioannidis phenomenon (he must be in a tiny fraction of 1%) is a huge cause for concern.

The “John Smith” vs. “J. Smith” issue is dealt with by ORCID, an identifier for contributors to academic papers, journals, and other such publications. It’s the equivalent for such people of an ISBN for a book, or a DOI for a paper.

An ORCID enables us to determine that the John Smith who wrote paper A is the John Smith who wrote paper B, and J. Smith who wrote paper C, but not the John Smith who wrote paper D. It also allows us to determine that John Smith-Jones is the same author, after a name change, say on marriage or divorce.

An individual can create an ORCID – http://orcid.org – free, and the process only takes a few seconds. The ORCID is then theirs for life, even if they change job or affiliation. They can use their profile on the ORCID site to show a list of work they’ve authored.

Organisations using ORCID already include Nature Publishing Group, Cambridge University Press, Wellcome Trust and Wikipedia/Wikidata (I lead the integration work on the latter pair).

There is more information on the ORCID website.

I’m a huge fan of ORCID, but the levels of adoption are still too low to have had any practical impact on this vast dataset. As it becomes embedded in researcher workflows we’ll see adoption levels rise, but it might take a different approach to fill in the gaps for papers more than, say, five years old, where a good chunk of the authors may no longer be active in research.

P.S. I forgot to say, my ORCID is http://orcid.org/0000-0001-5882-6823 – there’s a plugin for WordPress, that allows commenters to include them with their other contact details: http://pigsonthewing.org.uk/orcid-plugin-for-wordpress/