Research Consulting, a UK consultancy, was recently retained jointly by London Higher and SPARC Europe to examine what it costs UK institutions to comply with the open access (OA) requirements laid out by various UK research funders, especially Research Councils UK (RCUK) and the Higher Education Funding Council for England (HEFCE). The need to measure these costs has become particularly acute because HEFCE announced earlier this year a new and slightly more stringent set of OA requirements to which journal articles and conference proceedings (in all disciplines) must adhere if they are to be eligible for submission to the post-2014 Research Excellence Framework (REF), by which the research performance of UK institutions is evaluated.

The resulting report is based on responses to a web-based survey targeting “all UK higher education institutions (HEIs) and public sector research establishments (PSREs) in September 2014, followed by a series of case study discussions with a subset of the participating institutions.” The survey “relies on institutions’ estimates of time spent on open access,” including costs of staff time, overhead investments, and other direct expenditures involved with implementing the RCUK’s policy—however, explicitly excluded from this calculation is the cost of article processing charges (APCs) that are levied on the majority of “gold” OA articles. (The APC expenditure figures reported here are artificially low anyway, since they “relate only to those costs met from RCUK block grants and managed by university libraries and research offices”—not to APCs paid with local funds).

The report is well worth reading in its entirety, but its summary of key findings puts things in a nutshell quite nicely:

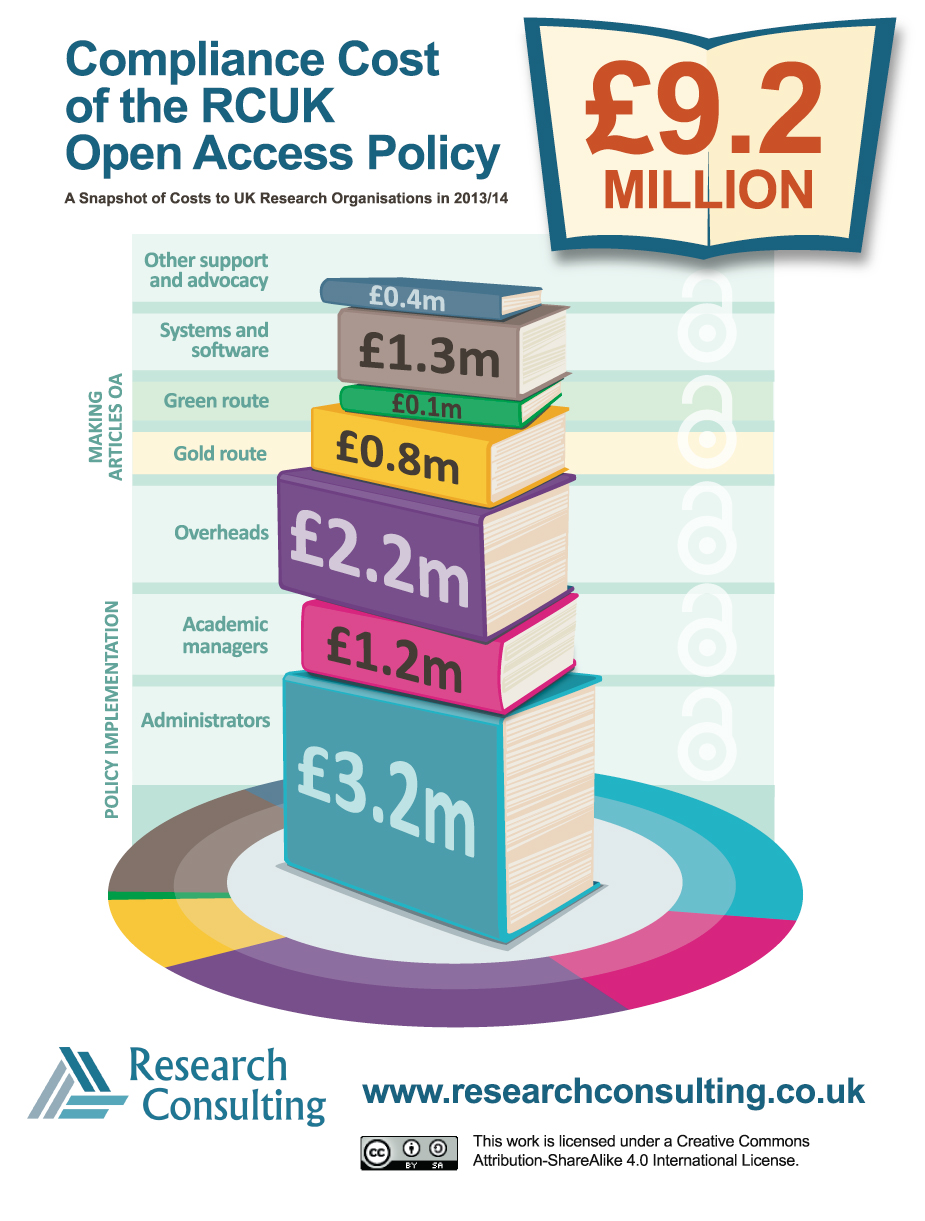

- £9.2m cost to UK research organizations of achieving compliance with RCUK Open Access Policy in 2013/14

- The time devoted to OA compliance is equivalent to 110 full-time staff members across the UK

- The burden of compliance falls disproportionally on smaller institutions, who receive minimal grant funding

- The cost of meeting the deposit requirements for a post-2014 REF is estimated at £4-5m

- Gold OA takes 2 hours per article, at a cost of £81

- Green OA takes just over 45 minutes, at a cost of £33

- There is significant scope to realize efficiency savings in open access processes

A few thoughts on the report and on some of its findings:

At several points in the report, it is noted that most of the costs identified arise from “staff time, often at a senior level, spent on policy implementation, management, advocacy and infrastructure development.” One can reasonably assume that some of these costs will decrease over time, though the report does point out that even when infrastructure development is finished and organizational efficiencies realized, there may not be significant economies of scale available: many of the costs associated with article-by-article processing remain the same on a unit basis no matter how many articles you process.

Although the overall cost figure that will be most often cited is the £9.2m price tag for institutional labor and overhead, if we’re truly “counting the cost of open access” then it makes no sense to exclude APCs from the calculation (though it certainly does make sense to discuss them separately). According to the report, APCs amount to “some £11m or more,” bringing the total cost of compliance with RCUK regulations to “in excess of £20m.”

I found interesting the repeated mention of costs related to “advocacy.” This struck me as curious, given that we’re talking about the costs of compliance with a mandatory program. Why invest in advocating for a program that you have no choice but to adopt? Looking at the detailed data worksheets provided at the Research Consulting website, it appears that the costs reported for “Marketing and Events” and “Consultancy” are being categorized together as “advocacy.”

The study’s reliance on library staff’s informal self-reports of time spent on OA-related projects (which “in many cases… represent[ed] the best guess of a single member of staff”) is a little bit troubling, especially given the strong normative force of OA in the library world. I doubt that any respondents would have deliberately understated local costs, but the social pressure on librarians to show themselves supportive of OA is strong and should perhaps be taken into account when considering the accuracy of those best guesses.

On the flip side of that point, it’s also important to bear in mind that while this report attempts to document costs, it does not (and could not possibly) measure the value that is certainly created by open access to this publicly-funded research. Measuring cost is important, even essential, but when evaluating a policy or program it’s obviously only part of the picture (as this study’s authors would surely agree).

This paragraph, dealing with the distribution of costs as compared to the distribution of grant funding among UK institutions, is worth quoting in full (emphasis mine):

The total cost of compliance with the RCUK open access policy in 2013/14 (c.£20m) appears broadly comparable to the total block grants provided by RCUK in the period of £16.9m. RCUK has offered institutions significant flexibility to meet non-APC costs from block grant funding in the early years of its policy, an approach which was welcomed by many of the survey respondents. In practice, however, a significant proportion of research organisations’ costs (predominantly academic and administrative staff time and overheads) are not easily identifiable and recoverable from these grants, and are thus borne by the organisations themselves. Many therefore find themselves carrying forward significant balances of unspent RCUK funding, even though the overall cost they have incurred is likely to exceed the value of grant received.

Overall, I think this is an admirably clear-headed and useful report, and while its overall findings may not be completely generalizable to countries that don’t have a centralized national higher education system (<cough>United States<cough>), the more granular data it provides regarding local infrastructural and administrative costs should be both interesting and useful to a very broad readership.

Discussion

37 Thoughts on "Quantifying the Costs of Open Access in the UK"

Very interesting, thanks for summarising.

Two quick calculations:

First, since “The time devoted to OA compliance is equivalent to 110 full-time staff members across the UK” and there are, coincidentally, 109 universities in the UK, this works out to almost exactly one OA staff member per university. (Obviously the distribution is not that even.)

Second, since APCs bring “the total cost of compliance with RCUK regulations to ‘in excess of £20m’”, and the population of the UK is 64.1 million, that cost comes to 31.2p per person, or 1/72 of the typical pay-per-view price of a single article at $35 = £22.46. (Of course, that’s only taking into account the benefit within the UK, ignoring the broader benefit to the rest of the world.)
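The arithmetic in this comment can be checked with a short sketch. It uses only the figures quoted above (a £20m total, a UK population of 64.1 million, and a £22.46 pay-per-view price); the variable and function names are illustrative, and the result is a per-capita cost, not a measure of benefit.

```python
# Figures as quoted in the comment above; names are illustrative only.
total_cost_gbp = 20_000_000      # "in excess of £20m"
uk_population = 64_100_000       # 64.1 million
ppv_price_gbp = 22.46            # $35 converted at the commenter's rate


def per_capita_pence(total_gbp: float, population: int) -> float:
    """Spread a total cost in pounds evenly over a population, in pence."""
    return total_gbp / population * 100


cost_pence = per_capita_pence(total_cost_gbp, uk_population)
# How many per-capita shares fit into one pay-per-view purchase.
ratio = ppv_price_gbp * 100 / cost_pence

print(f"{cost_pence:.1f}p per person; one pay-per-view article costs about {ratio:.0f}x that")
```

Dividing by a different denominator (for example, an estimate of the number of likely readers rather than the whole population) only requires changing `uk_population`.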

How does dividing by the population translate into a benefit? I doubt every man, woman and child will read journal articles. That there are any benefits to this scheme seems somewhat far from certain.

It’s also (on the OA side) only taking into account the per-taxpayer cost of administering OA programs in an environment of significantly less-than-total compliance — not the total cost of publishing an article plus the cost of providing it on an a la carte basis, which is what’s represented by the pay-per-view price. (In some cases a profit margin is figured into that price too, of course.) Comparing program administration cost with the market price of a finished product isn’t an apples-to-apples proposition.

That being said, by no means am I arguing here that these OA programs represent a bad deal for the UK taxpayer. I’m simply sharing the cost figures that were calculated by this study. Those figures can (and most certainly will) be parsed in any number of ways by people with different agendas and different perspectives on the OA question.

What percentage of total UK articles does this cover? What percentage of UK articles are being made OA to incur the costs listed? It’s clearly not 100% as of yet, as the RCUK talks about achieving smaller levels of compliance and slowly ramping up. So if the costs are X for Y% of articles, what would they be for 100% of articles?

The report estimates the first-year RCUK target at about 10,000 papers (see p. 14). I don’t think we have firm numbers yet on what the actual rate achieved was (though a report’s due soon) but based on what people have said so far, it probably was about that or a little higher.

There are estimates on p. 14 for what the £33/paper green estimate might mean at various scales, though note that (per p. 2) the admin costs to process a paper only accounted for about 10% of the overall costs, and the rest will probably not rise quite as fast as overall numbers go up.

Those are all good questions, and I’m not sure if anyone has studied the percentage of UK-produced articles that is currently administered by existing compliance programs. If a reader knows of such a study, please do share it. It would be great if this report spurs deeper and more comprehensive cost studies.

Mike, the entire population of the UK never read and does not read journal articles. To use total population figures is a canard. Thus, costs have to be based on cost per reader. There are some 2.3 million college students (https://www.hesa.ac.uk/) and 185,500 academics. I have no idea as to the number of scientists in the UK, but let’s say there are another 185,000. There are some 900,00 employed in science companies (http://www.prospects.ac.uk/science_pharmaceuticals_sector_overview.htm). There are some 211,500 (http://www.gmc-uk.org/doctors/register/search_stats.asp) registered doctors in the UK. Can we agree that the vast majority of the market for journal articles is made up of the total of these populations? If so, we are looking at a market of some 3 million.

What was and is the cost of subscription-based journals in the UK? Considering that the vast majority of those who read the articles read them for free through their library subscription, or, if not part of the above communities, could go to a library and read them, aren’t the costs just being shifted from the public/private sector to the public? Before OA, the public was paying for the subscriptions and now the public is paying the publication costs. On the other hand, the private science companies are not paying for article access except through their taxes.

Has article readership in the UK gone up because of OA?

Lastly, I don’t see the “greedy” publishers losing money because of OA, but rather making money off of it just as P1 is now making money off of OA.

Thus, OA was and continues to be a shell game and the reason is simply because there is no free lunch!

This estimate is probably very low because it does not include the labor of the researchers, which may well be one or two orders of magnitude greater than that of the administrators. Presumably this labor is paid for by the HEIs, so is a cost to them. What they call advocacy is probably mostly communication of the rules to the researchers, which in itself can be laborious for the latter.

Figuring out new rules is a major cost to the community. In the US regulatory system all this labor is called the “burden” of the rules and the burden is estimated for every regulation because we have a Federal burden budget process that limits the burden that agencies can impose on people. (I helped design it.) Under this budget process an agency cannot impose new burden without cutting existing burden somewhere. They thus try to minimize the burden of all their rules.

I remember a day when publishers were criticized for making “value” arguments vs. “cost” arguments to justify their prices. It seems that part of your interpretation is that “cost” cannot be the only basis of assessment, and that “value” needs to be taken into consideration. It’s an interesting twist.

I can’t imagine a reasonable analysis of any system, program, or price that doesn’t take value into consideration. Of course, there’s a difference between saying “You should take the value of our product into consideration when considering our price” (I don’t think anyone would argue with that) and saying “You should accept any price increase we are able to shove down your throat because, trust us, our product is worth it.”

Rick, programs and markets have rather different economics. The value of a program is usually called the benefits, as in benefit-cost analysis, or do you have something else in mind? In markets, I think, the value of a product is measured by sales.

David, by “analysis” I mean the thought process that goes on in someone’s mind when deciding whether or not to participate in a system or program or to buy a product; I’m not talking about the formal economic analysis of a market.

Economics is supposed to include rational individual decision making, in fact it is based on it. The value of the product to the individual is measured by the price they are willing to pay, which varies from person to person based on the utility specific to them. This is why sales measure value (in the simplest model). Of course when the individuals come in collective systems, like families and universities, things get more complicated, but it is hard to see this principle not holding.

Economists may use price as a proxy for value in modeling, but neither they nor anyone else actually believes that price is, in the real world, a fully reliable indicator of value. In fact, we all know it isn’t; that’s why we stop and ponder possible purchases (“Will this be worth the price?”) and sometimes do extensive research on the quality and reliability of products before committing to purchase them. That process of pondering (with or without research) is the “analysis” I’m talking about; Kent used the word “assessment” in the comment to which I was responding.

Rick, it is not the price that measures value in economics. It is the price one is willing to pay, as I said. The kind of pondering you are talking about has to do with determining that. The point is that the product has no value per se. Value is always in relation to a given individual. The idea of markets is to find the price that best fits the distribution of what people are willing to pay.

Some thoughts (I was a survey respondent):

a) Yes, “advocacy” as used here more or less covers all the internal communications – time spent telling researchers about the policy, encouraging them to use the internal systems, etc. I’m not sure how we ended up with the term, but it seems to have stuck.

b) I agree entirely about the time estimates (particularly estimates for time taken by authors). I ended up omitting this from our return as we’d never tried to count it before and so I didn’t trust any retroactive numbers I’d come up with.

That said, the very rough total suggested on p.13 of one FTE to do the admin work per thousand papers is in line with some other estimates I’d seen before this survey came out. So it feels plausible, overall.

c) One thing to note is that the final £81 estimate for gold includes an estimate of £17 to cover processing the APCs, but that the £33 estimate for green does not factor in the admin time for paying any page charges etc, probably because APCs are often paid and tracked centrally and page charges often aren’t. As a result, the difference in cost to the institution of handling the two types of paper may be less than these numbers initially suggest.

On this last point, I’d love to see some work done to estimate the overall cost of page charges etc alongside these studies – it seems to be the big missing element in the “total cost of publication” discussions.

It is great to see some actual cost estimates, however partial; now we need the same on the benefits side. It will be interesting to see if the US Public Access rulemakings to come include cost-benefit analyses. This is required if the rules are deemed to be economically “significant.” Having to do this may help explain why we still have no agency programs almost two years after the OSTP memo, except DOE’s. DOE claims they do not need a rulemaking.

Benefit estimates are necessarily speculative and the US rules for doing them recognize this. See OMB Circular A-4: http://www.whitehouse.gov/sites/default/files/omb/assets/regulatory_matters_pdf/a-4.pdf. But doing a benefits estimate requires at least making clear the kind of things that have to happen in order to justify the cost of the rules. OA seems to lack this kind of picture at present.

I’m pleased to see my report has generated some interest beyond these shores, and thanks for the largely positive feedback. Rick has done a good job of summarising the key findings and recognising some of the nuances and caveats that applied to the study. To pick up on just a couple of the points in his article and the associated comments:

1) I was interested to note Rick’s observation that social pressures to be supportive of OA may have led librarians to err towards lower estimates of time. In many cases I suspect librarians (and research managers, who are also important stakeholders in the process) would in fact be more inclined to overstate the time spent, as this helps them make the case for additional funding and resource. Certainly the reliance on self-assessment of time is a weakness in the study methodology, but I think it is very difficult to say whether this skews the results in one direction or another.

2) Regarding the percentage of articles covered, the figure of £9.2m relates to the costs of achieving compliance with the Research Councils UK policy in 2013/14. It’s estimated that RCUK funds about 25,000 articles per annum, or just under 18% of the total scholarly output of the UK (which a recent Elsevier study cited in my report put at 140,000). Only 45% compliance with the policy was required in 2013/14, so it is probable that 10,000-12,000 articles were actually made OA in 2013/14 in accordance with the policy. However, this doesn’t mean costs would scale up in accordance with article volumes – only about 10% of the costs I identified were directly linked to article volumes, the rest were about implementing the infrastructure and cultural changes needed to support OA.
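As a rough illustration of the figures in this comment, the sketch below recomputes the RCUK share of UK output and the number of articles implied by 45% compliance, then projects non-APC costs at a higher volume. The projection rests on a strong simplifying assumption that is not in the report: the roughly 90% of costs not linked to article volume is held flat, and the roughly 10% that is linked scales linearly. All names are illustrative.

```python
# Figures quoted in the comment above.
uk_total_articles = 140_000     # total UK scholarly output per year
rcuk_articles = 25_000          # RCUK-funded articles per year
required_compliance = 0.45      # compliance required in 2013/14
non_apc_cost_gbp = 9_200_000    # cost of compliance excluding APCs
volume_linked_share = 0.10      # share of cost tied directly to article volume

rcuk_share = rcuk_articles / uk_total_articles
expected_oa_articles = rcuk_articles * required_compliance


def projected_cost(target_articles: float,
                   baseline_articles: float = expected_oa_articles) -> float:
    """Project non-APC cost at a new volume.

    Assumption (not from the report): fixed costs stay constant and
    volume-linked costs scale in proportion to the article count.
    """
    fixed = non_apc_cost_gbp * (1 - volume_linked_share)
    variable = non_apc_cost_gbp * volume_linked_share
    return fixed + variable * target_articles / baseline_articles


print(f"RCUK share of UK output: {rcuk_share:.1%}")
print(f"Articles at 45% compliance: {expected_oa_articles:,.0f}")
print(f"Projected cost at 100% compliance: £{projected_cost(rcuk_articles):,.0f}")
```

Under these assumptions, moving from 45% to 100% compliance raises the projected cost by a little over a tenth rather than doubling it, which is the point the comment makes about costs not scaling with article volumes.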

We are currently working on an academic paper that will draw on some of the findings in the report and explore some of the implications in a little more detail, so the comments here provide some very useful prompts as we put this together.

Good comments and questions, Rob. In response to this:

In many cases I suspect librarians (and research managers, who are also important stakeholders in the process) would in fact be more inclined to overstate the time spent, as this helps them make the case for additional funding and resource.

This is a great point and may very well be true, especially in the UK where there is a real and binding mandate at work. At my (US) institution, the argument “you should give us more money so we can manage OA protocols” would likely be answered by administrators with “why are you spending all that money managing OA protocols when our faculty have already indicated to you that they’re not much interested in OA?” But again, our faculty have the luxury of not having to be much interested in OA, except in the case of funder mandates.

Rick/Rob: If you will permit a related query, is it fair to predict that the responsibility for managing OA publication will migrate from librarian to research manager? That would seem logical.

Andrew, it’s a good question but that’s not what I’m seeing so far, though it is certainly true that the distinction between the responsibilities of the two roles is becoming increasingly blurred. In most institutions in the UK, OA is managed as a partnership between librarians and research managers, with the library generally leading on communications and repository management, but the research office more closely involved in the funder reporting and compliance aspects, though of course there are exceptions. It’s hard to see research managers taking overall ownership, as repositories almost invariably rest within libraries, as does the expertise on copyright and licensing. I also think librarians need to retain ownership of OA in order to preserve visibility of their institution’s total cost of publication, and to be able to negotiate with publishers over both APC and subscription prices collectively. I should perhaps point out that I used to be a research manager, so this is not to denigrate the importance of their role at all, but I think librarians are usually better placed to deal with OA in its entirety.

Just one UK viewpoint:

Historically universities have had no role in the process of getting published. Open Access policies from research sponsors and university funding agencies are changing that. Much of our cost comes from workflows that don’t recognise this new reality and the workarounds we use to compensate for that misfit.

Publishers consistently obtain a DOI for an article when accepting it for publication and share the DOI with authors, allowing them to enter a URL for a manuscript version on a subject or institutional repository

Publishers notify universities when one of their authors is accepted for publication and send the DOI, allowing them to enter a URL for a manuscript version on a subject or institutional repository

Publishers send the university (or some clearing service) a pdf of the AAM together with basic metadata on authors (eventually ORCID), title, journal and embargo in addition to DOI

Publishers collect structured information on grants that supported the work reported in the article and share this with authors/institutions. They also ensure consistent acknowledgement section formats and data content.

Institutions (with help from CrossRef) use DOI to ensure OA manuscript location is available as well as publisher Version of Record location to anyone with the DOI

Institutions use the PDFs to populate a repository that promotes the publisher VoR

Institutions use the grant information to relieve researchers of the tedium of grant output reporting (or some of it)

Result: lower admin costs, effective use of DOI and reconciliation of citations, happier authors.

I know publisher systems were not designed to work this way, but is it really so hard that it couldn’t happen?

Currently, CrossRef does not allow two DOIs to be assigned to one piece of content. For example, we are not permitted to have a new DOI on a translated version of a published article. Even if the articles are not in the same language, CrossRef considers the content to be the same. There are a few exceptions in which messy and very non-user-friendly resolver pages are allowed if the content is dual hosted. I don’t get how having one DOI from the publisher with a new DOI for the manuscript can work.

My proposal was that a single DOI can be aware of and report more than one location for an article. So there is a single DOI for a single article, but that DOI can return the VoR (default) or the manuscript location (if passed a suitable query parameter). I wasn’t suggesting that this could be done today, just that it would not be difficult to extend CrossRef to make this possible, should the owners of Crossref wish it to happen.
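The proposal can be pictured with a toy resolver. This is purely a hypothetical sketch and not how CrossRef or any DOI resolver works today: the registry, the `version` parameter, the example DOI, and the URLs are all invented for illustration.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class ArticleLocations:
    """Known locations for one article, keyed by a single DOI (hypothetical)."""
    vor_url: str                          # publisher's Version of Record
    manuscript_url: Optional[str] = None  # repository copy, if one is registered


# Hypothetical registry: one DOI, more than one location.
REGISTRY = {
    "10.1234/example": ArticleLocations(
        vor_url="https://publisher.example/article/1",
        manuscript_url="https://repository.example/eprint/1",
    ),
}


def resolve(doi: str, version: str = "vor") -> str:
    """Return the VoR by default, or the manuscript when explicitly requested.

    Falls back to the VoR when no manuscript location is registered.
    """
    record = REGISTRY[doi]
    if version == "manuscript" and record.manuscript_url:
        return record.manuscript_url
    return record.vor_url


print(resolve("10.1234/example"))
print(resolve("10.1234/example", version="manuscript"))
```

Because both locations hang off one identifier, citations of either version would still collate under the same DOI.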

That is interesting, Angela, because with CHORUS some publishers are listing both the manuscript for public access and the VOR for sale. I have assumed they would have different DOIs because the DOI that CHORUS gives the Feds has to land on the manuscript. Perhaps the DOI landing page will provide access to both versions. It is common for the DOI landing page to not be the article, but rather to offer the article, plus a lot of other stuff. Have to check this out.

I thought they were also talking about having a DOI that goes directly to the full text, but maybe that is just talk at this point.

In general, the DOI resolves to the abstract page for a given article. From there one can navigate to the different versions available.

I can see why the Feds might be hesitant to accept that DOI link for public access, if you are selling the VOR from the landing page. That is called a commercial activity and they are to be avoided.

I’ve not heard any problem from the DOE on this. We also run ads on the VOR, another commercial activity.

But isn’t there a problem in that not all instances of the article are accessible from that page? Very often researchers land on the abstract page and, if they don’t have a subscription, assume that they don’t have access and use dark sharing to get the content. In fact, that user might have access to the content through an aggregator, an institutional repository, or a subject-specific repository or pre-print server.

Link resolvers are the traditional way to tackle the ‘appropriate copy problem’, but they have a number of issues: depending on the institution and how it obtains content, links are often broken, incomplete and not at all intuitive. I hope that CHORUS can alleviate the problem, but currently it’s sometimes too difficult for many scholars to work out where to get properly licensed copies of content, and so they resort to slow, inefficient ways of getting content, like emailing the author and asking for a copy.

Is the purpose of the DOI to be a persistent identifier of the version of record or to be a pointer to “all instances of the article”, particularly those that are accessible to persons without a subscription? Perhaps the DOI is not the right tool for doing what you’re seeking to do. One would think that a search engine would be the appropriate tool for finding online copies of something.

I don’t know if this is how it ‘should’ be, but it seems to me valuable that an article have a single identifier, not least so it becomes easy to collate citations. If some people are going to find and cite the manuscript, it would be good that this be tracked as well as citations of the VoR – unless you think manuscript citations ‘don’t count’…

Citing the work is citing the work, regardless of which version you read. But where it gets tricky is when you have multiple versions of a paper available, the version of record, the author’s manuscript and potentially a version posted to a preprint server. If all are significantly different, what happens when I cite a statement that was removed from the final version?

It’s one of the reasons why I think using the Version of Record as the “official” green OA version through CHORUS can save a lot of trouble.

That may well be, but it won’t help our organisation comply with government (HEFCE) policy…

CHORUS has been built to be scalable and expandable. While for the moment we’re focused on the US government policies, the not-for-profit behind CHORUS is called CHOR, Inc. The notion is that the US part of CHORUS just represents the first project we’re doing. So while not on the immediate horizon, there’s potential for meeting a wide variety of needs through the system.

Hi – I wanted to clarify this point. Specifically, I am talking about CrossRef DOIs; DataCite DOIs that, say, figshare assigns have different rules. It’s not true that publishers aren’t permitted to assign a new DOI to a translated version of a published article. CrossRef is a citation identifier and a translated version of an article is a separately citable item and could be given a new CrossRef DOI.