The Oxford English Dictionary’s overarching definition of the transitive verb “publish” is “to make public.” An early use, dating to 1382, is “to prepare and issue copies of (a book, newspaper, piece of music, etc.).” This is probably how most publishers think of the term: public distribution of a text. In usage dating from 1573, however, the item in question and the manner of its distribution are more expansively conceived: “to make generally accessible or available for acceptance or use . . . to present to or before the public;” in more specialized (and obviously more recent) use “to make public . . . through the medium of print or the internet.”

Recent contretemps in history, covered by The Chronicle of Higher Education and featured in an unusual forum in the American Historical Review, re-raise critical questions about what it means “to publish” in a digital era and suggest that the earlier, tighter usage is still central for historical scholarly practice.

The short version is this. (Disclosure: I know three of the principals professionally; one of them is a good friend. Such is this small world.) David Armitage, chair-on-leave of the History Department at Harvard, and Jo Guldi, an Assistant Professor of History at Brown, wrote The History Manifesto, published in an open access manner by Cambridge University Press (CUP) and copyrighted October 2014. The History Manifesto called, among other things, for a return to, if not longue durée, then a longer durée in historical scholarship. The authors contended that historians in the last decades have been occupied with smaller chronologies, smaller geographies, and smaller topics, and have thus lost the platform for addressing – or showing leadership on – big problems of global urgency. The book was widely reviewed, with some plaudits and some pans. There were blogs and departmental conversations. There were debates about the correlations of scale and impact, and the definition of each.

And there were questions about methodology. In the AHR forum Deborah Cohen, Professor of History at Northwestern, and Peter Mandler, Professor of History at Cambridge and the President of the Royal Historical Society, summarized some of these and raised more of their own. For example, when Armitage and Guldi assayed the bigness or smallness of dissertation and monograph topics, did they fundamentally misinterpret the data — particularly the analyses of other scholars on this subject?

But things really heated up in the late winter, months after the initial round of reviews and just as the AHR forum was made available online (for print publication in its April issue). A new, revised version of The History Manifesto was posted on the CUP website. For weeks there was no notice that this was a revision, and the new version still showed the October copyright date. The website now heralds the revision as dated February 5, although it was not posted until late March, and offers a page with both the original and revised versions and a modest list of changes to the latter (most of which seem to be in direct response to various criticisms of the Manifesto, including those of Cohen and Mandler). Armitage and Guldi contend in posts on Guldi’s blog in the last few days that there is nothing nefarious in the delayed acknowledgement of the revision, and that they, and CUP, were learning as they went along, blazing a new path to open access humanities publishing. I haven’t seen other reviews of the original version post a notice to this effect, but the AHR forum now carries a note that Cohen and Mandler’s critique, and the forum discussion, were based on the first version of the Manifesto.

There is a lot of grist for any mill here. The issue that I think will linger has to do with what Armitage and Guldi have claimed as the brave new world of Kathleen Fitzpatrick’s Planned Obsolescence: a world of free-flowing exchange and revisable text. Guldi’s blog post on April 9 notes that in the midst of both the time-consuming efforts to update the website and the “bloggingly fast” critique and revision, “there are scant precedents for appropriate habits of keeping readers updated with what version they are reading.” Indeed.

Also this month, Perspectives on History, the magazine of the American Historical Association, which also publishes the AHR, featured a series of four essays on the theme of “History as a Book Discipline.” The authors considered a range of issues about the role that books (as opposed to articles or other forms of scholarship, including public history work and digital humanities projects) play in the discipline as a method for advancing humanistic knowledge and as a professional credential. Claire B. Potter, a Professor of History at the New School and widely known for her blog, Tenured Radical, asked “Is Digital Publishing Killing Books?” (Answer: nah, there’s been a crisis of books since the nineteenth century, and besides, books seem to be thriving alongside all sorts of digital media.) But none of these authors considered whether “the book” was a fixed text. They assumed. And as most readers of this blog will know, Fitzpatrick imagined, and enacted, a process of open review of her manuscript in process, not post-“publication.”

It is uncertain how review and revision should function in practice, “post-publication.” But it is also unclear why we should evaluate work that might – or might not – be complete. One explanation is that because print publications have often made corrections to subsequent editions of a work, this online revision really isn’t much of an innovation. On the other hand, some argue that online publication offers a new form of scholarly communication, and with the speed of the digital age it should not be surprising that the traditional stages of peer review, publication, and critical evaluation are stepped up and intermingled. Another rationale holds that because post-publication revisions improve the work, we should revise in this way to better scholarship. Perhaps diversity in forms and expectations of a “published” text should simply be welcomed; to quote the great French philosopher Pepe Le Pew, “vive le difference.”

But isn’t scholarship built on an architecture of citation? Even if one doesn’t credit the whiggish notion that we will know more and be better for it over time, the structures of scholarship are such that one builds on what has come before. Citation, the essential scholarly building block, requires some measure of fixity, a version of record. Open access requirements to deposit “accepted manuscripts” that have not yet been through the editorial and production processes have already been called out for creating too many confusing (and uncitable) versions of scholarship. In the case of The History Manifesto and the AHR Forum, reviews were written about one version of the book while another was in the works. Reviewers quoted passages and cited pages that changed. Perhaps the AHR book review editors would have paused before assigning reviews if they had known the book was a moving target. Or perhaps they might have encouraged their reviewers to engage with the project in a different manner.

So what does it mean for scholars to “publish?”

Discussion

50 Thoughts on "Version Control; or, What does it Mean to “Publish?”"

Perhaps we need the concept of the “version-less” document. Wikipedia articles are one example, crowd-sourced Google Docs even more so. This is where the “as of” citation comes in. And yes, they play hob with traditional print-based practices. Not sure there is an alternative.

I think the point is that the document, for better or worse, is an artifact of print. In the digital world everything becomes a service. Scholarly communications has not caught up with this yet. But think of the constant updates you get from consumer services. We will be reconceiving the article as a dynamic service. It will probably go through many iterations before we get there. Stay tuned, and be prepared to live a long life.

I think there’s a fundamental difference between something that’s assumed to represent the evolving state of knowledge (borrowing David’s phrase below), such as Wikipedia or a project on Google Docs that a group is creating, and a published piece that people are responding to through formal (published) review. But this is exactly the issue. Should the reviews be revised, too?

The question is when we are talking about. If you mean today, any solution is a kludge. If you mean in 10 years, the fixity of the article disappears, and along with it the ecosystem of citations, precedence, etc. I am not saying I like this, but that’s how schol comm is evolving.

Joe, I respect your vantage on this, and I’m not denying your prognostication abilities. But I’d like to know how expertise develops in the world when “the fixity of the article disappears, and along with it the ecosystem of citations, precedence.” I’m not so old school that I believe in any kind of linearity of knowledge accumulation, but I do think that being able to converse (and in this I include scholarly “conversations” that are conducted via research publications) knowledgeably requires some common foundation.

I don’t have the answer to this, and as I said before, I am not saying I like the situation. But it’s hard to escape the trend. I think as publishers we have to stop talking about publications and start to focus on the ecosystem of publication.

Sure, but publication ought to be first about *what’s being published* and not how it’s being published. First principles! If the how isn’t serving the what, then we need to do some serious course (trend) correction.

Karin, all the evidence is in the opposite direction. The medium is the message. A “what” perspective is an artifact of print publishing. As I said, I am not suggesting that I like this: I am an observer, not an advocate. McLuhan coined the phrase in 1964 (which, coincidentally and irrelevantly, is the year that the Beatles first appeared on the Ed Sullivan show). That’s over 50 years ago, and we still don’t feel that lesson in our bones.

The problem is that this is not a fundamental difference. It is just a relative difference in practices that the digital era may make impossible to sustain. It is normal for revolutions to obliterate distinctions.

Regarding reviews, if the review is of a versionless document then the version being reviewed needs to be published with the review.

How much time and effort are you going to put into writing and publishing a book review for something that may entirely change, making your book review article instantly obsolete? Does this either spell the end of the published book review, or does it start an arms race of back and forth revision (review comes out, book changes, review is revised, book changes….)?

For most humanists, book reviews serve as a key mechanism for discovering books. If we believe that reviews are threatened in an environment of reduced fixity, which I think is a reasonable hypothesis, then new discovery systems will be required.

Many discovery systems assume fixity, perhaps most. Citations certainly do, also DOIs to some degree. Doing a Google search means searching their index of prior material, not the actual Web. Versionless documents will be a real challenge. But then too, there are different kinds of variability. Adding content, as with annotations and blog comments, is different from replacing content. There are many scenarios.

Some problems this creates:

1) Ask yourself, how often when you look at a Wikipedia article do you dig back into previous versions? Unless you’re a person actively editing a page, I’d suggest this behavior is pretty rare.

For most readers, what’s on the current version is going to appear to be what has always been on the current version.

2) Given the continuing use of the PDF version of articles (and eBook versions of books) which are downloaded once, and then consulted over time without ever going back to the original online source, a system like CrossRef’s CrossMark (http://www.crossref.org/crossmark/) becomes increasingly vital.

3) What is the motivation for an author to update their previously published paper? Given that our career and funding system is built around getting credit for publishing new works and new discoveries, will funders/institutions really care about updating old material? How will this be tracked and measured?

Hi Karin,

Your last post raised a fundamental question about the purpose of the research article–is it a high level communication meant for experts in a field or an announcement of a discovery meant for the non-academic public? Here you raise a similarly fundamental question about each piece of research literature–is it meant to serve as a historical record of the state of discovery at that particular time or as a constantly updated and comprehensive explanation of a phenomenon or concept?

In the science world, we assume that every single paper that is published will later be corrected by the next paper, or at least the understanding of what’s described will be greatly expanded upon over time. If I write a paper that claims that vaccines cause autism, and ten years later this is proven to be false, is it okay for me to go back and change my original paper and claim that I never said that, that I knew all along what was happening? Is there a level of deceptiveness in doing this? We are at war with Eurasia. We are at war with Eastasia. We have always been at war with Eastasia.

Further, does this subvert the peer review process? To publish the article, I must meet the criteria of the editor and the peer reviewers. Once published, am I free to completely rewrite the paper however I like? In this case, did the publisher and authors run those changes through the standard peer review process?

Right, David, that’s exactly the issue for history, too. As my graduate school advisor, the much-published Jack Greene, used to say of his own work, “that’s what I thought then!” Revised editions, largely because of the cost of print, often included minor corrections published years later, though I’d love to have some hard data on how often revisions/corrections are published in print. In the few examples of major works that have multiple editions, whether revised or condensed, it’s always been a bit of a hassle to distinguish even between those few.

But a constant and very fast process of revision suggests that to be part of the scholarly conversation on a particular piece you’d have a very hard time entering at a middle or later stage.

The entering problem also arises when a field is emerging or changing quickly via a flurry of papers. But if the revisions are versionless then yes, one can only deal with what one has. But then in this case the prior history should be largely irrelevant. Presumably the focus is on the document, not the reasoning that led up to it. If prior reasoning is important then versioning will probably occur. This may be the need that limits the versionless method: the need to see the prior reasoning.

Had Armitage et al. published The History Manifesto in Apple’s iBookstore, it would have had a version number, like software. Revisions would require incrementing that version number and a précis of major changes made. AFAIK, it is not possible to obtain earlier versions.

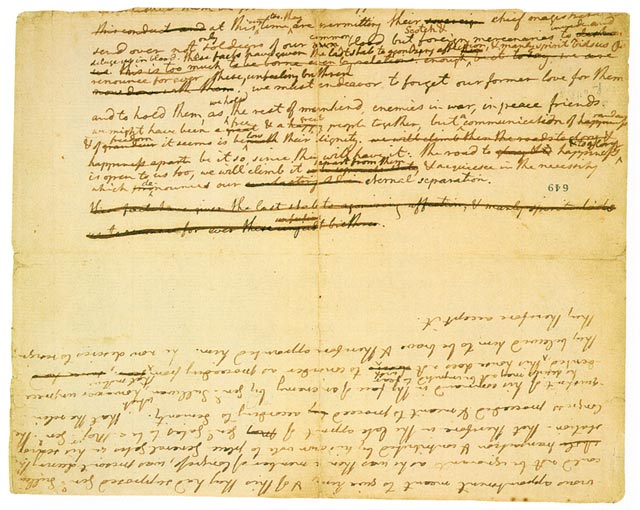

Loss of audit history militates against gaining insight into an author’s thinking. That is a loss that we should think about restoring somehow. No longer do we get to see early, handwritten drafts, marginal notes and the collegial correspondence that may have contributed to an article or book. Those things usually get flushed in one way or another. Indeed, we may even lose the primary source material itself. Can anyone still read what Ollie North wrote about Iran/Contra on an obsolete IBM system called “Profs”?

So I’m going to go back to your construction metaphor and see if that helps us a little. First I’d argue that citation is the cement that holds together the bricks, not the ‘essential building block’ itself.

So once upon a time bricks and mortar was the prime mode of converting architectural plans into buildings. But new methods, new materials, new processes were invented. They allowed all sorts of new forms to be created. So the Shard in London would be unimaginable in a pure bricks-and-mortar world.

But houses are still by and large built from bricks and mortar. Or wood and nails in some locales.

And perhaps that’s the message. There will be superb new pieces of scholarly architecture built from new materials, beyond what we now recognize, that we can scarcely imagine.

But bricks and mortar (articles, chapters, citations) – they’re not done with yet and perhaps never will be.

I think this is a good point. We tend to see things as zero sum games. Something new must replace something old. Each new technology is an “iPhone-killer”, rather than an additive device. The research paper and the research monograph are highly evolved forms, meant to serve a particular purpose. The proposed change (constant updating) does not serve that purpose. So has the purpose become obsolete (hence replacement with something new that serves a different purpose) or are we talking about a different functionality here and something additive in the literature?

As a computer scientist, as well as writing papers, I also write software. The two things work in different ways. A paper is an artefact, a thing, that is unchangeable and reflects the point in time at which it was written. Software, on the other hand, is a process. You catch the bus at some point in its journey, and it can change its location. I often wonder whether we should have knowledge like this, rather than the stale environment of thoughts we once had that is scientific publishing.

Having said all of that, with software, its changeable nature does not mean we have no fixed points. In fact, in this environment, fixed points become pretty much essential. We call them releases. In the software process, we are keenly aware of the difference between a stable release and a snapshot. And we have extremely advanced tools for version control (called, er, “version control systems”; I don’t know if your title was deliberate or not).

I am not sure this is a new issue, though. Wikipedia happened a long time ago, and it copes well with change also. I am not advocating that, as scientists, we go there, but I think that publishing could learn a lot from both it and the software engineers about how to maintain stability in the face of change.

So interesting to think about “versions,” both theoretically and technologically (literary scholars of course have a lot to say about the former, and digital scholars about the latter). A lot of my post was about function– how does a version function in the scholarly conversation. The function of software releases seems to be very akin to a publication as David articulated it. It marks a moment, and a point of reference.

The question remains though whether rapid re-versioning facilitates or inhibits scholarly communication. I’m obviously arguing that in the case I cite it does the latter.

Not necessarily, I think. You cite my paper as support for your position. I withdraw my paper because I realise that it’s all wrong. I update my paper with a description of why it is wrong.

Now, for someone reading your paper, has this not improved communication?

Of course, this is not an either/or thing. I have had publishers correct typos in my papers before (introduced by them!). I guess few would object to this (although the author of a scientific study of typos might). So some changes are okay. Wholesale updating of the arguments? Well, we’d all think that is wrong, no doubt. But what about updating an analysis given the release of new data?

Do I really need to write an entirely new introduction, methodology and so on?

My conclusion: notions about “an academic paper” are flawed. Really, we need more than one form. Now that we are off paper (sort of) we can explore different kinds of publishing process and I hope that we do.

> Now, for some one reading your paper, has this not improved communication?

This is the key question. The point isn’t to preserve a format or practice because it’s how things have always been done, the point is to do what provides the best level of clear communication. Let’s use your example, you publish a paper that states A = B. I do the subsequent experiment and realize that in fact, A = C. Right now I publish the next paper, cite your original. Is it an improvement for you to then go back and change your original?

First, it seems to me that it would invalidate my citation. In my paper, I say that you claimed A = B, but when someone now goes to read your paper, it says A = C. Now I look like an idiot who didn’t understand your original work, and my subsequent discovery looks like it was swiped from your earlier paper. It also then throws out of context the 100 other papers that were written in between ours that all cited your A = B theory before my discovery. If I read one of those papers then go to yours, again, your paper now says something completely different than my citation claims it does. That to me does not sound like an improved level of communication. Once you change your paper, then every single paper that has cited your paper must then change to accommodate your change.

Would it be better, instead, to leave your original paper as is, but add a comment at the end noting my subsequent work and the different conclusion that now holds true? How much should you be allowed to change? Do your changes go through the peer review process?

> But what about updating an analysis given the release of new data?
>
> Do I really need to write an entirely new introduction, methodology and so on?

Sure, why not? Has the background in the field changed, has the motivation and theory behind the paper changed? If so, you need a new introduction. Has your methodology changed? If so you need to write that up. Is it a new and original contribution to the literature? Then why shouldn’t it stand as a new paper? Why is an updated version of an incorrect paper better than a new, correct paper?

And given the way career credit and funding is allocated, aren’t you better off publishing a new contribution to the field than adding a footnote to an old one?

If your citation is “as of” then the change does not invalidate it. Moreover, writing a new paper when the old one is found to be incorrect leaves the incorrect version to be found and believed, including via your citation! What is clear is that a versionless literature would be very different from what we have now. Little else is clear. Revolutions are like that. (Sorry to keep saying this but revolutions are my field.)

There is zero chance of journals going version-less. Whether there is a market for version-less journals is a different question, and far more interesting in my view.

If the purpose of the primary literature is to represent the best understanding at the time or to tell the story of a set of experiments, then future discovery does not invalidate it as historical record. The literature is almost entirely “incorrect” by your definition, as knowledge moves forward. Perhaps the mistake is in reading the primary literature as a means to understand comprehensive and current knowledge of a field. Do you expect a 20 year old medical paper to still reflect current clinical practices? Is it dangerous that we allow it to continue to exist in its original form? Must we go back and update the writings of Archimedes and da Vinci because they are now incorrect and out there to be found and believed?

And no one has even attempted to address my peer review question. Who’s going to review all these updates and corrections for accuracy?

Suppose on the other hand that the purpose of the primary literature is to present our best understanding. If not then where is that to be found? How do you propose to communicate the discovery of prior error? I hope the purpose of the present system is more than the creation of historical artifacts.

But you are ignoring my last paragraph. I see the version-less report as an addition to the classical journal article, not a replacement. The present, piecemeal approach leaves out a lot of the reasoning, which is what the primary literature should capture. If I publish four articles reporting A, B, C & D, what is missing? At least two things. First, why I went from A to B, etc. Second, given I got to D what do I now think about A, etc.?

The journals are basically personal diaries and the way they are done now there is a lot missing that an ongoing narrative might reveal. I wager that most of what we know is between the lines of the present system. I have studied this at great length and it is very troubling.

Part of the purpose of the primary literature is to present our best understanding, but it represents the best understanding at the time the paper was written. I propose to communicate prior error through retractions, corrections or publishing subsequent papers. Once a particular set of experiments is concluded, aren’t they historical and shouldn’t they be treated as such?

Let’s look at a landmark paper, Watson and Crick’s 1953 model for the structure of DNA (http://www.nature.com/nature/dna50/watsoncrick.pdf). Should Watson have spent the last 62 years constantly updating this paper with every new discovery about DNA that has been made? What sort of monstrosity would the paper now be? Would it be thousands of pages long? Hundreds of thousands? Should he have had to do this constant updating for every single one of the thousands of papers he has published over his lifetime? If so, when would he have had any time to do any research?

If you were new to science and wanted to learn about DNA, would you go to this mess of 62 years of annotations? Why choose that one rather than say, Meselson and Stahl’s 1958 paper on DNA replication–it’s five years more modern and presumably would have been constantly updated in the same manner over the years?

Or would it instead make more sense to read a recent review article or a recent textbook chapter? Those are different types of literature that better serve the purpose you are seeking than the primary literature, where the main purpose is to report on a set of experiments/present a new argument.

There are entire journals dedicated to publishing just review articles and new review articles come out regularly in most fields. Though most do not offer the personal anecdotes you seem to be seeking. Those are preserved in historical works and biographies (see http://www.genestory.org/ as an example).

We seem to have different ideas about the version-less paper. I think an ongoing report of Watson’s thinking would be ongoingly useful to the field (not to novices), and very different from a series of reviews. If I were new to the science I would start with Google, not a 1958 paper.

It is useful, and you can get it from reading his latest book or review article. Asking him to annotate and update every single one of thousands of previously published papers, however, seems a remarkably inefficient way to get this information out, and a remarkably confusing way to find it.

Most of the problems associated with change go away if you think of a paper as an artefact in the first place. In a software environment, if you depend on A==B, then you just stick with that version of “paper” i.e. piece of software. But, of course, you are depending on old software. Sooner or later you will have to update.

In short, with computational knowledge (i.e. software) we have to think explicitly about change management and how we adapt to changes made to things we depend on outside our control.

You are entirely correct that in terms of career brownie points, yes, you are better off publishing a new paper (not a new contribution, no one cares about contributions to the field, just about papers). This is why people who maintain databases write papers every year where they struggle not to write “we do the same as we did last year, but with more data”. Publishing is not the only area of science which is anachronistic and not fit for purpose.

If you need to update your software, is it better to go back to the original software that has had a bunch of new material tacked onto it or would it be better to start with a new, clean, modern version of that software? Would you want all that legacy code to remain in there or would you rather start fresh?

Similarly, if you want to know about the state of discovery in field X, is it better to go to the latest papers in that field, or would it be better to deal with a 20 year old paper that had 50 different kludgy versions made over the last few decades?

Is versioning in science just “the next paper”? Is that worse than only publishing one paper in your lifetime and then constantly revising it?

If the “one paper” is a timely report of each step in the research, plus ongoing higher-level discussion of why the steps were taken and how the thinking was evolving, then it might be far more useful than 100 piecemeal papers published in dozens of journals. Of course it would be well over a thousand pages long at the end. Calling it one paper may miss the point.

I fail to see how scientific publishing is anachronistic and not fit for purpose, quite the contrary. Nor is software computational knowledge, although it may be based on such. In any case, change management is something done by projects and individual firms, not by communities. Revolutions cannot be managed. We simply do not know where these ideas are going, which is what makes it fun.

Not fit for purpose? Oh, well, scientific publishing is slow, the knowledge in peer review is mostly lost, it’s expensive, it’s only just starting to catch up with the existence of the web, presentation is largely static; some journals still charge extra for colour! Journals use DOIs to put the weight of maintaining static URLs on the authors. Author names are only just now becoming standardized. Different journals have different style requirements, requiring authors to re-write papers constantly. Reviews from one journal are lost when the paper is bounced. Journals still like to use nonsense like IF as a badge of quality. Reviewers are overloaded, so reviews are rarely useful. It’s Saturday morning, so I’ve run out of ideas rather quickly but you get the idea.

I think that you have ideas about change management backward. Of course, it’s supported by tooling, but understanding change and how to adapt to it is more or less entirely a feature of the community.

Whether it facilitates or inhibits is too simple a question. First, it will occur where it is most useful. I seriously doubt that journal articles will ever see a versionless version (but a living summary of one’s research to date might be very useful). On the other hand, Google docs projects are already very useful. But then even where it facilitates it also inhibits. One thing that happens in Google docs projects is that newcomers raise issues that have already been thrashed out, because they lack the history. Every way of doing things has a downside.

A better question might be how best to proceed, given this new capability? Where and how shall we apply it?

Here’s yet another vantage on versions. Several above have noted the importance of the historical record, meaning the point of departure for the next work (whether one’s own or someone else’s).

But how about the actual *historical record* as in the material that documents the knowledge production of and in a particular moment/ context? If David’s op cit revises to A=C, we won’t know that A=B was substantially in play. That’s important for the discipline of which that debate is a part, but it’s also important some years hence when we look to the evolution of thought.

Also, thanks to the keen (editorial!) eye who caught that I was apparently claiming to be a scholar of “amen, family, and gender…” when I really did mean to indicate that I am a historian of “women, family, and gender.” But unless you’ve taken a screen shot, or I suppose if you can access and parse the metadata, you’ll never know.

People who collect rare books, as I once did, are familiar with the terminology of “editions” and “issues” and “impressions” and “state”: http://www.biblio.com/book-collecting/basics/what-are-points-of-issue/. E.g., I own three first editions of Hobbes’s Leviathan, but they are different issues. When new printings were done, often small changes were made to correct errors in the previous text, but these were relatively insignificant and generally did not affect the content and meaning of what was written. They are, however, different versions of the original work and had different value in the marketplace for collectors. This practice continued into the modern era of printing, and when paperbacks were introduced, they often contained such minor corrections too, plus, sometimes, a “preface to the paperback edition.” Occasionally, the opportunity was taken to add a postscript which, however, generally was not so major a change as to justify calling the new version a different edition. Because of the limitations of print, such changes were usually made at the beginning and end of books, so as not to require the costly process of repagination throughout. There was always a continuum on which such changes existed, though, and there could be arguments about how much change was needed to call a new version a second or third edition. The digital age has not changed this system of versioning so much as it has added to the range of possibilities, making changes anywhere in a text much cheaper to make and repagination automatic. One might suppose that the changed version of the History Manifesto online would constitute a new edition, while ongoing changes to a posted work might not rise to that level but be more like new issues. In the print world these changes were sometimes noted, but often not, except when they rose to the level of new editions. Later printings might contain any number of small corrections not noted anywhere in the book but simply “silently” done.
There is, though, one kind of dynamic, evolving document that was described by Robert Darnton in his “The New Age of the Book” and exemplified by his own ongoing project in book history arising from what he found in the rich archive of a printer in Neuchatel. This kind of multilevel, multidimensional document could be constantly undergoing change at any level and in any dimension, in a way that might make distinctions between issue and edition otiose. But so far we have seen very few of this new kind of document. Also in this category might be ongoing projects like the Perseus Project and the Valley of the Shadow about the Civil War, or even online reference books like encyclopedias that could be undergoing constant change.

P.S. By the way, the digital age has probably made it impossible to say that any book is “rare” anymore. So much for rare book collecting!

How should doctoral programs and scholarly societies address the issues of publication in a digital age?

Late to the conversation! In my view, version control is fundamental to the entire process of pre-publication, in-publication, and post-publication… we just aren’t there from a technology perspective yet. Having a strict versioning system allows people to exchange entire sets of files (the article and all its inputs together) unambiguously. Modern versioning systems calculate a cryptographic fingerprint (a hash) of the content tree. The history is simply a linked list of these fingerprints (timestamps embedded), each one incorporating the fingerprint before it, so no earlier version can be altered without visibly changing every fingerprint that follows. Using this fingerprint as a citation key is a natural way to refer to a specific version of the document (or file directory), but it is one that publishing has not adopted yet. The problem is that these systems are still too technical for most of the general public.
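To make the fingerprint idea concrete, here is a minimal sketch in Python of how such a chain might work. It is only an illustration of the general technique (content hashing with each version pointing at its parent), not how any particular versioning system is implemented; the function names `fingerprint_tree`, `commit`, and `verify`, the record layout, and the use of SHA-256 are all assumptions made for the example.

```python
import hashlib
import json


def fingerprint_tree(files):
    """Fingerprint a set of files given as {path: bytes}.

    Paths are sorted so the same content always yields the same hash.
    """
    h = hashlib.sha256()
    for path in sorted(files):
        blob = hashlib.sha256(files[path]).hexdigest()
        h.update(f"{path}\0{blob}\n".encode())
    return h.hexdigest()


def commit(files, parent, timestamp, message):
    """Create a version record whose id covers content, parent, and timestamp."""
    record = {
        "tree": fingerprint_tree(files),
        "parent": parent,  # id of the previous version, or None for the first
        "timestamp": timestamp,
        "message": message,
    }
    payload = json.dumps(record, sort_keys=True).encode()
    record["id"] = hashlib.sha256(payload).hexdigest()
    return record


def verify(history):
    """Check that every record hashes to its id and links to its predecessor."""
    parent = None
    for rec in history:
        body = {k: v for k, v in rec.items() if k != "id"}
        payload = json.dumps(body, sort_keys=True).encode()
        if rec["parent"] != parent:
            return False
        if hashlib.sha256(payload).hexdigest() != rec["id"]:
            return False
        parent = rec["id"]
    return True


# Illustrative data only: two versions of a one-file "article".
v1 = commit({"article.txt": b"A = B"}, None, "t1", "first posting")
v2 = commit({"article.txt": b"A = C"}, v1["id"], "t2", "revised claim")
history = [v1, v2]
```

In this sketch, `v2["id"]` is the kind of string one could cite to mean "exactly this revision", and `verify(history)` fails if anyone edits an earlier record after the fact, because the stored id no longer matches the recomputed hash.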

My recommendation is to stop thinking of the article as a dead artefact and start thinking of it as a conversation. Then the frame changes dramatically and questions like ‘what is a version’ and ‘do we version comments’ become a little redundant.

This changes the purpose served by the research article from providing a brief summary describing a discrete set of experiments into something entirely different, an ever-changing overview of the current state of knowledge. Both are useful things, but must we think in terms of them being mutually exclusive (one must replace the other)? Why can’t we have both things?

Further, what is the motivation for a researcher to participate in that “conversation”? Career advancement and funding are given to those whose own work advances a field and presents new discoveries. Under the current academic structure, there is likely very little advancement to be achieved by commenting on someone else’s article, and likely very little reward given to a researcher for updating an old article in lieu of writing a new article.