With so much new literature published each year, why are authors increasingly citing older papers?

Late last year, computer scientists at Google Scholar published a report describing how authors were citing older papers. The researchers posed several explanations for the trend that focused on the digitization of publishing and the marvelous improvements to search and relevance ranking.

However, as I wrote in my critique of their paper, the trend to cite older papers began decades before Google Scholar, Google, or even the Internet was invented. When you are in the search business, everything good in this world must be the result of search.

In order to validate their results, the helpful folks at Thomson Reuters Web of Science sent me a dataset that included the cited half-life for 13,455 unique journal names reported in their Journal Citation Report (the report that discloses journal Impact Factors). Rather than relying on the individual citation as the unit of observation (the approach used by Google Scholar), we base our analysis on the cited half-life of journals. This approach has the obvious advantage of scale, allowing us to approach the problem using thousands of journals rather than tens of millions of citations.

In order to approximate a citation-based analysis, each journal was weighted by the number of papers it published, so that small quarterly journals don’t have the same weight as mega-journals like PLOS ONE. Each journal was also classified into one or more subject categories and measured each year over the 17-year observation period. Our variable of interest is the cited half-life, which is the median age of articles cited in a given journal for a given year. By definition, half of the citations counted for a journal in a given year go to articles older than the cited half-life; the other half go to younger articles. The concept of half-life can also be applied to article downloads.
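To make the two quantities concrete, here is a minimal Python sketch. It assumes that a cited half-life is simply the median age of the cited items (the JCR calculation interpolates between whole years, which this sketch omits) and that the cross-journal average is weighted by paper counts as described above. The function names and the two example journals are hypothetical.

```python
from statistics import median


def cited_half_life(cited_ages):
    """Median age, in years, of the items cited in a given year.

    Simplified: no interpolation between whole years.
    """
    return median(cited_ages)


def weighted_mean_half_life(journals):
    """Mean cited half-life across journals, weighting each journal by its paper count."""
    total_papers = sum(j["papers"] for j in journals)
    return sum(j["half_life"] * j["papers"] for j in journals) / total_papers


# Hypothetical data: one very large journal and one small quarterly journal
journals = [
    {"name": "mega-journal", "half_life": 4.0, "papers": 30000},
    {"name": "small quarterly", "half_life": 10.0, "papers": 50},
]
print(cited_half_life([1, 2, 2, 3, 5, 8, 13]))  # 3
print(round(weighted_mean_half_life(journals), 2))  # 4.01
```

Weighting pulls the average toward the large journal (about 4.01 years here). An unweighted mean of the same two journals would be 7.0 years.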

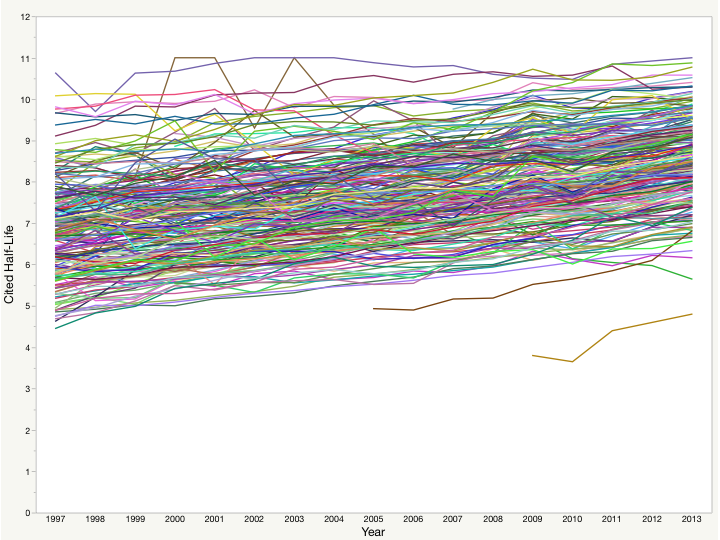

For the entire dataset of journals, the mean weighted cited half-life was 6.5 years, which grew at a rate of 0.13 years per annum. For those journals that had been indexed continuously in the dataset over the 17 years, the mean weighted cited half-life was 7.1 years, which grew at the same rate. For the newer journals, the cited half-life was just 5.1 years, but grew at a rate of 0.19 years per annum.
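The post does not say how the per-annum growth rates were estimated. One common choice, assumed here, is the slope of an ordinary least-squares line through the yearly (weighted) half-lives. The following minimal sketch uses a made-up, perfectly linear series:

```python
def annual_growth(years, half_lives):
    """Ordinary least-squares slope of half-life on year (years of half-life per year)."""
    n = len(years)
    mean_x = sum(years) / n
    mean_y = sum(half_lives) / n
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(years, half_lives))
    sxx = sum((x - mean_x) ** 2 for x in years)
    return sxy / sxx


# Hypothetical series: 17 annual observations (1997-2013) rising 0.13 years per year from 5.0
years = list(range(1997, 2014))
half_lives = [5.0 + 0.13 * (y - 1997) for y in years]
print(round(annual_growth(years, half_lives), 2))  # 0.13
```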

Focusing on the journals for which we have a continuous series of cited half-life observations, 91% (209 of 229) of subject categories experienced increasing half-lives. Some of these categories grew significantly more than average. For example, Developmental Biology journals grew at 0.25 years per annum, Genetics & Heredity journals grew at 0.20 years per annum and Cell Biology journals grew at 0.17 years per annum.

Conversely, the cited half-life of 20 (9%) of journal categories decreased over the observation period. With few exceptions, these categories fell within the general fields of Chemistry and Engineering. For example, the cited half-life for journals classified under Energy & Fuels declined by 0.11 years per annum, Chemistry-Multidisciplinary by 0.07 years per annum, Engineering-Multidisciplinary by 0.05 years per annum, and Engineering-Chemical by 0.04 years per annum. Granted, these declines are smaller than the increases noted above, but they do run contrary to the overall trend.

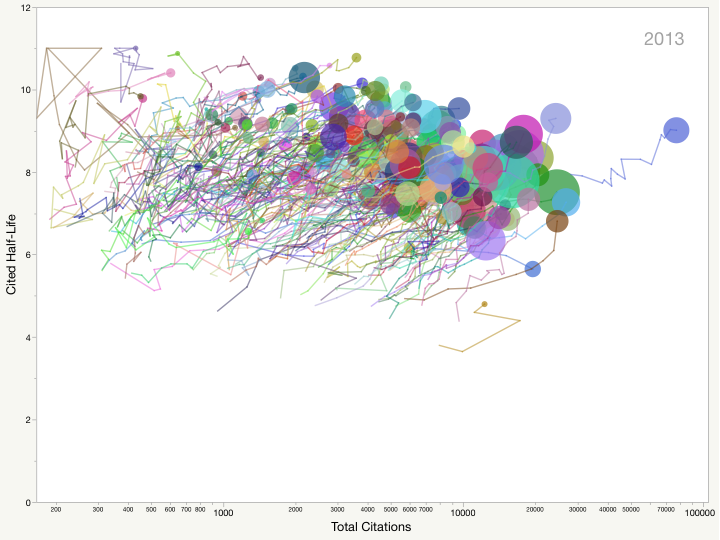

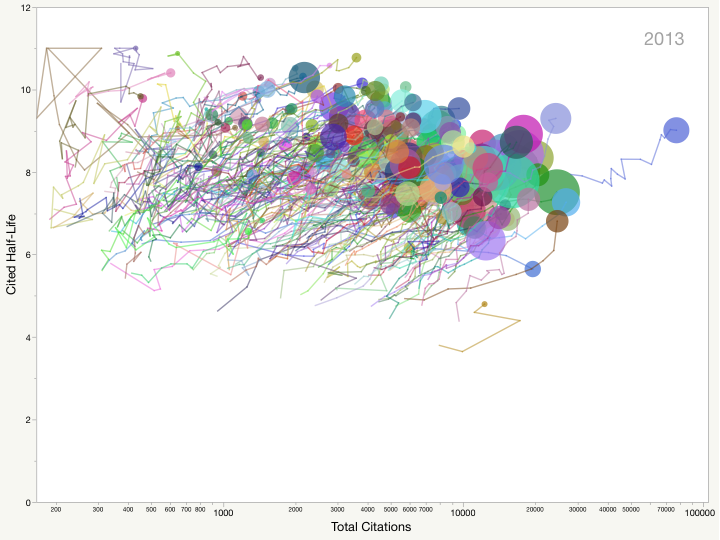

We also discovered that cited half-life increases with total citations, meaning that as a journal attracts more citations, a larger proportion of those citations target older articles. This can be seen in Figure 2, as journal categories move from the bottom left to the upper right quadrant of the graph over the observation period.

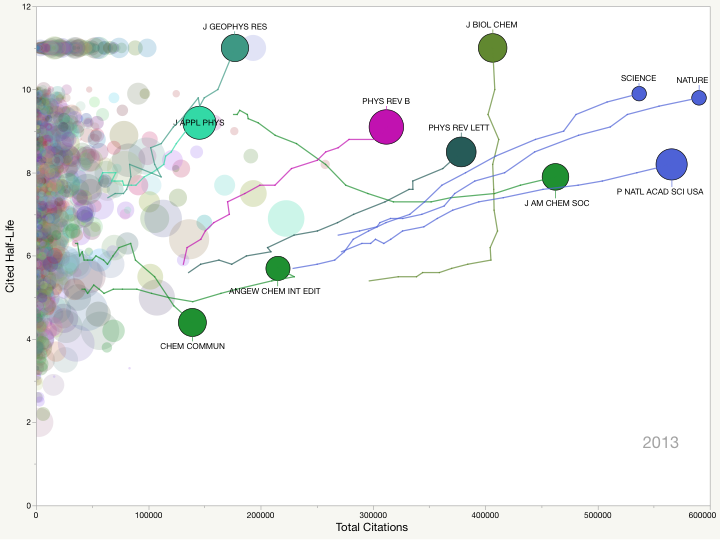

The next figure highlights the trajectory of highly-cited journals from 1997 to 2013, illustrating how cited half-life increases with the total citations to a journal. While most highly-cited journals move toward the upper-right quadrant of the graph, we highlight three chemistry journals that run contrary to this trend: Journal of the American Chemical Society, Angewandte Chemie-Int Ed., and Chemical Communications. Those readers wishing to speculate why Chemistry and Engineering journals were bucking the overall trend are welcome to do so in the comment section below.

Readers are also welcome to explore the data (for categories and for journals). The files (.swf) require the Adobe Flash plug-in. Mac users may need to hold the Control key and select their browser when opening these files. Categories may be split into component journals. Other controls adjust the size, speed, and display of the data.

In sum, we were able to validate the claims by the Google Scholar team that scholars have been citing older materials, with some exceptions.

The citation behavior of authors reflects cultural, technological, and normative behaviors, all acting in concert. While digital publishing and technologies were invented to aid the reader in discovering, retrieving, and citing the literature, the trend appears to predate many of these technologies. Indeed, equal credit may be due to the photocopier, the fax machine, FTP, and email as is given to Google, EndNote, or the DOI.

Nevertheless, a growing cited half-life might also reflect major structural shifts in the way science is funded and the way scientists are rewarded. A gradual move to fund incremental and applied research may result in fewer fundamental and theoretical studies being published. Giving credit to these founders may require that authors cite an increasingly aging literature.

Correction note: Table 1 of the manuscript “Cited Half-Life of the Journal Literature” (arXiv) contains a sorting error. A corrected version (v2) was submitted and will go live at 8pm (EDT). Thanks to Dr. Jacques Carette, Dept. of Computing and Software at McMaster University, for spotting this error.

Discussion

26 Thoughts on "Why are Authors Citing Older Papers?"

This is interesting stuff. I wonder how big the availability effect is. Being able, as we now are, to search (even full text) and retrieve the full backfiles of journals, compared with browsing recent issues and volumes as we did, say, 20 years ago, may be influencing these patterns. Of course, this effect is dampened by authors’ decisions not to cite an article they deem too old or out of date. In recent years the effect is especially strong because of the hybrid way Google Scholar ranks results, with citations (and thus age) strongly affecting the ranking.

Another effect may simply be the growing historical volume of journals. All other things being equal, a journal with a 100-year history will probably have a longer half-life than a journal with a 20-year history, simply because there is a greater chance of encountering relevant older papers. This effect is especially strong for young journals whose available backfiles grew during the 1997-2013 research window from, for instance, 5 to 22 years (because relatively fewer topically relevant papers will be rejected for citation by authors because of their age). Consequently, there may also be an effect from the growth in the number of journals in the JCR, since many of the added journals have a short history.
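The history-length effect can be illustrated with a toy model. This is a hypothetical sketch, not a fitted model. It assumes that every year of a journal's back content is equally large and that the chance of a year's articles being cited decays exponentially with age (the 0.1 decay rate is arbitrary). Under those assumptions, truncating the available history is enough to shift the median cited age:

```python
import math


def median_cited_age(history_years, decay=0.1):
    """Median age of citations for a journal with `history_years` of back content.

    Hypothetical model: constant output per year, and the citation weight of a
    year's articles falls off as exp(-decay * age). Ages run from 0 to
    history_years - 1, so nothing older than the journal can be cited.
    """
    weights = [math.exp(-decay * age) for age in range(history_years)]
    half = sum(weights) / 2
    cumulative = 0.0
    for age, w in enumerate(weights):
        cumulative += w
        if cumulative >= half:
            return age


for h in (5, 22, 100):
    print(h, median_cited_age(h))
```

With these assumptions, the median cited age is 2 years for a journal with 5 years of history, 5 years at 22 years of history, and 6 years at 100 years. Citing behavior is identical in all three cases; only the length of the history changes.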

Availability may be creating a mild cultural shift. My research suggests that most citations occur early in an article, where the history of the problem is explained. Reviewers may simply be calling for more history, especially in scientific fields.

A quick comment on the culture of mathematics. I find it fascinating, really: once a theorem is proved, it stands for eternity. A proven theorem is essentially a building block for future research. This means that mathematicians’ articles often have long citation half-lives, a cultural dimension.

This is also largely true for science and engineering. Paradigms may be overthrown by revolution, but individual research results seldom are. So while we may lurch from paradigm to paradigm, there is also a steady accumulation of knowledge. Falsification seldom occurs at the project level. (This has implications for the data sharing issue as well.)

Advocates of what we have been calling “basic research” now want to call it “discovery research,” because basic research has become dull and more difficult to fund, while also becoming more politicized in many fields. Discovery research (discoveries that can blow open paradigms, make us rethink what’s possible or even probable, and lead to entirely new ways of pursuing science) has been underfunded for decades now. I’m tempted to believe much of this stems from the incrementalism of modern research, which is necessary but is not being matched by an equal rate of genuinely new discoveries.

The recent reviews of the first decades of the Hubble telescope show how funding breakthrough devices and approaches can yield all sorts of discoveries. In the case of Hubble, things like dark energy and the idea that black holes were at the center of every galaxy weren’t anticipated, but were discovered through Hubble. We need more of this in every field.

Classic papers can’t help getting older. Perhaps it takes time for most classics to get that status. Indeed it’s only by being cited over a long period of time, that they generally get this status.

So younger papers that fail to get cited enough early on find it hard to keep getting cited, and fall off. The good ones keep getting cited, continue to be cited as they move beyond the half-life, and keep being cited, perhaps out of habit, because they really are irreplaceable.

So say papers cite, on average, 30 other papers: 10 classics, 10 established mid-age papers, and 10 recent papers.

In five years’ time, the equivalent paper still cites 8 of those classics, plus 5 of the previously established papers that are now classics, plus 7 of the then-recent papers that are now mid-aged. That leaves 10 that are still recent. But the overall average age is likely to have increased, since 67% (20 of 30) of the cited papers are the same ones, now five years older.
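The arithmetic in this scenario can be checked directly. The ages assigned to each group below are hypothetical because the comment gives only the counts, so this sketch covers one case rather than establishing a general result:

```python
def mean_age(groups):
    """Mean age of a reference list given (number_of_papers, age_in_years) groups."""
    total = sum(n for n, _ in groups)
    return sum(n * age for n, age in groups) / total


# Hypothetical ages: classics 20 years old, mid-age papers 8, recent papers 2
before = mean_age([(10, 20), (10, 8), (10, 2)])
# Five years on: 8 retained classics (now 25), 5 former mid-age papers (now 13),
# 7 former recent papers (now 7), plus 10 new recent papers (2 years old)
after = mean_age([(8, 25), (5, 13), (7, 7), (10, 2)])
print(before, round(after, 2))  # 10.0 11.13
```

With these ages, the mean rises from 10.0 to about 11.1 years. The direction does depend on the assumed ages. If the classics were 40 years old instead of 20, dropping two of them would outweigh the aging of the retained papers, and the mean would fall slightly (from about 16.7 to 16.5 years).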

Can we test this? Can we examine whether citations to older papers are concentrated on fewer papers (fewer papers cited, but each more often), whereas younger papers show the opposite (more papers cited, but each less often)? This might be an effect of so many similar younger papers being published that only a select few can gain classic status.

Statistics can mask all sorts of effects.

Search and sort could also influence this behavior: papers with higher numbers of citations (often older papers) land on the first page of Google Scholar results, and on the first page of citation database results when the option to sort by citation count is available. More people citing a paper means more people see that paper, which means more people cite that paper. This drives the Matthew Effect: the rich (in citations) get richer.

It is also true that people are tweeting and posting and so re-invigorating older papers.

You can only legitimately cite a paper that you’ve seen. Anything that contributes to the discoverability and visibility of a paper creates a condition favorable to citation.

I’d love to see this: for each year of citing papers, one can determine the number of citations per cited paper for each year of age. You could also see whether citation concentration has changed by comparing across successive citing years.
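The proposed measure is straightforward to compute if citation-level data are available. This Python sketch assumes a hypothetical input of (citing year, cited year, cited paper ID) tuples. A value well above 1 for an age bucket means that citations to papers of that age are concentrated on a few papers:

```python
from collections import defaultdict


def citations_per_cited_paper(citations):
    """Map citing year -> {age of cited paper: citations / distinct cited papers}.

    `citations` is an iterable of (citing_year, cited_year, cited_id) tuples.
    """
    counts = defaultdict(lambda: defaultdict(int))
    papers = defaultdict(lambda: defaultdict(set))
    for citing_year, cited_year, cited_id in citations:
        age = citing_year - cited_year
        counts[citing_year][age] += 1
        papers[citing_year][age].add(cited_id)
    return {
        year: {age: counts[year][age] / len(papers[year][age]) for age in sorted(counts[year])}
        for year in sorted(counts)
    }


# Toy data: one 20-year-old paper cited three times, three 1-year-old papers cited once each
toy = [
    (2013, 1993, "A"), (2013, 1993, "A"), (2013, 1993, "A"),
    (2013, 2012, "B"), (2013, 2012, "C"), (2013, 2012, "D"),
]
print(citations_per_cited_paper(toy))  # {2013: {1: 1.0, 20: 3.0}}
```

Comparing the inner dictionaries across successive citing years would show whether the concentration on older papers has changed.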

I think this is an excellent point, Marie. I also wonder whether this may reflect poor search strategies among newer researchers in emerging markets. In my experience working with many of these authors, they often restrict their literature search to Google Scholar (free) and review articles (which often cite older papers). Because of the citation bias in Google Scholar (and the fact that it indexes too broadly, i.e., non-peer-reviewed material), I usually recommend that younger authors use stricter databases that are both broad (like Web of Science and Scopus) and field-specific (like PsycINFO, PubMed, SciFinder, etc.).

I think educating younger researchers, particularly in emerging markets, in how to properly find recently published, quality articles related to their topic is essential to doing good science (identifying current trends and relevant research questions).

Older articles are more accessible today than they have ever been, thanks to several factors. One is publishers’ own archive digitisation programmes: I have been involved in two major retro-digitisation programmes over the last 10-15 years, one in physics going back to 1874 and another in healthcare going back to the late 60s. Another is libraries’ continued drive and desire to provide access to older materials for researchers where possible, often made possible by last-minute or year-end funding. Digitisation of retrospective journal indexes (e.g. INSPEC, BNI etc.) also helps the postgrad or librarian doing the discovering, if not the author themselves. Lastly, but by no means least, CrossRef has provided a huge service for access to retrospective literature. Many publishers will quite sensibly include the one-time cost of uploading their DOIs to CrossRef when building the budgets for their own journal archive digitisation projects.

It’s amazing to see quite how heavily older material is used compared with current literature.

Digitizing and making older content accessible, particularly in search, is certainly a factor. But, just because it’s there doesn’t mean people will cite it. Clearly there is value to the older content, not just in downloads but also in the “thumbs up” citation. This is encouraging for publishers looking to include backfile content in their digital archives.

Back in the good old days, even before Current Contents, at least in the chem/physics arena, it was important to cite key early papers as foundational (e.g. the works of Einstein), as a way of validating your work and, perhaps equally important, so that others searching for that key paper would find yours because of the citation. That got you more requests for reprints and raised your article’s prestige and thus your rank in the community. It was, in many ways, an early version of “gaming” the system. Of course, your work did stand on the shoulders of those who came before, sort of.

This is very interesting, Phil. In the spirit of “When you are in the X business, everything good in this world must be the result of X,” I also wonder whether new services that enable researchers to breathe new life into their older publications, by connecting them to later work and developments in the field and explaining how the one influenced the other, could lead to more citations of older work. (Probably not yet, but it might be another variable to factor into future analyses of this kind.)

While the growth in the fraction of older citations has indeed been occurring over a long period, the growth accelerated during the period in which digitization of archives brought a treasure trove of long-hidden scholarship to light and relevance-ranked full-text search made it easy to dig deep into it. You can see this in Fig 1 of our paper. It is also summarized as one of the conclusions of our article:

> Second, for most areas, the change over the second half (2002-2013) was significantly larger than that over first half (1990-2001). Overall, the increase in the second half was double the increase in the first half. Note that most archival digitization efforts as well as the move to fulltext relevance-ranked search occurred over the second half.

To quantify this at a somewhat more granular level, I recently computed percentiles. Of the total growth in the fraction of older citations over 1990-2013, the first one-third occurred over about 12 years (1990-2002), the next one-third over 7 years, and the final one-third over the last 4 years.
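The percentile calculation described here can be sketched as follows, assuming a yearly series for the fraction of older citations that rises monotonically. The series below is invented (a quadratic, i.e. accelerating, curve) and is not the Google Scholar data. It shows how accelerating growth produces successively shorter intervals for each third of the total change:

```python
def tercile_crossings(years, values):
    """Return the first years by which growth since the start reaches 1/3 and 2/3 of the total."""
    start = values[0]
    total = values[-1] - start
    crossings = []
    for k in (1, 2):
        target = total * k / 3
        # First year whose cumulative growth meets or exceeds the target
        crossings.append(next(y for y, v in zip(years, values) if v - start >= target))
    return crossings


years = list(range(1990, 2014))
values = [(y - 1990) ** 2 for y in years]  # hypothetical accelerating series
first, second = tercile_crossings(years, values)
print(first - years[0], second - first, years[-1] - second)  # 14 5 4
```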

Digitization of journal archives has been a great achievement by scholarly publishers. It has made it possible, easy and indeed expected for researchers to dig deeper into the scholarly record. As someone who grew up in a place with limited libraries, the ease of being able to search over such a large collection is magical.

As for why researchers dig deeper now than before, the obvious answer (because it is so easy to do) is more likely than other, more complicated causes. That which is useful and easy to do gets done a lot more. We all do this, and do it all the time. Consider how often you use maps, write notes, publish short notes (like this one), look up information and so on compared with 20 years ago…

What is the contribution of review articles in these data sets? I would assume that with the increasing number of scientific articles being published (from the US and the world) that more reviews are being written. In my experience, reviews tend to cite older literature.

Clay, good question. The data set is based on the journal level, not the article level. But I could take a first cut of titles with the word “Review” in it and see if they perform any differently.

Certainly agree that in your dataset it may be difficult to discern the contributions of journals that publish more reviews. If I recall correctly, reviews were treated like articles in the Acharya datasets, so it should be possible to discern them there.

Also, I’m not sure pulling titles with “review” in them will help, at least for oncology many don’t include “review” in the title.

This is interesting but it would seem to me to require research into the amount and type of research as well as funding.

We are glad to learn that the results offered by Phil Davis’ work match the ones we presented earlier this year (http://arxiv.org/abs/1501.02084), even though the designs of the studies differ slightly (we used the Aggregate Cited Half-Life for the 220 subject categories present in the Journal Citation Reports and limited the study to the period from 2003 to 2013, while Davis’ work analyzes a longer period). Nevertheless, probably the most interesting addition to this debate is that we discussed the causes that might explain this phenomenon. We pointed out three causes, in this order:

The first factor that should be considered has already been studied extensively: the relation between the exponential growth of scientific production and the pace of obsolescence. If we know that growth and obsolescence are closely related, what does the increase in citations to old documents reported in this study mean? Does it mean that we are in a period of slow scientific growth? In other words, has science stopped growing exponentially as it did in previous periods? Or is today’s scientific production of lower quality, not providing as many new discoveries and techniques? These are all interesting as well as disturbing questions (http://goo.gl/HAk8xt).
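The link between growth and obsolescence can be made concrete with a deliberately stylized model, assumed here for illustration and not taken from the commenters' analysis. Suppose annual output grows exponentially at rate $g$, and a paper written at time $T$ draws its references from each past year in proportion to what was published that year, with no other aging. The reference-age density and its median then follow directly:

```latex
N(t) = N_0 e^{g t}
\quad\Rightarrow\quad
p(a) \propto N(T - a) \propto e^{-g a}
\quad\Rightarrow\quad
p(a) = g\, e^{-g a}, \qquad a \ge 0

\int_0^{a_{1/2}} g\, e^{-g a}\, da = 1 - e^{-g a_{1/2}} = \tfrac{1}{2}
\quad\Rightarrow\quad
a_{1/2} = \frac{\ln 2}{g}
```

In this toy model, the median age of references is inversely proportional to the growth rate. A slowdown in growth would therefore lengthen half-lives on its own, which is the kind of connection the comment is pointing to.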

The second factor is the one the Google Scholar team pointed out in their work, and now also here: advances in information and communications technologies have increased accessibility to old documents. These arguments seem quite reasonable, and they are supported by the changes in scientists’ reading habits detected by Tenopir & King (http://goo.gl/WTgZL9): “the age of articles read appears to be fairly stable over the years, with a recent increase in reading of older articles. Electronic technologies have enhanced access to older articles, since nearly 80% of articles over ten years old are found by on-line searching or from citation (linkages) and nearly 70% of articles over ten years old are provided by libraries (mostly electronic collections)”.

The third factor that should be considered is the influence of Google Scholar on these changes. It is undeniable that Google Scholar has revolutionized the way we search for and access scientific information. A clear manifestation of this is the way results are nowadays displayed in most search engines and databases, a key issue that determines how a document is accessed, read, and potentially cited. Under the “first results page syndrome”, users are increasingly accustomed to accessing only the documents displayed on the first results pages. In Google Scholar, as opposed to traditional bibliographic databases (Web of Science, Scopus, Proquest) and library catalogues, documents are sorted by relevance and not by their publication date. Relevance, in the eyes of Google Scholar, is strongly influenced by citations (http://goo.gl/bqrwgs, http://arxiv.org/abs/1410.8464).

Google Scholar favours the most cited documents (which also tend to be the oldest documents) over more recent documents, which have had less time to accumulate citations. Although GS does offer the option of sorting and filtering searches by publication date, this option is not used by default. Traditional databases, on the other hand, do the exact opposite: to prioritize novelty and recency (the criterion they have always assumed users will be most interested in), they sort results by publication date by default, allowing users to select other criteria if they are so inclined (citations, relevance, name of first author, publication name, etc.). So the question is: is Google Scholar changing reading and citation habits because of the way information is searched and accessed through its search engine?
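The effect of the default sort order on what users see can be illustrated with a toy corpus. This sketch ranks purely by raw citation count as a stand-in for citation-influenced relevance (Google Scholar's actual ranking is not public). Every paper and count below is hypothetical:

```python
def mean_age_of_first_page(papers, sort_key, current_year, page_size=10):
    """Mean age of the top `page_size` results after sorting by `sort_key`, descending."""
    first_page = sorted(papers, key=sort_key, reverse=True)[:page_size]
    return sum(current_year - p["year"] for p in first_page) / len(first_page)


# Hypothetical corpus: one paper per year, 1994-2013, with older papers having more citations
papers = [{"year": y, "citations": 5 * (2013 - y)} for y in range(1994, 2014)]

by_citations = mean_age_of_first_page(papers, lambda p: p["citations"], 2013)
by_date = mean_age_of_first_page(papers, lambda p: p["year"], 2013)
print(by_citations, by_date)  # 14.5 4.5
```

The corpus is identical in both cases; only the sort key differs. Even so, the first page is on average ten years older when results are ranked by citations.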

I think Phil has said that this trend is far too old to be due to Google Scholar, or even to the Web. The Internet was fielded in the scholarly community in the 1970s, and other availability technologies have emerged since. GS might have helped with a recent acceleration, if there really has been one. My conjecture is that the trend has been toward adding more history to the article’s opening background section, simply because of the increase in availability. There may be nothing particularly deep in this trend.

The explanation may be simple: life sciences had a boom period from the late 70s to early 2000s – molecular biology revolution, genome sequencing, etc. A lot of path-breaking, field-opening papers were published then. We are still productive and making progress, but we are making that progress in the same fields so we all cite those papers that opened those fields.

Thanks for a very nice analysis that is provoking much valuable discussion. Amongst other things, it helps to give the lie to Impact Factor [sic] with its very narrow time window (citations within two years of publication). Many of us in research areas where papers typically have longer ‘half-lives’ rightly bemoan the grip the benighted Impact Factor still has on the science publishing and funding world. This article helps to reveal another aspect of the Impact Factor’s irrelevance to assessing real impact, long-term significance, or truly ground-breaking work, or to measuring ‘esteem’ in the community of researchers. Much food for thought!

In chemistry, the reduced citation half-life of multidisciplinary journals might have to do with the growing percentage of life sciences-related content these journals publish. Since life sciences have a shorter citation half-life than traditional chemistry areas such as organic chemistry, that might bring the averages for chemistry journals down.