Editor’s Note: Today’s post is by Rachel Caldwell. Rachel is scholarly communication librarian at University of Tennessee Knoxville. She has a dual Master’s degree in Information Science and Library Science from Indiana University Bloomington. Prior to her position in scholarly communication, Rachel worked in museums and as an instruction librarian.

No library can subscribe to every publication that might be of interest to its communities. Most academic libraries base collections and resource allocation decisions on quantifiable metrics, such as cost per use. These are important considerations, but they are not holistic. Traditional, quantifiable, collections-based metrics overlook a wide range of important aspects of relationships between academic libraries, their institutions, and suppliers of one kind or another. One such aspect is whether the business practices of a supplier/vendor support or match the mission and values of the library.

Many vendors supplying academic libraries with collections and other resources engage in practices that are not only markedly out of step with the values of libraries but also misaligned with the broader values of many public institutions of higher education (HE). In North America, this is especially true among land-grant colleges and universities, including both public and private institutions, whose missions and values prioritize conducting research for the public good and providing broad access to education. Publishers and citation index providers are important examples of vendors often out of sync with such values, not least because of the tremendous amounts of money institutions of HE pay them. Journal publishers are particularly visible because of their work with faculty as authors, the role of journal metrics in retention, tenure, and promotion (RTP) decisions, and the recent spate of libraries canceling their “big deal packages.” All of this leads to a great deal of tension between journal publishers, authors, institutions, and libraries, often centered on “quality” of publications (using the Impact Factor as a measure) versus “costliness” of access, including not only subscriptions but also open access article processing charges (APCs).

To address this misalignment, libraries would benefit from evaluating how well a publisher’s practices align with the values of libraries, public and land-grant institutions, and some learned societies. Such a system would do several things. For example, it would inform library decision-makers about licensing choices, as well as aid in communication with a library’s campus community about the nature of the decisions it may face. To be clear, such an evaluation system would not be used by the typical institution as the sole metric for licensing decision-making, nor would it be used only in licensing decisions.

In a recent article, I have proposed such a system provisionally: Publishers Acting as Partners with Public Institutions of Higher Education and Land-grant Universities (PAPPIHELU, or PAPPI for short). It is important to note that this system has not been vetted or used by any library or institution. It is a provisional system intended to provide an example of how values-based metrics could be applied to journal publishers. The evaluation criteria are based on values widely held by libraries, land-grant institutions of HE, and some learned societies. Notably, these societies serve primarily as a proxy for researchers who often share many of the same values as libraries and HE institutions. Under the PAPPI evaluation system, journal publisher practices in ten different categories earn points toward an overall score that reveals how well the publisher’s practices align with the values of libraries, land-grant institutions, and some learned societies.

In other words, this provisional system evaluates how well a journal publisher acts as a partner with land-grant institutions and libraries. The partnership criteria stem from three key values identified by examining the histories and missions (including those of relevant professional organizations) of the three entities:

- Democratization of information and education emphasizes the value of public access to knowledge held by libraries and institutions, especially land-grant institutions. PAPPI criteria relating to this value include public access and open access (OA) to scholarship/research (and not just through immediate “gold” or “diamond/platinum” OA publishing); history of politically lobbying against government policies meant to broaden access to research/scholarly literature; and permitting reuse of articles for noncommercial educational purposes.

- A second value, information exchange, relates to making research/scholarship findable and shareable through open, interoperable metadata and long-term, non-profit-run preservation. Information exchange ensures that literature reviews are well-informed, leading to solid studies and scholarship; thus, many learned societies share this value with academic libraries. Criteria related to this value include the following: the publisher voluntarily contributes metadata to repositories and indexes; metadata is OA; authors are identified with ORCIDs; journals are archived or preserved with CLOCKSS, Portico, or a similar service.

- Finally, all three entities (academic libraries, land-grant institutions of HE, and some learned societies) value the sustainability of scholarship as a scholarly enterprise. This value relates to the agency of authors and institutions and is especially important in evaluating whether a publisher acts as a partner. Related criteria include not only reasonable article processing charges (APCs) but also policies that allow researchers at any institution to contribute to the scholarly record if their work meets peer-review standards; researchers retaining agency over their work through authors’ retention of copyright and/or agreement to license their work under the least-restrictive Creative Commons open licenses that facilitate access and reuse; whether the publisher requires non-disclosure agreements in contracts with libraries/institutions; and permitting text and data mining at no cost for scholarly purposes. (For particulars on these categories and credits, please see the PAPPI wiki.)

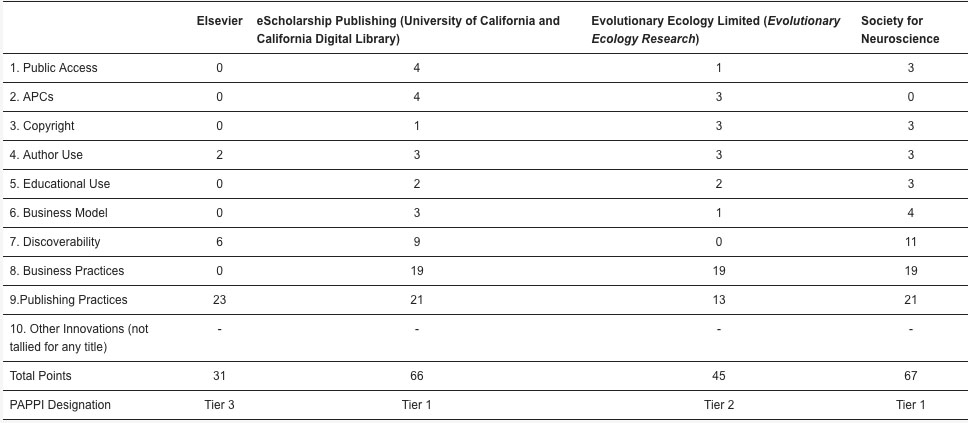

Points earned by a publisher result in PAPPI designations similar to the U.S. Green Building Council’s Leadership in Energy and Environmental Design (LEED) certification for buildings. In both, the designations represent levels of achievement in meeting the system’s outlined practices. LEED has four levels of certification (platinum, gold, silver, and certified) for the construction of buildings. PAPPI proposes three levels of credit for publishers’ practices: Tier 1 for publishers earning 62 points or more, that is, 70% of all possible points (89 total); PAPPI Tier 2 for those earning at least 45 points (51%); and PAPPI Tier 3 for those earning at least 27 points (30%).
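As a rough illustration of the tier arithmetic above, the following minimal Python sketch maps a point total to a designation. The thresholds (62, 45, 27) and the 89-point total come from this post; the function and constant names, and the "No tier" label for scores below 27, are hypothetical and are not part of PAPPI itself.

```python
# Thresholds and total as stated in the post; all names here are illustrative.
TOTAL_POINTS = 89
TIER_THRESHOLDS = [("Tier 1", 62), ("Tier 2", 45), ("Tier 3", 27)]


def pappi_tier(points: int) -> str:
    """Return the highest tier whose minimum the score meets."""
    if not 0 <= points <= TOTAL_POINTS:
        raise ValueError(f"points must be between 0 and {TOTAL_POINTS}")
    for name, minimum in TIER_THRESHOLDS:
        if points >= minimum:
            return name
    # Assumption: the post names no designation below Tier 3.
    return "No tier"


def percent_of_total(points: int) -> int:
    """Share of all possible points, rounded to a whole percent."""
    return round(100 * points / TOTAL_POINTS)


if __name__ == "__main__":
    for score in (62, 45, 27, 26):
        print(score, pappi_tier(score), f"{percent_of_total(score)}%")
```

Checking the thresholds in order from highest to lowest means a publisher is always assigned the best tier it qualifies for.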

To test this partnership scoring system, I evaluated the practices of four different journal publishers. I included Evolutionary Ecology Limited (EEL) and the Society for Neuroscience (SfN), which, respectively, publish one and two journals. I also evaluated Elsevier and the University of California/California Digital Library’s eScholarship Publishing, taking a random sample of journal titles from each of their respective publication lists. Unsurprisingly, the journals published by the library and by the learned society scored highest, because PAPPI was designed with library and society values in mind. Still, a few observations from the results bear special attention.

I deliberately chose to evaluate a society publisher that was a member of the Scientific Society Publisher Alliance (SSPA), in part because many SSPA members publish their own journals. Those that are not working with a larger publisher are able to set their own journal publishing policies and priorities, aligning with their missions and values. Though not all societies share the values of libraries and land-grants, many SSPA members do. For example, SSPA members publish both hybrid OA journals and fully OA journals, meaning that they do not publish all articles “gold” OA by default; they still maintain subscription-only content. But unlike many hybrid publishers, much of their subscription-only research is made publicly accessible after brief embargo periods.

Observation #1: Many SSPA members make 100% of articles available in PubMed Central (PMC) and in their journals after a three-, six-, or twelve-month embargo. This covers all articles, not just those that authors paid to make immediately OA through the “gold” option or those with NIH funding, and it takes the significant onus of “green” open archiving off of institutions and authors. (Of the eleven society members, four deposit all journal content in PMC, SfN among them, and two deposit all content from half or more of their journals.) This, and the fact that they are mission-driven organizations grounded in providing support to researchers and students in myriad ways beyond journal publishing, earned the SSPA-member publisher a high score as a partner with HE and libraries. Libraries may want to reach out to these publishers, and the faculty making up these societies’ memberships, and begin cultivating conversations about partnerships and collaboration if they have not done so already.

In the same vein, it is not a surprise that eScholarship Publishing scored highly. It is a member of both the Library Publishing Coalition and the Open Access Scholarly Publishers Association (OASPA), publishing only gold or platinum OA journals. Its APCs are low to nonexistent and it operates with a mission-driven business model. Naturally, its journals benefit from the relationship with the library. Metadata is well understood by the library publisher, so the eScholarship journals’ discoverability scores were comparatively high. In contrast, faculty-owned publisher EEL scored well in many areas but suffered from low scores in discoverability and publishing practices (e.g., preservation, membership in industry organizations), the practices most aligned with the value of information exchange.

While their information exchange scores were low, EEL’s scores for practices related to democratization of access and sustainability — copyright terms, educational reuse, and business practices — were relatively high.

Observation #2: Allotting to EEL’s journal, or any journal in a similar state, institutional resources to improve its visibility and impact makes sense when viewed through a partnership lens. That is, if a library is considering canceling an EEL subscription, the library may want to offer the publisher additional (and different) support. This could begin as a consultation with librarians on the topics of metadata creation or preservation practices, and might result in an ongoing partnership with a library for related services. This observation demonstrates PAPPI’s utility as a means of identifying potential partners that could benefit not only from libraries’ budgets but also from librarians’ knowledge of discoverability and best practices in publishing. These are intellectual resources that likeminded, nonprofit publishers should draw on, and for which librarians should be recognized.

Elsevier scored lowest of the four evaluated publishers, largely due to practices related to democratization of access and sustainability of scholarship as a scholarly enterprise (i.e., its copyright and licensing terms, business model, and business practices, such as a history of lobbying against OA policies), but one criterion deserves special attention in the Elsevier evaluation. In “Publishing Practices,” three publishers, including Elsevier, scored highly, but of these Elsevier was the only COPE member. COPE membership earns a publisher the highest number of points possible (5 points) for any single credit in PAPPI. If the society publisher, SfN, became a COPE member (which, based on its current practices, could seemingly be done easily, as it meets nearly every COPE requirement), then the learned society would have the highest score in Publishing Practices. This is significant because PAPPI metrics in Publishing Practices are arguably the least controversial metrics, being focused not on rights, permissions, access, or business practices, but instead on practices and standards that scholarly publishers have, by and large, agreed to set for themselves. In other words, if a publisher scores highly in Publishing Practices, and especially if it is also a COPE member, it could be considered a “good publisher.” With these metrics in mind, SfN could possibly be considered at least as good a publisher as Elsevier, if not better.

This leads to Observation #3: SfN’s Publishing Practices score demonstrates that it is doing the same as or more than the largest publisher in following or creating best practices, yet it and many other learned societies and small publishers often struggle more than larger publishers with visibility, drawing a high number of submissions, and improving their journals’ impact factors (IFs). A high IF is important to a journal’s success, and while there is broad agreement that many current uses of the IF are deeply flawed, HE continues to rely on it for many decisions, spurring a large number of submissions to the journals with the highest IFs. The situation is a catch-22. Values-based metrics could ease (not erase) some of the tension caused by relying on one dominant but flawed quantitative evaluation for journals (the IF) by providing additional metrics by which to compare publishers. In this case, the metrics would reflect commitment to access, information exchange, and the sustainability of scholarship as a scholarly enterprise.

It is worth noting that Eugene Garfield, creator of the IF, intended for librarians to use it in making subscription and collection decisions. Its application has shifted in powerful ways. It is not wild to suggest that librarians want additional metrics by which to make resource decisions. Nor is it wild to suggest that publishers want additional metrics by which to demonstrate their support for researchers and highlight contributions to the sustainability of scholarship.

There is no individual substitute for the IF. However, metrics comparing researchers’ individual scholarly outputs now include not only which journals accepted their work (and those journals’ IFs), but also their individual citation metrics (including their Hirsch index, or h-index) and alternative metrics for a more robust understanding of an author’s research contributions. And the San Francisco Declaration on Research Assessment (SFDORA) states that none of this is an adequate substitute for actually reading and considering the scholarly merit of each work. Hopefully, faculty are looking at all of these aspects when considering another researcher’s “success,” not just the accepting journals’ IFs. (Admittedly, it is an ongoing effort, but these additional metrics certainly help rather than hinder the effort.)

Just as we continue to add depth to our evaluation of research itself, metrics that compare publishers’ practices can improve our understanding of each publisher’s interest in contributing toward the health of institutions and libraries and the independence of the scholarly enterprise. Hopefully, institutions and libraries look at all of these aspects when considering what it is that makes a publisher a good partner, not just its journals’ IFs.

This shift in perspective could influence resource allocation decisions in the future. For example, the SfN publishes two journals. One is fully-OA, one is hybrid-OA. Many libraries do not pay APCs for a hybrid journal, but those same libraries pay APCs to gold OA journals run by publishers that do not act as partners. Instead, a publisher with high scores across all PAPPI categories might warrant a library paying all APCs within reason, regardless of whether the journal is hybrid or not. Thus, publishers contributing to green OA efforts, either through PMC or other means, might receive increased support for their practices if they align with partnership practices overall. On the flip side, a publisher with low scores, especially in practices related to democratization of access, may warrant little or no APC coverage by the library, and perhaps even a significant review of library subscriptions with that publisher.

Because PAPPI scores quantify practices and values to more easily and holistically compare publishers, they could also aid libraries in making subscription cancellation decisions. For example, compare a PAPPI score to the metric of cost per use (CPU). Just as the IF is useful, flawed, and pervasive, so too is the CPU metric in library collection decisions. CPU is practical but is also limited and lacking in vision, both in terms of recognizing what libraries have to offer as partners with publishers and in terms of what benefits or harms libraries and institutions might accrue from publishers. For example, if a publisher is contributing to public HE missions outside of libraries, perhaps through mission-driven support for graduate students and early career researchers and short embargo periods followed by publisher-fulfilled open archiving procedures, these are important metrics to consider because library collection budgets, in many ways, belong to HE holistically. Such publisher practices are simply not reflected in a CPU analysis.

What if, in addition to CPU, PAPPI scores factored into budgetary decisions and led to more cost-sharing opportunities between libraries and their institutions? Perhaps costs from any publisher with a sufficient level of usage and a high enough PAPPI score would be fully funded by the library (according to a library’s budget allowances), but a sufficient CPU and low PAPPI score would trigger a cost-share with university administration or particular colleges or departments. If colleges or administrations were asked to pay a percentage of costs invoiced by publishers that do not meet partnership values, that could lead to a number of interesting conversations. What the library saves could go into supporting open scholarship infrastructure, à la David Lewis.

Put another way, if PAPPI scores were applied by an institution or library, a PAPPI Tier 1 publisher might receive an increase in support from the library. A PAPPI Tier 2 publisher that scores highly in democratization of access and sustainability of the scholarly enterprise might also receive an increase in support. Resource allocations to PAPPI Tier 2 publishers with low scores in these same values would warrant further review, as would any allocation to a Tier 3 publisher or below.
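The two allocation rules described in the preceding paragraphs (the CPU-plus-PAPPI cost-share trigger and the tier-based support rule) could be written down roughly as follows. This is only a sketch under stated assumptions: the post gives no numeric cutoff for a "sufficient" CPU or a "high enough" PAPPI score, so `max_cpu` and `min_points` are hypothetical parameters each library would set for itself, and the "review" outcome for titles that miss the CPU bar is my own placeholder, not something the post specifies.

```python
from typing import Optional


def funding_route(annual_cost: float, annual_uses: int, pappi_points: int,
                  max_cpu: float, min_points: int) -> str:
    """Combine cost per use (CPU) with a PAPPI score, per the cost-share idea.

    max_cpu and min_points are hypothetical, locally chosen cutoffs.
    """
    if annual_uses <= 0:
        return "review"  # assumption: no usage means CPU cannot be computed
    cpu = annual_cost / annual_uses
    if cpu > max_cpu:
        return "review"  # assumption: the post does not cover this case
    if pappi_points >= min_points:
        return "library-funded"
    return "cost-share"  # sufficient CPU but low PAPPI score


def support_action(tier: Optional[int],
                   strong_on_access_and_sustainability: bool) -> str:
    """Tier-based rule; tier is 1, 2, 3, or None for below Tier 3.

    The boolean stands in for "scores highly in democratization of access
    and sustainability", for which the post gives no numeric cutoff.
    """
    if tier == 1:
        return "increase support"
    if tier == 2 and strong_on_access_and_sustainability:
        return "increase support"
    return "further review"


if __name__ == "__main__":
    print(funding_route(10_000, 5_000, 70, max_cpu=5.0, min_points=62))
    print(funding_route(10_000, 5_000, 30, max_cpu=5.0, min_points=62))
    print(support_action(2, False))
```

Keeping the cutoffs as parameters rather than constants reflects the point made above that PAPPI would not be the sole metric and that budget allowances differ by library.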

PAPPI scores could be relevant to HE in other ways, too. Values-based metrics may be utilized by researchers, especially those at land-grant universities, for whom democratization of access and research for the public good can be institutional imperatives. In their CVs, these researchers might list not only the IF (which identifies top journals by journal citation counts) but also the PAPPI tier (which identifies top publishers by values and partner practices) of any journal in which they publish, identify any COPE member journals/publishers, and present a variety of other metrics to give a robust presentation of their work and its reach.

Additionally, a publisher’s PAPPI score, as a measure of partnership practices, could also be shared across an institution to help inform decision-making about the publisher’s parent company and their other products (e.g., data analytics products). And PAPPI, or any other values-based evaluation system, could expand beyond publishers to form the basis of similar scoring systems for any vendor: analytics companies, textbook publishers, scholarly monograph publishers, digital content providers in the humanities, citation index providers in the sciences, and so on. It would take work to develop appropriate metrics, but PAPPI provides a model for doing so.

There have been ongoing conversations about how libraries and institutions might evaluate publishers and vendors. PAPPI is just one model. It considers libraries not as islands but as organizations responsive to, and working with, researchers and institutions. Finding shared values among members of our community and using those values to evaluate organizations with which we work is an effort that impacts, and should include, all of us. Especially now, amidst the COVID-19 pandemic, as libraries and institutions decide how to spend shrinking budgets and diminishing energies, values-based metrics suggest that investing in the work of likeminded, “like-missioned” partners, and supporting under-resourced communities, may be a way to find real solutions to resource concerns. Even if somewhat costly in the short-term, pursuing these solutions matters now more than ever for long-term sustainability and self-determination. The PAPPI system for evaluating publisher practices is one way, albeit provisional, to identify publishing partners who have earned our support. I hope we can figure out how to give it to them.

Discussion

18 Thoughts on "Guest Post — Evaluating Publishers as Partners with Libraries and Higher Education"

I think I have a much easier system. If you don’t want it don’t buy it or if free make its usage available to your community. What you are proposing is a kind of censorship matrix.

Thanks Rachel. I am sure many society publishers would find this idea interesting.

There would need to be a moderating group that reviewed the scoring system periodically and made sure it represented a fair balance between the needs of libraries and those of ‘partner’ publishers.

A question: how much have you discussed PAPPI with other librarians, and what has their response been?

Hi, Rod. This model is quite different than what most libraries use when considering a license agreement. Librarians who have seen it express interest, but recognize (as I do) that it needs more input from others, including other libraries, institutions, and publishers, before it can be applied. This is very much a starting point, not an end product. I hope there will be opportunities for further development, with learned societies playing a big role in that.

That sounds good, thanks. Please do get in touch if you want input into that further development.

I am Managing Director of IWA Publishing (a society publisher), and on the Council of the Society Publishers’ Coalition and also of ALPSP.

I share David’s wariness about metrics in a context like this, but would prefer a good, collectively developed (if imperfect) set of metrics to the current situation we have.

I’ve been thinking about this post a lot as we worked it into shape for publication here. I would very much like to see better support for the non-profit, the research society, and the university press sector by universities and libraries. But I’m not sure that support is something that can be easily translated into a quantitative metric.

One of the biggest issues may be the assumption that a library’s values are the same as that of its host institution. I was reminded of this 2016 post by Rick Anderson:

https://scholarlykitchen.sspnet.org/2016/03/17/library-institution-misalignment-one-real-world-example/

Here you had libraries and their host universities advocating on opposite sides of an issue. And that brings to mind the question of whether the library exists to serve the needs of the administration and faculty or whether the library should be in the lead, pushing the administration and faculty into better policies (or probably in most cases, some balance of the two). The measurements offered by PAPPI suggest one specific set of values. How accurately do they represent the administration and faculty positions of universities? Have administrators and faculty been surveyed? Can a metric like this ever be universal or will it only apply to a subset of like-minded organizations?

Some of the measurements are kind of fuzzy — how does one measure a company’s lobbying activities for example? Most of these are not publicly disclosed, and many publishers do not lobby directly but instead do so through industry organizations like STM and AAP. But being a member of those associations does not automatically mean that you support every policy initiative that comes out of those organizations. Is someone available to try to comprehensively collect this sort of information, and if not, how could it be equally applied to all organizations being measured?

I agree with Rod that an outside organization or panel would need to be involved to make this a reality. I think such a group could address your questions, David, about collecting information and applying scores equally.

In general, as a provisional system, there are many improvements that could be made to PAPPI. With regard to lobbying activities, there are indeed challenges in finding such information. Lobbying was perhaps not the best criterion to include, but there are ways to improve this criterion while keeping the spirit of it. For example, Harvard’s Berkman Klein Center/Harvard Open Access Project extensively document publishers that have announced support for or opposition to particular OA-related legislation.

As to surveys, no survey of administrators or faculty has been done here; however, I have not created the provisional system haphazardly. For example, there have been several publications in recent years about what it means to be a land-grant institution, and I have tried to reflect the ideas of those scholars in this system. How that would really play out with administrators would vary by institution. With a colleague, I also interviewed several publishers a few years ago. Those conversations informed much of this work. Faculty opinions vary considerably, but I’ve found that many of those involved in leading learned society publishing do share the values that informed PAPPI.

Finally, a note that academic libraries’ missions include access to and preservation of the scholarly record as fundamental principles and activities, which are historically unique from, but complementary to, the missions of other entities included here. As such, I think libraries can serve as a sort of “conscience” in higher education related to access to, ownership, and preservation of information. Involving libraries, along with other partners, in decision-making helps our institutions engage in and support better, more inclusive, practices.

It’s funny the way academia gives its copyrights to these publishing companies and then gets upset when they protect and exploit their intellectual property for profit.

I like the idea that libraries should preferentially do business with publishers that are aligned with academic interests and values. But I think another reason why Elsevier, for example, is doing a better job on metrics than SfN is because SfN uses publishing to make money to support their other activities (you can see on their financials that it generates a large percentage of income but receives a much smaller percentage of expenditure). In contrast, Elsevier spends its money in its publishing businesses. Why wouldn’t a company that reinvests in its services and products outcompete a business that is using publishing as a cash cow to subsidize its other programmes? SfN journals are not cheap – let’s not pretend J Neuro and eNeuro are diamond journals published out of the pocket of some generous professor. If SfN has failed to reinvest in its publishing business and improve the value of its services for authors and readers, then maybe it deserves to lose market share?

Elsevier and other large publishers have an incredible number of staff as publishing companies, which I am sure help them do “a better job on metrics.” Many learned societies are neither publishing companies nor businesses. So, “outcompete” is an interesting term here, because while publishing companies and learned societies that publish do compete for authors, learned societies are also serving faculty in many other non-competitive, non-publishing ways that I think deserve credit. This is one way to give credit for some of those other activities that positively impact those authors and readers who are also faculty and students.

Thank you Rachel for this excellent piece.

I see several commenters who are wondering if libraries should simply cancel contracts or if they are in fact in alignment with other campus stakeholders. Rachel is arguing for a formal framework to articulate values, which typically does not exist in the academy. The process that would yield this framework would drive alignment between library and other parts of campus, so that where appropriate the library could take a stronger position at the negotiating table or in extreme cases cancel outright. The library might not get everything it wants out of such a framework – but having one would improve overall alignment and thereby strengthen the library’s ability to incorporate academic values more effectively in its licensing work.

Some of you will not be surprised to hear me make this case, as it’s one I’ve tried to make before but with a less well developed framework proposal. https://sr.ithaka.org/publications/red-light-green-light-licensing/

Thanks again Rachel, and I hope to see something like this actually implemented.

Thanks Rachel — Thinking about your closing comments: “likeminded, “like-missioned” partners, and supporting under-resourced communities, may be a way to find real solutions to resource concerns.” Makes me wonder if a number of other factors should be included in a metric. Perhaps one that also looks at not just content access, but also support of the library and university mission and values in other ways? What about the value of integrated content into curriculum, or to support learning outcomes, digitization efforts, or other scholarship? Would be an interesting expansion to what you have provided.

Interesting work. Thanks, Rachel, for the thoughtful approach. I agree that it is more important than ever to align partner, purchase, and adoption choices with the mission and values of your organization and, when possible, to agree on these as a community. You have probably been following the Values and Principles work being done by Educopia and partners through the Next Generation Library Publishing project, funded by Arcadia. It is a flexible framework so addresses some of the concerns raised here about metrics. Potential to work together? https://educopia.org/nglp-values-principles-framework-checklist/

Hi, Kristen. I think these represent somewhat different approaches (in scope and method) to accomplish similar goals. The Next Generation Library Publishing project is fairly new to me, and Galvan’s VDRL that Lisa points out below is absolutely new to me. As I learn more, it seems many of us are thinking along the same lines. That is gratifying but it also indicates that now is a good time to collaborate. Thanks to both you and Lisa for making these connections!

Having read your article, Rachel, in which you detailed PAPPI when it was published, I am thrilled to see it brought to the attention of the SK community via your post today. I appreciate as well that you offer this as provisional and a starting place for the profession to consider. I myself feel strongly that user data and privacy would need to be accounted for, particularly given the public/land grant mission for equity and inclusion, when user data extraction is particularly harmful for certain user communities. I’m sure others will put forth additional considerations and I hope your work can find a place for extended discussion and development. I see mention has already been made of the work Educopia is doing. I’d also suggest a look at Scarlet Galvan’s Values Driven Resource License (https://speakerdeck.com/galvan/the-values-driven-library-resource-license) for those interested in this topic. Thank you again!

Would be interesting to see this turned into a plug-and-chug sort of analysis tool — select the criteria that matter most to your library/institution/faculty and create your own custom metric, rather than trying to homogenize across the board.

I do see value in articulating a method with definitions etc. that is used collectively. To just take a simple example … when people say “average APC” … is that list, contracted (like in a transformative agreement), or paid? Is it where you add up one APC per journal and then divide to get the average, or do you add up the articles that have an APC and then divide to get the average? Over the last year, last five years, etc.? Averaged over the 10th-90th percentiles or across the entire portfolio? Etc. etc. etc.

Librarians have decades of experience wrangling this sort of thing – definitions, measures, etc., e.g., in the USA for IPEDS reporting, and it isn’t easy to do much less as a single institution. But, with a shared method, a given institution/library could then select from the overall to create a picture that reflects its priorities within the larger ecosystem of considerations.

I’m teaching Evaluation + Assessment of Library Services at UIUC’s iSchool this spring semester … I’m starting to think up a class exercise from this discussion!
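To make the "average APC" ambiguity raised in this comment concrete, here is a small Python sketch comparing two of the definitions mentioned: one APC per journal versus one APC per article. The two-journal portfolio and its figures are entirely made up for illustration and do not describe any real publisher.

```python
# Hypothetical portfolio: a small low-APC journal and a large high-APC one.
journals = [
    {"apc": 1000, "articles": 10},
    {"apc": 3000, "articles": 90},
]


def average_apc_per_journal(portfolio):
    """Add up one APC per journal, then divide by the number of journals."""
    return sum(j["apc"] for j in portfolio) / len(portfolio)


def average_apc_per_article(portfolio):
    """Add up the APC of every article, then divide by the article count."""
    total_fees = sum(j["apc"] * j["articles"] for j in portfolio)
    total_articles = sum(j["articles"] for j in portfolio)
    return total_fees / total_articles


if __name__ == "__main__":
    print(average_apc_per_journal(journals))   # 2000.0
    print(average_apc_per_article(journals))   # 2800.0
```

The same price list yields 2000 under one definition and 2800 under the other, which is why a shared, documented method matters before such figures are compared across publishers.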

Agreed, standards are super important (paging Todd Carpenter). I was thinking more about different organizations with different missions and priorities. And thinking beyond the library — could you create a tool that had a standard and well-defined set of performance measures among which you could pick and choose to best reflect your position and what you expect from a partner. One could easily see a society publisher or university press using it as a factor in selecting a platform partner or submission system for example.

I was a bit surprised Elsevier scored zero for accessibility. The business invests a lot on making its content accessible to all users regardless of physical abilities. https://www.elsevier.com/about/accessibility

PS: I work for Elsevier’s parent company RELX.

Hi, Paul. I did not score any of the four publishers on accessibility (8a in the supplemental file/spreadsheet). I did not score any of the four on providing rights statements on all tables, illustrations, figures, etc. either (9c). Those two criteria were not scored; it is not that Elsevier scored zero. In fact, I would be surprised if Elsevier did not earn a high score for accessibility. I provide further explanation on my decision not to score these two criteria in the journal article.