Chris Anderson (no relation) published a fascinating post recently about how the vast amounts of data and processing capacity now available are putting pressure on the scientific method as generally practiced today, revealing that the model of discovery the scientific method presupposes might shift soon.

The scientific method of hypothesize, model, test, Anderson argues, is a result of a limited data environment. Now, in an age in which petabytes of data are emerging in a vast computing cloud, the sequence might flip to test, model, and hypothesize. Or, even more dramatically, the idea that correlation can stand in for causation might finally find credence. In vast data sets, correlation, established through sufficiently robust mathematics on sufficiently robust data, might come close enough to approximating causation that science can springboard from it.

Anderson draws parallels between this approach, in which raw mathematical practices are used against data to draw out advantage, and Google’s advertising approach. As he writes:

Google conquered the advertising world with nothing more than applied mathematics. It didn’t pretend to know anything about the culture and conventions of advertising — it just assumed that better data, with better analytical tools, would win the day. And Google was right.

Anderson’s writing is a little sensationalistic, but it zips right along.

Of course, the tension he hits upon has been within science since the beginning. Essentially, Anderson is pointing at the difference between inductive and deductive reasoning. Inductive reasoning depends upon analyzing a lot of experimental findings, then finding theories that explain the empirical patterns. Deductive reasoning begins with elegant principles and postulates, then deduces the consequences from them. As Walter Isaacson states in his biography of Einstein, “All scientists blend both approaches to differing degrees. Einstein had a good feel for experimental findings, and he used his knowledge to find certain fixed points upon which he could construct a theory.”

As Einstein himself said,

The simplest picture one can form about the creation of an empirical science is along the lines of an inductive method. Individual facts are selected and grouped together so that the laws that connect them become apparent. However, the big advances in scientific knowledge originated in this way only to a small degree. . . . The truly great advances in our understanding of nature originated in a way almost diametrically opposed to induction.

Anderson’s probably correct that computational induction on a magnitude hitherto unattainable will allow scientists to reliably discover and extrapolate using vast data sets. Quantum physics has already done so, and biology should be next. But I sense a sensationalism in Anderson’s article hinting that the two approaches cancel one another out. As the history of science shows, they have always coexisted. We need more science. It’s not a zero-sum game.

I would recommend reading his entire post. It’s well-written, compelling, and very likely partially right. I would also recommend reading “Einstein: His Life and Universe.” You will enjoy it.

At least, that’s my hypothesis.

Discussion

8 Thoughts on "Will Data Beat Hypotheses?"

seems to me that deductive reasoning is absolutely always necessary for making predictions. inductive reasoning is useful for clarification of hypotheses, and for important steps along the way to proving them. both will remain necessary to scientific advancement. after all, it is possible to look at a really large pile of data, and still postulate a completely false model. think how long it took science to discover the existence of the ovum. and to disprove spontaneous generation of frogs & the like. no matter the *volume* of data, if the crucial *kind* of data are lacking, incorrect conclusions will be reached.

Ice cream sales are strongly correlated with drownings, murder, shark attacks, boating and lawnmower accidents. Does that mean that ice cream causes these events? In the absence of theory, we would flippantly believe that these associations are causally based.

It’s frankly hard to believe that Google researchers are atheoretical. They hire some of the greatest minds — minds that would be wasted if all they did was look for simple relationships in the terabytes of data they have available.

Without theory, we’d be adrift in a sea of meaningless data.
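The ice cream example can be made concrete with a small simulation. This is only a sketch under invented assumptions: the temperature range, the effect sizes, and the noise levels are made-up numbers chosen for illustration, not real sales or accident data. A hidden common cause (temperature) drives both quantities, so they correlate strongly even though neither influences the other; once the temperature trend is removed from each series, the correlation largely disappears.

```python
import random


def pearson(xs, ys):
    """Pearson correlation coefficient of two equal-length sequences."""
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    cov = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    vx = sum((x - mx) ** 2 for x in xs)
    vy = sum((y - my) ** 2 for y in ys)
    return cov / (vx * vy) ** 0.5


def residuals(ys, xs):
    """What is left of ys after subtracting a least-squares line fit on xs."""
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    slope = (sum((x - mx) * (y - my) for x, y in zip(xs, ys))
             / sum((x - mx) ** 2 for x in xs))
    return [(y - my) - slope * (x - mx) for x, y in zip(xs, ys)]


def simulate(n=5000, seed=0):
    """Return (raw correlation, correlation after controlling for temperature)."""
    rng = random.Random(seed)
    # Hypothetical daily temperatures; the hidden common cause.
    temps = [rng.uniform(0, 35) for _ in range(n)]
    # Both series depend on temperature plus independent noise.
    # Neither depends on the other (all coefficients are invented).
    ice_cream = [2.0 * t + rng.gauss(0, 10) for t in temps]
    drownings = [0.5 * t + rng.gauss(0, 5) for t in temps]
    raw = pearson(ice_cream, drownings)
    adjusted = pearson(residuals(ice_cream, temps), residuals(drownings, temps))
    return raw, adjusted


if __name__ == "__main__":
    raw, adjusted = simulate()
    print(f"raw correlation:          {raw:.2f}")
    print(f"controlling for the heat: {adjusted:.2f}")
```

The point of the sketch is that the data alone cannot say which variable to control for. Knowing to look at temperature is itself a piece of theory.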

There is much of interest in this article – as well as the rest of this issue of Wired, actually. I highly recommend it.

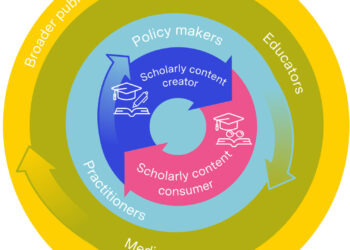

It will be critical for publishers to consider the effect these new models of information distribution will have on traditional models of publishing. In the era of data computation and analysis, there will be a much heavier reliance on the sharing and re-use of data. These are two areas with which most publishers (and their publication agreements) are frequently at odds. Traditional publishing is centered on citation rather than re-use and control rather than sharing. The value of a dataset lies not in plowing through endless tables of data, but in letting researchers run their own simulations or analyses, or incorporate and overlay other datasets they might have at their disposal.

It’s probably time for the many publishers who haven’t considered these questions to start asking themselves things like: How will you incorporate datasets into your publications? What sort of re-use and applications will your organization allow, or disallow, or try to control? Are your systems flexible enough to incorporate, republish and share large datasets?

For the community, there are tremendous open questions related to provenance, citation, preservation and intellectual property. One thing is certain: it will be difficult to find areas of science that will not be touched by the increasing flood of data.

These are very interesting and thoughtful comments. To Phil’s, you have hit on a key bit of the sensationalism in the Wired article. No mathematical approach can be atheoretical, IMHO. The choice of approach is based on a theory, implicitly or explicitly. You could use the same data to reach a different conclusion with different math — aka, a different theory of the numbers.

Todd’s point is interesting to ponder. While it may seem like publishing data sets would allow for different scenarios to be assessed, the prior point illuminates some risks with that. If the data were gathered under a working hypothesis, changing the mathematical approach might lead to the wrong conclusions. But, then again, what if the hypothesis was wrong, weak, or misleading? Therein lies the potential. Still, it would be the quality of the hypothesis, not the data per se, that would lead to an advance.

It seems true that having more data can help some activities (selling Google AdWords), but having the right hypotheses and the right data means you can confirm that you’re on solid theoretical ground. Many scientific areas have followed the wrong hypotheses until data that wouldn’t fit anymore made enough people squirm. There is a dance between the two.

Why does the term “epistemology” spring to mind?

The amount of data generated is currently very field-dependent, which might explain differences in how scientists think within this context. Organic chemistry generates far less data than a field like cosmology.

I was very disappointed with the model. You can collect all the data you want in biology (and people do). But it’s pretty meaningless without some underlying models (and there is a fundamental one which drives everything: evolution).

More data = better hypotheses. More data = different ways to approach a problem. I have yet to see someone solve a biological problem (however much data you have) where they do not include some basic biological assumptions underneath. When they don’t, the results are usually very bad, and in most cases atrocious. So lots of data != no theory, under any circumstances.

Chris Anderson is interviewed by NPR’s Brooke Gladstone on the July 21st edition of On the Media:

http://www.onthemedia.org/episodes/2008/07/18/segments/103927

Induction, deduction, abduction. If you do not recognize the last of these, your basis for discussing the role of hypotheses is at best doubtful. Obviously, Chris Anderson is in a similar position. Read, and once you know more about the formation of hypotheses, visit the subject again. Computational science is yet another way to practice scientific inquiry. Nothing fundamentally new, unless you recognize that the means we use to represent knowledge affect our understanding. Representation of knowledge is also constitutive of knowledge.