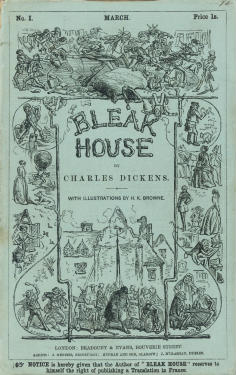

The Google book project is the neverending story. In the past week we have two new chapters: the Authors Guild filed a new lawsuit (significantly, without the publishers), whose aim appears to be to stop the HathiTrust from digitizing orphan works, and the publishers and Google told the judge at a hearing that they are continuing to negotiate a settlement but need more time. It’s now conceivable that by the time there is a resolution to these conflicts, all of the orphans under debate will have fallen into the public domain. Echoes of Jarndyce v. Jarndyce!

I am not going to rehearse the ins and outs of these legal steps, not all of which I pretend to understand, beyond recommending that people interested in this topic keep a close watch on James Grimmelmann’s Laboratorium blog, which somehow manages to present legal intricacies in plain English. I am myself a skeptic about the value of mass digitization, which puts me in a minority. From my point of view, this is a battle like the one described in Hamlet:

We go to gain a little patch of ground

That hath in it no profit but the name.

What I keep wondering is how all this came to be. It is not, as many believe, that the unbounded greed of publishers (and now authors) has driven everyone to the lawyers — it’s not greed because the orphans have no commercial value. This is a battle over principles, which are always the bloodiest.

How I long for a strategy built on foresight, preemption, and cooptation! There is an alternative universe where publishers saw that mass digitization projects were bound to come. They did indeed have decades of warning. The late Brett Butler, cofounder (1976) of Interactive Access Corporation, which lives on today as a service within the Gale/Cengage empire, told me about making the rounds to magazine publishers to acquire digital rights and of talking from the outset of the vision of eventually adding all other content types. That was over 30 years ago. Or there was Dialog and, later, ProQuest, JSTOR, and EBSCO, all of which moved analogue materials into searchable digital databases. For books there were NetLibrary, eBrary, Questia, and undoubtedly other companies whose names I cannot recall. And if these operations didn’t tip off publishers that sooner or later, there was going to be a call to digitize everything, attendance at any large library meeting would have exposed them to the librarians’ digital dreams.

It took Google to get this going, and it shouldn’t have. Publishers could have taken the lead with tightly focused projects; they could have marked themselves as innovators instead of litigators; they could have probed the technology and economics of digitization at a time when all this was under their control. They would not be fighting a rearguard action today, hoping to stuff the genie back into the bottle, praying for the retention of copyright. Incidentally, there was in fact an online service called GEnie (General Electric Network for Information Exchange), which launched in 1985. Litigation is what happens in the absence of foresight.

I am not making these remarks because I take the side of opponents of copyright. The point here is that copyright arguments are what come about when a business has not staked out ground early. A series of digitization projects, with publishers working hand in hand with libraries, would have encouraged Google to direct its voracious appetite elsewhere. (Think of how differently Google handled the journals in Google Scholar and the books in the mass digitization project.) A little bit of R&D money could have warded off a fearsome rival and may have yielded new revenue opportunities. A show of hands, please: How many publishers have R&D budgets?

One wonders as well whether publishers could have taken steps early in the game to preempt the Open Access movement. If a digital preprint service were already in place, would Paul Ginsparg have started arXiv? What kind of attention would open access have gotten from governmental and foundation officials if something along the lines of PLoS ONE was up and operating — under the control of the commercial publishers that have the most skin in the game? Failure to innovate leaves a space open for others, and when that space gets filled, it is not often with the publishers’ interests at heart.

There are several areas now where pressure is building for innovation. To pick just three:

- Ebooks sold on a subscription basis directly to consumers. I have been writing about this for some time, but was sad to see that Amazon and not publishers was taking the lead here. This particular battle is not over.

- Information services for research material marketed to independent knowledge workers. I am one such knowledge worker, who, without a university affiliation, struggles to get access to publications that I need. Open access advocates are trying to solve my problem, but this is ironic in that I would happily pay for such a service. Why won’t anybody take my money?

- “Overlay” services that organize information in a clear and accessible way. I will avoid providing a plug for any such service in the scholarly communications area, but you can get the idea by looking at Mint.com, a personal finance manager that aggregates all your bank and credit card accounts. One can imagine such a service, with its splendid navigation tools, sitting atop the information resources of a library collection.

If I were a publisher, I would not want Amazon to set up a “Netflix for books”; I would want to control such a service myself. I would not want a third party to control consumer access to my publications (unless I owned a piece of the joint venture), nor would I want the primary interface used by researchers, the window through which all content is organized and consumed, to be in the hands of a separate organization. But all these things can and will happen if publishers don’t plant a flag in the ground first.

Which brings me back to HathiTrust. I just attended a conference in which orphan works were the topic of discussion. What problem is HT solving? Some estimates suggest that 40% of books in academic library collections do not circulate. Even if that number is high, and even if that number would shrink further if full-text search were made possible, the fact is that HT is hardly looking to make commercially viable works openly available — because books become orphans for a reason, and that reason is that they don’t sell. For HT to make obscure academic titles from 50 years ago available to the specialists for whom such works are essential, a lot of eggs may get broken, which could include significant reinterpretations of copyright law, a dramatic expansion in the scope of fair use, the extension of the “first sale” doctrine to digital products, and perhaps mandatory licensing (as in the music business). This did not have to be. By not having an early vision of life-cycle publishing, publishers now face assaults on multiple fronts.

Publishers become their own worst enemy when they ask what is the market for a particular product. You can’t ask that question for new ventures; it only has a meaningful answer when a market has already been established. There is another step, an earlier step, in the process, and this is where publishers have been negligent. What kind of new capability can I create? If the capability has inherent interest, I will figure out a business model and create a new market for it later. This was ably summed up by Tim O’Reilly when he proposed that the goal is to create more value than you capture.

Discussion

9 Thoughts on "When Publishers Are Their Own Worst Enemy"

If every publishing house started a “Netflix for Books” (and not a “Qwikster for Books”), would that really work? Would readers be willing to go to hundreds, if not thousands of individual sites, to sign up for memberships at each site, to navigate the different terms of service, rates and technical requirements and to figure out which publisher publishes which book?

Would a Sony online music service which only offered music published by Sony be a hit with consumers?

Really, the key to the game you’re talking about here is aggregation. And that’s difficult for industry players who are competitors to do. Is Elsevier likely to license their books to a platform run by Wiley, and vice versa?

Often, it takes a neutral third party to negotiate terms with all of the participants and put them together. That’s why Apple was able to create iTunes where Universal couldn’t, and why Netflix has done something no individual movie studio could do.

I’m trying to think of examples where one content creator has succeeded in this sort of venture. Perhaps Hulu comes to mind, which is owned by NBC/Universal, Fox and Disney/ABC. It’s hardly the dominant player in the market though.

Examples: Copyright Clearance Center, Safari Books, Pubnet, CourseSmart, any direct marketer (e.g., Reader’s Digest).

Thanks Joe. Of these, only CourseSmart really fits the bill (as far as I can tell it’s a new collaborative company founded by six textbook publishers with distribution deals from a wide variety of publishers). CCC and PubNet are run by neutral third parties. Safari Books serves a niche that’s particularly amenable to these sorts of ventures, and while I admire them greatly, I’m not sure how well their practices would translate to other markets. Nor am I sure that the fading magazine industry is one to emulate (or that what they do translates well to books).

It’s good to see though, with CourseSmart, companies working together to keep things “in-house” as it were. It will be interesting to see how things shake out over time, and how well they are able to compete.

I keep coming back to the fact that the title that triggered this latest issue had not been checked out since 1993. If publishers and copyright holders insist on keeping their works secret in the age of discoverability, godspeed.

I tend to think many suits are worth pursuing since they protect, even in rare cases when the topic becomes an issue occasionally, if at all.

I have been uncomfortable with this suit since I do feel it holds back more than it offers, for all concerned.

Funny how “Aggregators” sounds so today and “Intermediaries” sounds so yesterday. Some publishers can get away with direct selling in some of their markets–particularly higher ed, or STM or scholarly, or even particular children’s publishers such as Scholastic. I do feel the intermediaries have a place. They may manifest in new forms, but every product needs them on some level–visible or not, paid or not.

I do agree–almost all orphaned works are orphaned for a reason. At a talk on Google library scans at Harvard, it was mentioned that something obscure was brought up for study via Google and that students are locating useful information. On the other hand I recently heard a scholar at Emory say he found focus for his own study topic when he tripped over a carton in a hallway and then took notice of what was in the carton.

great quote – Litigation is what happens in the absence of foresight.

It would appear to sum up much of what we have seen to date and probably is understandable given the number of vested interests and the inability to herd cats.

Why won’t anybody take my money?

Of course, they _will_ take your money, but at an extortionate £15-30 “per-article” rate via a credit card.

I think Joe is overlooking some historical facts here. He wonders why publishers and libraries didn’t cooperate earlier to engage in mass digitization. But in fact Project Muse got started in 1995 as a joint project of the press and library at Johns Hopkins, initially focusing on current issues but eventually, like JSTOR, adding back issues as well, and also in 2000 opening up the Muse to other non-profit publishers. He forgets that, before Amazon came along, the AAUP set up its own online catalogue to sell university press books. Google, of course, changed the game entirely when it made possible the economics of the “long tail,” but once this became evident presses were quick to sign up for the publisher program. Presses like California and Penn State were cooperating with their libraries on book digitization projects even earlier. All of these initiatives happened without the whiff of any lawsuit. It was only when Google decided to put forward a claim of fair use as “opt out” in its library project that mass digitization headed to the courtroom. It did not need to happen, and if Google had been forthcoming about what it was doing with libraries, an agreement might well have been worked out short of litigation. The principle of “opt in,” however, is so fundamental to the structure of copyright law–as Judge Chin affirmed in his ruling–that authors and publishers could not stand by and let Google’s throwing down the gauntlet go unchallenged.