Editor’s Note: today’s post is a joint post by Tyler Whitehouse (CEO of Gigantum, and author of yesterday’s post – see yesterday for bio) and Isabel Thompson (Senior Strategy Analyst at Holtzbrinck Publishing Group). Isabel’s role focuses on strategy, investment, market development and innovation. Her primary passions are emerging technologies, startups, psychology, and strategy — and leveraging the increasingly important interplay between them, to better serve research. She previously worked in market research at Oxford University Press, and in strategic consultancy. She is on the Board of Directors for the Society for Scholarly Publishing (SSP), and won the SSP Emerging Leader Award in 2018. (Full disclosure: Gigantum is part of the Digital Science portfolio, and Holtzbrinck is the parent company of Digital Science.)

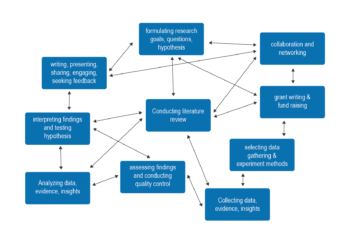

Yesterday’s post discussed current trends in the landscape of research and academic software. Today, we look at the implications of those trends. First, we look at the reproducibility crisis as a case study of how researcher-built tools can help to solve tough problems faced by the community. Second, we look at some of the broader possible implications for the scholarly communication space.

Computational Reproducibility as a Measure of Things to Come

The potential of these user-focused and tech-enabled approaches to change the current context is perfectly exemplified by recent developments around the reproducibility crisis. While the general problem of reproducibility in psychology and medicine is well known, many scientific disciplines also wrestle with a related form. Computational reproducibility adds the difficulties and fragility of research software to the existing issues of general reproducibility, such as human mistakes, oversights, and deception.

Computational reproducibility is both a human practice and a technical problem that has been essentially intractable since researchers began to consider it roughly twenty years ago. The intervening years have seen it rise as a concern for publishers and other stakeholders. Computational reproducibility is difficult because solutions must be able to manage complex software and be robust enough to overcome humans’ inability to properly capture, share, and then reconstitute that software.

In 2013, after more than a decade of research and no real solution in hand, the research world caught a break when Docker released an open source version of its “software container” engine (see yesterday’s post for an explanation of Docker and what it does), allowing researchers to leverage the tool as a piece of the computational reproducibility puzzle. While most early approaches were aimed at software-savvy researchers, by 2016 more general and user-friendly approaches began to emerge. From start to finish it took about two years to take a decades-old intractable problem and develop a prototyped service for it.

While there are a variety of computational reproducibility approaches (e.g., Stencila, WholeTale, the Turing Way and the Carpentries), in the rest of this post we will look at just three: Binder, Code Ocean and Gigantum.

Binder

The first generally user-friendly reproducibility solution was developed by the Freeman group at Janelia Research Campus in early 2016. Binder is a web service that allows end users to temporarily interact with collections of Jupyter notebooks hosted on GitHub. All the service requires is that the GitHub repository be properly configured, and Binder does the rest. This gives users without specialist skills access to highly customized work with no setup effort on their part.
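To make "Binder does the rest" a little more concrete, here is a minimal sketch of how a link to a Binder session can be put together for a public GitHub repository. It assumes the public mybinder.org service and its `/v2/gh/<owner>/<repo>/<ref>` link format with the optional `labpath` query parameter; the function name `binder_launch_url` and its arguments are hypothetical and exist only for illustration.

```python
from urllib.parse import quote


def binder_launch_url(owner, repo, ref="HEAD", notebook=None,
                      host="https://mybinder.org"):
    """Build a launch link for a GitHub repository on a Binder service.

    Hypothetical helper for illustration only. The repository itself must
    still describe its environment (for example with a requirements.txt or
    environment.yml file) for the launch to succeed.
    """
    # Percent-encode each path segment so unusual names cannot break the URL.
    parts = [quote(part, safe="") for part in (owner, repo, ref)]
    url = f"{host}/v2/gh/" + "/".join(parts)
    if notebook:
        # Optionally open a specific notebook once the session starts.
        url += "?labpath=" + quote(notebook, safe="")
    return url


if __name__ == "__main__":
    print(binder_launch_url("binder-examples", "requirements",
                            notebook="index.ipynb"))
```

Anyone who follows such a link gets a temporary session built from the repository, which is why the quality of the repository's configuration matters so much.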

While Binder’s functionality depends on how well the GitHub repository is configured and doesn’t work perfectly every time, it shows that a small group of researchers can quickly use new technology to deploy powerful and user-friendly services.

Since its launch, Binder has joined Project Jupyter and developed a decentralized do-it-yourself service called BinderHub, which enables individuals to create their own Binder service for various purposes. Researchers love Binder, and the related community is working hard to make it increasingly easy to deploy the service.

Binder is fully open source, and the associated community has the goal of becoming an international federation for the purpose of offering a permanent, communally hosted service to the world. It is a great example of the decentralization trend discussed in yesterday’s post, and you can learn a lot just by following their Twitter feed.

Code Ocean

Many in the scientific publishing world are already familiar with Code Ocean, which is a cloud-based reproducibility platform that officially debuted in 2017. Code Ocean spun out of an academic startup incubator to become the first company to offer computational reproducibility as a paid service to researchers in support of publishing their work.

Code Ocean is a centralized and managed Software-as-a-Service (SaaS) platform targeted at academics and journals for the purposes of demonstrating the reproducibility of code associated with manuscripts. The service is proprietary and designed to run in a cloud provided and maintained by Code Ocean, but the trade-off for the lack of end user control is that the interface provides a well-thought-out user experience for publishing and inspecting reproducible research in the cloud. Users can upload their code, do some configuration, and check that it works. The rest of the world can then inspect it in Code Ocean’s portal.

Like Binder, Code Ocean relies on containers to make sure that software environments are properly captured and available to re-run computations in the cloud. It also provides some versioning capabilities and the ability to use proprietary languages such as MATLAB and Stata.
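For readers wondering what "capturing a software environment" involves, the sketch below records the Python version, operating system, and installed package versions of the machine it runs on. This is only an illustration of the kind of information that has to travel with the code. It is not how Binder, Code Ocean, or containers work internally, since a container packages the whole environment rather than just describing it. The function name `snapshot_environment` is hypothetical.

```python
import json
import platform
from importlib import metadata


def snapshot_environment():
    """Return a JSON-serializable description of the current environment.

    Illustrative only: a container image captures far more than this
    (system libraries, compilers, the operating system userland, and so on).
    """
    packages = {}
    for dist in metadata.distributions():
        name = dist.metadata["Name"]
        if name:  # skip distributions with missing or broken metadata
            packages[name.lower()] = dist.version
    return {
        "python": platform.python_version(),
        "platform": platform.platform(),
        # Sort by package name so two snapshots can be compared line by line.
        "packages": dict(sorted(packages.items())),
    }


if __name__ == "__main__":
    print(json.dumps(snapshot_environment(), indent=2))
```

Even this small record shows why manual capture is fragile: a typical analysis depends on dozens of package versions that nobody remembers to write down.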

The company is notable for demonstrating the speed with which new business models can be developed, tested and successfully marketed to academics and publishers.

Gigantum

As mentioned, one author of this post (Tyler Whitehouse) is the CEO of Gigantum, an open core approach to computational reproducibility that was first announced in 2018. Like the approaches described above, it is container-based and manages and automates a variety of things so that the end user doesn’t have to. Gigantum aims to make collaboration during data analysis and interpretation easier and less error-prone, and in doing so, to eliminate difficulties around reproducibility for the purposes of peer review and publication.

Gigantum offers a work environment, that is, a framework for developing and doing actual computation, rather than a post hoc approach to making completed work products reproducible. Gigantum integrates with popular development tools that a researcher uses every day, and offers additional functionality on top through its own UI. It combines automated file versioning with an interactive record of computations in an analysis to provide a robust and transparent route through “the garden of forking paths”.

The platform is highly decentralized in that it is open source and runs wherever an end user wants, e.g., on a laptop or in the cloud. Its work products can be published and shared with a single click, which allows independent or collaborative use by other users. For sharing, Gigantum uses a proprietary cloud service that functions much as GitHub does for software developers.

Impact on the Broader Scholarly Communication Space

The exciting landscape of academic software tools is having a real impact on how researchers conduct and share their work. Naturally, this is already feeding through into the broader scholarly communication and publishing space. As more tools are developed, there are many ways that this is likely to affect other players. Below are some of the key examples, but there are many more.

Broader conception of users

A simple point, but one that is worth noting, is that the rise of data science has expanded the boundaries of who the end user of a “research” tool is, and it has done this rapidly and at scale. Data scientists are just as likely to be from industry, startups, and big corporations as from academia — and yet they all use, for example, Jupyter notebooks or RStudio (another platform with roots in academia). It is also worth noting that we see the same pattern of academic/non-academic boundary-blurring in the pharmaceutical industry. This means that it is more important than ever to keep a close eye on how your user base and its needs are evolving.

Evolving research fields

Many of the tools discussed in these posts lower the barriers to data science. This means we will see more and different types of researchers using data science tools. From digital humanities to neuroscience, researchers who previously might not have been able to grapple with certain technical complexities, or afford the cost of niche software, will now be able to utilize powerful tools – and to collaborate seamlessly with new partners across geographies, industries, and disciplines. This will influence the direction of existing research fields, and could even spawn whole new interdisciplinary areas.

Changing expectations of users

As described in Part 1 of this post, many of these tools have been created either by end users or by commercial tech players, and they prize a high-quality user experience (UX) and adoption. This naturally changes researchers’ expectations of their digital experiences. It is likely that we will see increased expectations of seamless and beautiful UX, and of quick, integrated-but-decentralized workflows. In addition, the availability of free versions of many powerful tools will change users’ expectations of “value”. Experimentation with new business models will be a necessity.

Risk (just a risk!) of irrelevance

Without putting too fine a point on it, there are of course risks for the players that don’t keep abreast of the shifts happening in the research space. It’s great news that researchers and commercial tech players have developed workflows that meet researchers’ existing or new needs — but established players who are adjacent to these workflows will need to keep a careful eye on the space to see where they can add value, so they can benefit from, and be part of, a more efficient and user-friendly system for research communication.

Expansion of “Scholarly Communication”

The “Scholarly Communication Industry” as we know it (i.e., as usually conceptualized at industry conferences or on The Scholarly Kitchen) has been embracing a constellation of tools and services for many years. Platforms plug in to multiple other platforms, publishers integrate or partner with startups, and data is pulled and shared through many APIs. However, there has historically been a tendency for researcher-used and -owned tools, such as many of those listed above, to be outside of the classic, publishing-oriented “Scholarly Communication Industry”. Of course, publishers and platforms are moving upstream, and researcher-born startups are part of the familiar “scholarly communication” space. Nevertheless, many tools that “scholars” use to “communicate” on a daily basis are not integrated into traditional publishing-oriented thinking. This contributes to an increasingly artificial split between “research” and “publishing”. In order to reduce friction for both creators and consumers of research, the industry will need to broaden its concept of scholarly communication, and be faster at adopting and integrating the tools of researchers’ daily lives. Publishers and platform providers have been supporting interoperable, multiplatform approaches for years, but now both sustainability and competitive advantage will be determined by how rapidly and flexibly these can be deployed.

The world of researcher and academic software promises to continue to evolve rapidly over the coming years. It is exciting to see the innovative, personal-problem-led approaches that are supporting our friends and colleagues. As we move into a more distributed world that can harness a broader range of skills and backgrounds, we look forward to seeing what else can be done to create novel and valuable collaborations that support not just researchers, but programming, science, and R&D as a whole.

Discussion

2 Thoughts on "Guest Post — A Look at the User-Centric Future of Academic Research Software — And Why It Matters, Part 2: Implications"

This isn’t so much a comment as a “have you seen this? It might be related.”

A Code Glitch May Have Caused Errors In More Than 100 Published Studies

https://www.vice.com/en_us/article/zmjwda/a-code-glitch-may-have-caused-errors-in-more-than-100-published-studies

Hadn’t seen this, and it is a great example of what can go wrong. Nice find!