At the recent RAVE technologies annual publishing conference (#pubstech), I sat down with Digirati’s Paul Mollahan for an on-stage conversation about open source, its advantages, its challenges, and the potential for the open source movement to help change the way that academic and scholarly publishers use technology. We began by focusing on the importance of shared standards and workflows — we ended with a lively debate about sustainability and the nature of community.

What has open source got to do with Scholarly Publishing?

In 2011, Marc Andreessen said that software is eating the world, predicting that technology companies would continue to significantly disrupt an increasingly broad range of industries. Since then, publishers have embraced technology, specifically the internet, an infrastructure and platform set dominated by open source software.

Outside of technology communities, few realize the ubiquity of open source solutions. Open source spreads risk and reduces overall costs in everything from desktop computers and mobile phones to air traffic control systems. Meanwhile, business models have matured, which has raised investor confidence. As an industry that has successfully adapted to Andreessen’s software-eaten world, we certainly need to pay attention to the importance of open source.

Over the past few months, I’ve been working alongside a colleague, Fiona Murphy, on a project for Knowledge Exchange, a collaboration of six national organizations across Europe that work together to support the development of digital infrastructure to enable open scholarship. The project is called the openness profile, which is intended to document an individual’s or group’s contributions to open scholarship. To help shape the initiative, we have conducted a series of interviews with research contributors who practice openness. We have developed a sense of how they currently share everything from experimental protocols and computer code to research data and articles. We also explored the barriers they face and what support they might need in order to do more. Although not the primary focus of our research, Fiona and I noticed that the concept of open source was a frequent topic of conversation.

There are increasingly noticeable connections between open source and open research. Both are promoted as mechanisms to improve quality by creating faster and more robust feedback loops. Both are intended to reduce waste and unnecessarily duplicated effort (validation is not duplication of effort; they’re different things). And both depend on communities to be valuable and sustainable.

As John Maxwell noted in his recent eBook on open source publishing solutions, which was reviewed here by Roger Schonfeld, open source isn’t just about making your code publicly available by sticking it up on GitHub, any more than posting something on WordPress constitutes publishing it. Both open research and open source are predicated on healthy, interactive communities. Open source software communities contribute code, provide testing, and take part in discussions. Open researchers contribute to and reuse shared data resources like GenBank or the NERC datacenters, and use services like arXiv to share work in progress, often asking community members for feedback directly.

As we interviewed scholars, data stewards, librarians, administrators, and policy makers, we found that many saw open source as a mechanism to secure transparency and community governance around the tools that they use. In some cases, interviewees saw the use of open source tools and software to be inherent to an eventually fully open infrastructure for research.

The desire for community governance has parallels with autonomy concerns felt by many smaller publishers. As Kent Anderson noted in this piece in 2011, platform providers offer the promise of easy and cost-effective access to the latest technology, but once somebody else has control of how you access your market, you become critically dependent on another organization that has its own business needs and interests. Since 2011, we’ve seen the landscape evolve significantly to include supercontinents of vertical integration, a movement upstream in the research cycle, and the potential for a loss of economic control for smaller publishers, as large ones offer bundled deals of workflow services, platform, marketing, sales, and integration into a big deal.

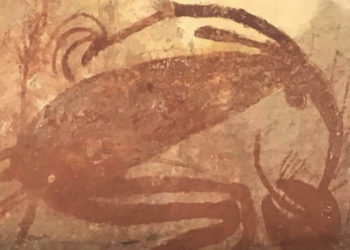

Sheep, common land…. etc., etc.

In the same way that open research advocates find themselves fighting against powerful network effects of embedded incentive structures in academia, technology infrastructures have their own network effects, as I personally discovered when I moved from physics to biology many years ago and tried to share a manuscript with colleagues that I’d written in LaTeX, only to be repeatedly asked if I had a Word version.

The reliance on community is both a strength, or even a goal, of openness and a challenge; communities don’t just build themselves. They need to have a reason to exist and they need to be driven. As Ian Mulvaney of SAGE pointed out during the conference discussions on the merits of open source, there is an emergent sustainability issue. Mulvaney cited the cURL project, which is essentially supported by a single developer. With the best will in the world, relying on a piece of software with a bus factor of 1 is a risk for any organization. Open source projects require a critical mass of contributors to be sustainable. That can be tough because, for any given project, the number of users outstrips the number of contributors by about two orders of magnitude. That raises the question of who, of all the people and organizations who benefit, is prepared to spend resources on maintaining any given project.

The most talked about open source project in the publishing space most recently has been Coko. At the end of last year, I wrote a post about the #AllThingsCoko meeting and wondered whether Coko would be the community project that offered publishers a third choice between buying into a publishing platform or building their own.

After publishing that post, I had the pleasure of seeing demos of three projects built using the Coko framework of components: Hindawi’s Phenom, UCP/CDL’s Editoria, and eLife’s Libero. On the one hand, these projects have all taken a lot of work to get to their current level of maturity, and that might give some technology leaders pause before considering a similar strategy. On the other hand, what I saw were three well-made platforms that represent at the very least a proof of principle. Arguably, these early adopters have been the ones getting the grass into shape on the proverbial common land.

Now, it just needs a community. Oh, is that all?

The next challenge will be to build that much-needed community, if the project is to expand beyond a small group of dedicated pioneers. Between eLife, Hindawi, University of California Press, and California Digital Library, there’s a lot of coverage in terms of influence in potential community groups. Also, looking at the community page on the Coko website, there are some interesting members.

Funders, open access publishers, university presses, and library publishing service providers seem like potential growth areas, if there are enough resources available to drive development and support its maintenance. Learned societies would be a valuable asset to the community. As of yet, nobody has connected the platform to a subscriber database and there aren’t yet any tools to help publishers with legacy data challenges. Some of those challenges may be addressed by service providers, but that market may still need to mature. With all the caveats mentioned, there is an interesting opportunity for coopetition that I think publishers would be well served to consider.

I would like to see open source continue to grow as part of our industry’s technology strategy. Part of my reasoning is admittedly philosophical. A wise developer by the name of Melvin Conway once said:

organizations which design systems … are constrained to produce designs which are copies of the communication structures of these organizations.

As our industry tries to adapt to the changing needs of academia, we’re going to need to support more collaborative and open workflows. In addition, we will likely face increasing calls to be more open ourselves as academics begin to see open source as a necessary part of transparency and perhaps even a sine qua non of open research. Changing the way we work will not be easy. It will mean forming new types of business relationships with different types of service providers, who in turn will have to actively nurture development communities to ensure that standards and good practice are met. As Paul Mollahan said:

…this is a key challenge for these emerging communities; without the right level of time and effort dedicated to these activities, divergence can quickly occur reducing the potential benefits for the wider communities. Ensuring this effort and cost is recognised and managed is often a barrier to long term success

In the end, however, there should be economic benefits. By standardizing further around use cases, using open standards, and common workflows, there’s an opportunity to share risk and bring significant efficiencies.

If we’re to figure out how to support researchers in being more open and collaborative, we need to better understand what it means to be open. By doing so, we can hopefully follow the example of other technology driven industries and collaborate in a way that shares risk, reduces costs and improves sustainability.

Discussion

8 Thoughts on "Open is Eating the World: What Source Code and Science Have in Common"

This is a great take and a pleasure to read.

I continue to wonder about the scale of some of the scholarly-publishing-specific projects versus something like Drupal (or name your major piece of infrastructure such as all-things-Apache). While this should be taken with a giant grain of salt, Drupal.org claimed 30,000 developers in 2013, but even a very modest number would suggest at least several thousand developers have contributed to both Drupal core and its many modules. Add to that the company Acquia, which has ~800 employees who support companies in Drupal development, deployment, and administration.

Now consider that Drupal is an imperfect system that many developers reject for a long list of architectural and deployment reasons. (Note I am not arguing for or against Drupal–just using it as an example and mention its limitations only as a cautionary tale.)

Do scholarly platforms need to reach the scale of something like Drupal? Are they doing enough already by adopting open-source tools as major elements of the platforms? Are they at risk of not reaching critical mass of use and/or development?

I don’t know, honestly, but it’s worth further discussion and research.

Thanks Bill. I’m glad you enjoyed the read.

I completely agree with you that there’s something of an open question with regard to scale. I’ve been told by people in the past that publishing can’t support industry-specific open solutions, either because there’s not enough money in the system or because there aren’t enough developers who would contribute.

One issue that causes a lot of confusion is the question of why developers work on open source projects. Sometimes it’s because they can learn a lot about a given technology (Drupal being a good example) and then sell that expertise in the form of consulting. Sometimes companies hire people to work on open source projects because it’s in their strategic interest to have influence over the project or see it progress. Projects like Drupal are of such a size that network effects drive this process. This sort of project is different. There needs to be a deliberate effort to embrace it.

On the face of it, our industry spends a lot of money on software and platforms, more than enough to cooperatively develop an infrastructure, but it’s more complicated than that. There’s a need for architectural oversight and shared standards, the community needs to be convened and driven, there are costs of transition, execution risks, and perhaps even a fear of being left holding the proverbial baby.

It’s like so many technology problems. It’s not really a technology issue that we’re struggling with, it’s a people question.

Thank you! Very interesting response. I would add that some companies adopt open source as means of attracting talent. Developers often like the tools.

hi all

An interesting article! I want to agree with your point about community Phill and to point at a common misconception about open source.

First, on community. It is indeed hard work to build and scale community. It is, I would argue, harder than building software. Which is pretty much why we prioritise community building at Coko and we lead with the much articulated statement “Software is a Conversation”. It was coined by friend and advisory board member Charlie Ablett, and we live by this sentiment. Community building skills are also very rare. We are fortunate enough to have a wealth of experience in community building in Coko, but we are very mindful to never take our eye off the ball. Community requires constant gardening and it never grows exactly how you anticipated, so being ready for surprises and always remembering to cultivate, not command, is essential.

However, if you do it right, it does work. At Coko we really only started building community in earnest just over 18 months ago. From that moment we have grown from one platform being built on PubSweet (Editoria) to over a dozen. That is pretty amazing growth. But it’s also very hard work 🙂

As for your question, Phill, about why open source devs would want to work on open source: I think it is a mistake to think about community as ‘free labor’. In fact, this is not only largely a myth, but also a very problematic one, because it supposes and proposes that the dominant contributors / participants are, and should be, those who have the time (and, by implication, the money) to volunteer their skills to a project. This is problematic on many levels, not least because those who can afford to donate their skills in this way are a very narrow and privileged demographic.

It is important, therefore, to steer open source away from this kind of ‘foundational’ myth. At Coko we pay professionals to do the work, as do all of our community members. So why do open source devs like to contribute to open source projects like Coko, Hindawi, eLife, EBI, Editoria, Micropublications, etc.? Because they are paid, and paid well.

I think we need to break the link that suggests open source can only survive from volunteer efforts. It’s not actually true and, further, it’s a problematic myth if embraced. We don’t embrace it, and I’m pretty sure I would find agreement with this position with most, if not all, of the good folks in the Coko community.

Hi Adam,

Thanks for commenting. I completely agree that it’s harder work to scale a community than build technology. It’s always the social components of any innovation that represent the biggest challenges.

I’m not sure I can completely agree that people who donate skills are a narrow and privileged demographic. I know quite a few working developers who contribute to projects, often in evenings and weekends, as well as hobbyist programmers, sometimes still in education, who do it to be part of a community and build skills. As I suggest in my post and the reply to Bill’s comment, there is a community of developers that take an entrepreneurial approach to participating in open source communities. There are also lots of companies who pay developers to work with open source technologies, so your point is well taken that open source isn’t primarily built on free labor.

Perhaps we agree that within scholarly communication, it’s important to get organisations to participate in the emergent open source infrastructure.

I think it’s also worth pointing out that there are many open source projects out there. The recent eBook from John Maxwell et al. https://mindthegap.pubpub.org/ describes a number of them just within the publishing workflow. There are also organisations working on open data solutions and repositories, like Dryad, Zenodo, and Center for Open Science. Beyond that, there are annotation solutions, eLab notebooks, data science oriented programming languages (e.g., R), CRIS systems, and various databases like GRID and OpenCitations.

hi Phill

I agree that the Schol Comms sector must participate in, and contribute towards, open source infrastructure. If anyone wants to know how to do this they are free to drop us a line at Coko anytime.

Mind the Gap is an awesome report. It highlights the rich diversity of the field. I also recommend anyone interested in this area to read it. Mellon, MITP, John Maxwell and his team all should be commended for this excellent work.

Who pays the copy editor?

haha 🙂