There are other important value metrics beyond the strength of a journal. This might come as a shock to some STEM publishers, who have flourished or floundered based on the performance of impact factor rankings published each June. While the value of the journal as a container is an important value metric and one that needs to continue, the rapidly evolving alternative metrics (altmetrics) movement is concerned with more than replacing traditional journal assessment metrics.

Like much of life these days, a key focus of our community has been on those qualities that are measured to ensure one “passes the test.” The coin of the realm in scholarly communications has been citations, in particular journal citations, and that is the primary metric against which scholarly output has been measured. Another “coin” for scholarly monographs has been the imprimatur of the publisher of one’s book. Impact factor, which is based on citations, and overall publisher reputation provide the reading community signals of quality and vetting. They also provide a benchmark against which journals have been compared and, by extension, the content that comprises those titles. There are other metrics, but for the past 40+ years, these indicators have driven much of the discussion and many of the advancement decisions. Given the overwhelming amount of potential content, journal and publisher brands provide useful quick filters for quality, focus, interests, or bias. There is no doubt that these trust metrics will continue. But they are increasingly being questioned, especially in a world of expanding content forms, blogs, and social media.

At the Charleston Conference last week, I had the opportunity to talk with fellow Scholarly Kitchen Chef Joe Esposito about altmetrics and its potential role in our community. Joe made the point, with which I agree, that part of the motivation of some who are driving new forms of measurement is an interest in displacing the traditional metrics, i.e., the impact factor. Some elements of our industry are trying to break the monopoly that the impact factor has held on metrics in our community so that newer publications might more easily flourish. Perhaps the choice of the term “altmetrics” to describe the movement implies the question, “Alternative to what?”, which leads one back to the impact factor and its shortcomings. The impact factor is an imperfect measure, a point even Eugene Garfield acknowledges. We needn’t discuss its flaws here; that is well-worn territory.

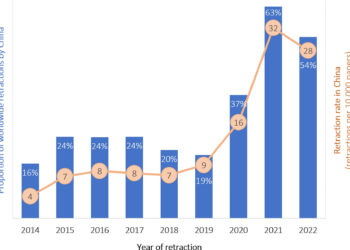

The inherent problem with that focus is that it misses a key point of assessment: the actual impact of a particular piece of research (or ultimately its contributor), which is represented by one or more individual articles that may have been published in multiple journals. Our current metrics in scholarly publishing have been averages or proxies of impact across a collection (the journal), not the item itself, or the impact across the work of a particular scholar or particular research project. The container might be highly regarded, and the bar of entry might have been surpassed, but that doesn’t mean that any particular paper in a prestigious journal is significantly more valuable within its own context than another paper published in a less prestigious (i.e., lower impact factor) title. The growing number of papers that get retracted, even within the most highly regarded titles, is a signal of this.
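To make the averaging problem concrete, here is a minimal sketch. The citation counts are invented for illustration and describe two hypothetical journals, not real titles. It assumes a journal-level figure behaves roughly like a mean over the journal's articles, which is a simplification of how any real impact measure is calculated.

```python
from statistics import mean, median

# Invented citation counts per article; purely illustrative, not real data.
journal_a = [120, 45, 3, 2, 1, 1, 0, 0, 0, 0]  # "prestigious": two hits, many barely cited
journal_b = [9, 8, 8, 7, 6, 6, 5, 5, 4, 4]     # "lesser" title: steadier uptake

def journal_average(citations):
    """Journal-level average: the kind of figure a container metric reports."""
    return mean(citations)

def share_below_average(citations):
    """Fraction of articles cited less often than their own journal's average."""
    avg = journal_average(citations)
    return sum(1 for c in citations if c < avg) / len(citations)

for name, cites in (("A", journal_a), ("B", journal_b)):
    print(name, journal_average(cites), median(cites), share_below_average(cites))
```

In this made-up data, journal A's average is nearly three times journal B's, yet eight of A's ten articles are cited less often than the least-cited article in B. The container's number says little about any one item inside it.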

Assessment is increasingly important to the communities directly related to, but not part of, the publishing community. Yes, libraries have been applying usage-based assessment and impact factor for acquisitions decisions for some time, but there is more they could and should do to contribute to scholarly assessment. Beyond that, academe and the administration of research institutions rely on publication metrics for researcher assessment, promotion, and tenure decisions. Grant funding organizations have used the publication system as a proxy for assessing grant applicants. However, these are only proxy measures of a researcher’s impact, not directly tied to the output of the individual researcher.

Scholarly communications is also expanding in its breadth of accepted communications forms. Scholars are producing content that gets published in repositories and archives, blogs, and social media — separately from or in addition to journals. Some researchers are publishing and contributing their data to repositories such as ChemBank and GenBank. Others, such as in the creative arts, are capturing performances or music in digital video and audio files that can be shared just like journal articles. Traditional citation measures are not well-suited to assessing the impact of these non-traditional content forms. If we want to have a full view of a scholar’s impact, we need to find a way to measure the usage and impact of these newer forms of content distribution. Addressing these issues is the broader goal of the altmetrics community and it is perhaps clouded by the focus on replacing the impact factor.

In order to make alternative metrics a reality, there must be a culture and an infrastructure for sharing the requisite data. Just as there was opposition by segments in the publishing community to the creation of standards for gathering and sharing of online usage data in the late 1990s, there exists opposition to providing data at the more granular level needed for article-level metrics. It is instructive to reflect on the experience of our community with COUNTER and journal usage data. When Project COUNTER began, usage data reporting was all over the map. In addition to wide inconsistencies in how data was reported between publishers, there were many technical issues — such as repeated re-loading of files by users, double-counting the download of a PDF from an HTML page, and downloads by indexing crawlers — that skewed the statistics, making them unreliable.
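The kinds of distortion described above can be sketched in a few lines. This is a minimal illustration of the cleanup step, not COUNTER's actual processing rules. The 30-second window, the crawler markers, the `Request` record, and the sample log are all hypothetical choices made for the example.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class Request:
    user: str         # session or IP identifier
    item: str         # article identifier
    fmt: str          # "html" or "pdf"
    timestamp: float  # seconds since an arbitrary start
    user_agent: str

# Hypothetical values for illustration; not COUNTER's real thresholds or robot list.
DOUBLE_CLICK_WINDOW = 30.0
CRAWLER_MARKERS = ("bot", "crawler", "spider")

def is_crawler(req):
    ua = req.user_agent.lower()
    return any(marker in ua for marker in CRAWLER_MARKERS)

def count_uses(requests):
    """Return {item: uses} after dropping crawler hits and rapid repeat requests."""
    last_seen = {}  # (user, item) -> timestamp of that user's previous request
    uses = {}
    for req in sorted(requests, key=lambda r: r.timestamp):
        if is_crawler(req):
            continue
        key = (req.user, req.item)
        previous = last_seen.get(key)
        last_seen[key] = req.timestamp
        if previous is not None and req.timestamp - previous <= DOUBLE_CLICK_WINDOW:
            continue  # a reload, or a PDF fetched right after the HTML page
        uses[req.item] = uses.get(req.item, 0) + 1
    return uses

sample_log = [
    Request("u1", "article-A", "html", 0.0, "Mozilla/5.0"),
    Request("u1", "article-A", "pdf", 5.0, "Mozilla/5.0"),      # PDF from the HTML page
    Request("u1", "article-A", "pdf", 7.0, "Mozilla/5.0"),      # reload
    Request("u2", "article-A", "html", 10.0, "Mozilla/5.0"),
    Request("x9", "article-A", "html", 11.0, "ExampleBot/1.0"), # indexing crawler
    Request("u2", "article-B", "pdf", 12.0, "Mozilla/5.0"),
    Request("u1", "article-A", "html", 4000.0, "Mozilla/5.0"),  # a genuine later visit
]

print(count_uses(sample_log))  # {'article-A': 3, 'article-B': 1}
```

Seven raw requests reduce to four counted uses. Two publishers applying different windows or crawler lists to the same log would report different totals, which is why shared rules matter more than any particular threshold.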

Fortunately, tools are quickly falling into place to provide metrics on the things that the larger scholarly community really needs: individual items and individual scholars. The broader scholarly community has been aware of these needs for some time, and we are making considerable progress on implementation. There has been a great deal of progress in the past decade in increasing the granularity of assessment metrics, such as the h-index and the Eigenfactor. These metrics are gaining traction because they focus on the impact of a particular researcher or on particular articles, but they are still limited by dependence solely on citations. Newer usage-based article-level metrics are being explored such as the UsageFactor, Publisher and Institutional Repository Usage Statistics (PIRUS2), both led by COUNTER, as well as other applications of usage data such as the PageRank and the Y-Factor. Additionally, infrastructure elements are becoming available that will aid in new methods of assessment, like an individual researcher ID through the ORCID system that officially launched in October. Other projects described in Judy Luther’s posting on altmetrics earlier this year are examples of infrastructure elements necessary to push forward the development of new measures.
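For readers unfamiliar with the h-index, a short sketch may help. It follows the standard definition (the largest h such that h of a researcher's papers have at least h citations each). The citation counts are invented.

```python
def h_index(citations):
    """Largest h such that h of the papers have at least h citations each."""
    ranked = sorted(citations, reverse=True)
    h = 0
    for rank, cites in enumerate(ranked, start=1):
        if cites >= rank:
            h = rank
        else:
            break
    return h

print(h_index([10, 8, 5, 4, 3]))  # 4
print(h_index([25, 8, 5, 3, 3]))  # 3
print(h_index([]))                # 0
```

The only input is a list of citation counts, which illustrates the limitation noted above: a dataset, a blog post, or a recorded performance contributes nothing unless it is cited in the traditional way.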

Our community needs to move these pilots and discussions of alternative metrics into the stage of common definitions and standards. We need to come to consensus on what should be included and excluded from calculations. For example, we need clear definitions of what constitutes a “use” of a dataset or software code and how to quantify the applications of data from within a larger collection. Some of these determinations might be domain specific, but many of these issues can be generalized to broader communities. Because these resources can and often do reside in multiple repositories or resources, thought needs to be given to how metrics can be consistently reported so that they can be universally aggregated. Additionally, we will need commitment to a level of openness about the automated sharing of usage data so that network-wide measures can be calculated. Efficient methods of real-time (or near-real-time) data collection should also be considered an important element of the infrastructure that we will come to expect. While a central repository of data for anonymization purposes or for more robust analytics might be valuable, it probably isn’t a necessity, if we can reach agreement on data supply streams and open data-sharing tools and policy guidance.
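As a rough sketch of why common definitions matter for aggregation, consider several sources reporting on the same items. Everything here is hypothetical: the source names, the item identifiers, the report format, and the small vocabulary of agreed metric names are assumptions made for the example, not an existing standard.

```python
from collections import defaultdict

# Hypothetical shared vocabulary; agreeing on such definitions is the standards work.
AGREED_METRICS = {"download", "view", "bookmark"}

def aggregate(reports):
    """Merge per-source reports into network-wide totals per item and metric.

    `reports` maps a source name to a list of (item_id, metric, count) tuples.
    Rows using a metric outside the agreed vocabulary are set aside, not summed.
    """
    totals = defaultdict(lambda: defaultdict(int))
    rejected = []
    for source, rows in reports.items():
        for item_id, metric, count in rows:
            if metric not in AGREED_METRICS:
                rejected.append((source, item_id, metric))
                continue
            totals[item_id][metric] += count
    return {item: dict(metrics) for item, metrics in totals.items()}, rejected

reports = {
    "publisher_site": [("dataset-1", "download", 40), ("dataset-1", "view", 200)],
    "institutional_repo": [("dataset-1", "download", 15), ("dataset-1", "hits", 90)],
    "subject_repo": [("dataset-1", "download", 5), ("code-7", "bookmark", 3)],
}

totals, rejected = aggregate(reports)
print(totals)    # {'dataset-1': {'download': 60, 'view': 200}, 'code-7': {'bookmark': 3}}
print(rejected)  # [('institutional_repo', 'dataset-1', 'hits')]
```

The undefined "hits" figure cannot be folded into a network-wide total because nobody outside that repository knows what it counts. No central data store is needed for this kind of merge, only agreement on identifiers, metric names, and a way to exchange the reports.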

By all accounts, it is early days in developing alternative metrics and the technical and cultural support structures needed. Some have argued that it might be too early to even begin establishing best practices. However, if alternative assessment is to really gain traction, agreeing at the outset on the needed components of those metrics will solve many downstream issues and lead to more rapid adoption by everyone who needs better assessment of the value of scholarly communications.

Discussion

33 Thoughts on "Altmetrics — Replacing the Impact Factor Is Not the Only Point"

Hi Todd,

Great post. I’m on the “it’s probably too early” side of the fence, but only marginally so. There is a spectrum of sources of ALM data, and right now some of them are pretty robust, and are probably at exactly the right moment where they would benefit greatly from discussions around standardisation. Other signals are far more evanescent, and yet other signals are known to be coming online, but aren’t really there yet.

We discussed some of these issues at the ALM workshop just over a week ago that was hosted by PLOS: https://sites.google.com/site/altmetricsworkshop/.

One of the actions coming out of that workshop is to look at building a registry, not for collecting the data, but rather descriptions of sources. I think that could help a lot. I use the term registry, but the first pass will likely be the simplest thing that can be done quickly, a shared spreadsheet, or suchlike. The key point is to get visibility on the sources.

Another area that has a lot of potential is the creation of tools that help people. It would seem that it might be a waste of effort to build standardisation infrastructure around objects that are not used. The associated hackathon https://sites.google.com/site/altmetricsworkshop/altmetrics-hackathon came up with one such potential tool http://rerank.it/ to help with filtering PubMed search results.

– Ian

My reaction to the thought that it’s too soon is threefold. First, under the best-case scenario it will take at least a year to undertake any truly broad-based consensus project, so let’s start the clock as soon as possible. Second, there is a spectrum of “standards” that ranges from very informal agreed definitions, through best practices, to formal standards. We can move from early-stage definitions to more formal and stricter specifications over time as agreement coalesces. Finally, I agree with your perspective that altmetrics includes a spectrum of approaches, and a suite of growing definitions that apply to different aspects at different times would probably be a useful approach. A central repository/registry would be a useful project and something that could easily be moved forward.

Unfortunately, I wasn’t able to attend the ALM workshop in SF, but my colleague Nettie Lagace was. I heard it was a great discussion and am looking forward to some of the outputs.

I think one sign that it’s “early days” at the community level is that these are still called “alt.” Within specific organizations measuring their own performance, there is nothing “alt” about usage data, advertising or marketing click-throughs, time on site, percent registered for alerts or other features, percent activated, and so forth. There are core metrics being used every day to measure the effectiveness of online businesses, and these are not alternative, but necessary.

I think you’ve also hit the nail on the head on another reason “alt” is a problem — it traps the community against the impact factor. “Impact” is a very specific concept around a very specific reaction to published works. By being “alt,” I think there’s a tendency to look over the shoulder and see how well the alt-metric aligns with the impact factor. That’s a mistake. Metrics like “time on site” or “PDF downloads” measure different things. They are not alternatives, but unique measures all their own. Dispersion, engagement, social sharing, use in education — all measure behaviors that are important but not “acknowledgement in subsequent scholarly works.” That’s a different way of integrating the literature, the Ouroboros Factor.

I would vote for discussing metrics, not alt-metrics, which the community should begin to standardize around. That’s a better lit path without the spectre of the impact factor looming everywhere.

I completely agree, but unfortunately altmetrics, as a name people recognize for this work, might be a horse that’s already left the barn. As you note, the problem is that the variety of things that fall under this rubric is quite a large and diverse set of assessment measures.

You may be surprised to learn that I agree with you, and in fact made the same argument to Dario Taraborelli and Jason Priem almost a year ago. However, that horse done left the barn. The value, in these early days, of alt is that it points to all the other sorts of impact we’ve been missing out on through the focus on citations. So not a replacement of, but an addition to.

Do those other Alt factors have any relevance to the science being presented? For instance, 3,000 people viewed the article. What does the 3,000 mean?

Harvey, in general this is what people are beginning to try and work out. Nobody has concrete answers yet and there are in any case more interesting ways to look at things (for example characterizing data rather than taking simple counts).

Looking at simple views is plainly not sufficient, but what if you knew that 3 people had written an F1000 review of an article? Or that it had been saved to a reference manager 3,000 times? Is that indicative of something? This is where more research is required.

However alt-metrics don’t all need to be relevant to the quality of an article. A robust measure of quality is always going to be difficult to achieve. Citations certainly aren’t the answer (even though that’s the best we have). It’s arguably more useful / valuable to be measuring other things: has data in the paper been reused? Has it reached the right audience? What did that audience think about it?

EUAN:

The audience for a given article in any given journal is very small. I am not a scientist but I am a publisher and I know audience size. What additional value is there to the audience that an Alt can give? Will the Alt impact the science? Will the scientist change what s/he is doing if they had more information on the audience? Has IF changed what scientists do? The answers to these questions, I posit, are no: it will not and has not changed what the scientists do. If I am doing research on the homeobox gene (did I get it right, David?) will I change what I am doing because 500 more people read articles on some other gene?

Harvey, Euan hits a key point here. While you are right in that the audience for most articles is small, the research community as a whole is much larger, and altmetrics have potential to better serve that broader audience beyond just those working in a particular area and reading a particular paper.

Technology has reached a point where we can track an enormous amount of data about what happens to each paper after it is published. We can better use that data to help different parts of the community do their jobs better, rather than just relying on one metric, the Impact Factor, and trying to shoehorn it into every purpose, no matter how poorly it fits.

Think of a graduate student interested in keeping up with the literature. For that student, knowing what other researchers are bookmarking gives an immediate benefit, an ability to stay current, rather than waiting for a few years to see what citations will tell us. For the development officer at a university, charged with bringing in donations, knowing about the media coverage and social media discussion that a paper engenders is much more valuable than citation. That job requires letting people know what your university is accomplishing and that it’s important, reaching beyond the journal to the donating public. For a grant officer or a tenure committee, they may want to measure a researcher’s “impact”, knowing whether their work inspired progress and new experiments in the field. So for them, citation may be the best way to answer their questions.

The idea is that we have the ability to provide all sorts of information to all sorts of different people.

And yes, homeobox, exactly right, but note that it is a DNA binding motif, with most species having many genes that could be classified as “homeobox genes”, so really it’s more a gene family, there is no one “homeobox gene”.

David thank you for the info, you are the first to put meat on the bone and not talk about Alt in the abstract.

It is making more sense now.

If it’s helpful, I wrote a piece about altmetrics for the Biochemical Society’s magazine here:

http://www.biochemist.org/bio/default.htm?VOL=34&ISSUE=4

I’d like to toss my two cents in on this discussion if I may.

I am a senior scientist working for a small chemical manufacturer in Ohio. Most of our customers are pharmaceutical and medical diagnostic companies, but we also have corporate and academic customers engaged in many other fields of research and product development. Like most of our senior scientists, I spend lots of time reading journals looking for (a) authors who use our products to see how they use them, (b) authors who do interesting things without our products to see if/how they could benefit from our products and to develop new product ideas, and (c) publications containing procedures (usually synthetic procedures) that will help me in whatever new product I am developing. We scour all types of journals, open access and paid subscription, covering a variety of subject areas.

None of us in our company, so far as I know, really care about journal metrics. Our publications have been patents and two editorials written by the company president. We haven’t published our research in peer-reviewed journals. Journal metrics don’t affect what we do or do not do. What we care about in the literature are things that enhance our company’s bottom line — nifty applications of products we sell or bright ideas for new product development. A journal’s metrics don’t help our bottom line. A journal’s content might help us make money.

Having said that, I do see some value in citations as a metric. Measuring the number of citations that a specific journal has isn’t really very useful to me, but measuring citations for each published article can be useful. In my reading of synthetic procedures, I have run across quite a few published preps that are less than helpful, if not outright garbage. If I find a procedure that looks like it might be helpful, I look to see if the publisher has listed citations of that article on the publisher’s website, and I look to see if the citations are mostly from the same research group or from different groups. A paper containing a prep that has no citations raises a cautionary flag in my mind. I also treat cautiously a paper that is cited only by the same research group that originally published it. A paper containing a prep that is cited by different research groups is welcome, because it suggests that others have tried this procedure and found it to work.

None of the preceding paragraph is meant to say that I use only preps that have citations. I have to use my own, and my colleagues’, knowledge of chemistry to determine if a prep is worth pursuing. I can’t just turn my brain off and rely on the publication. Citations by article (not by journal), though, assist me in ranking the relative worth of various procedures and help me prioritize what procedures I want to try first.

As for the alt factors, I honestly admit that I don’t know enough about them to say what will be useful for me as a bench scientist. As much as I love open access journals and would like to see more of them, open access vs. paid subscription is not going to be a useful metric to me. Content is useful. How I get that content is not so useful. If the metrics community wants to help the bench scientist, you need to have some way of telling me that the content of the article (not the journal overall) has a measurably useful value to me. Has someone else looked at the prep or the application and found it to be good, not-so-good, or downright bad? Has someone else been able to build productively on the work done in a particular paper? That is what is useful to me as a metric. How well the journal is doing in terms of overall citations isn’t really that helpful.

Thanks for taking the time to read this. I hope it has been helpful and not just noise.

As a data-driven guy, I feel that the alt-metrics community is making two fundamental errors:

1. It is driven by anti-hegemonic values (i.e. attempting to dethrone the Impact Factor), and

2. It is aligned with open access publishing.

Metrics are proxies for some abstract concept, like quality. When one attempts to construct a new metric, it is important to understand what it stands for. From my perspective, the alt-metrics community is more focused on gathering and reporting data and not on their interpretation. Responding to critique with the response, “well, it’s still too early” is a failure to grapple with the difficult work in constructing metrics.

Secondly, there is really no reason why the alt-metrics community needs to be so heavily aligned with the open access community, and I believe that this relationship is a liability (not an asset) to the development of new metrics.

Yeah, I have never quite figured out the connection between OA and altmetrics. I’ve seen metrics that included the “openness” of the journal in the calculation of the measurement of that article’s quality. Or there’s this gem, where the increasingly shady Guardian let the CEO and an employee of Mendeley write what is essentially an advertisement for their own company under the guise of an editorial:

http://www.guardian.co.uk/higher-education-network/blog/2012/sep/06/mendeley-altmetrics-open-access-publishing?CMP=twt_fd

Midway through an article about altmetrics, they shift gears and start trying to make the case that the goal of altmetrics should be to drive the uptake of OA journals over outlets like Science and Nature. Huh? I thought the goal was to measure and understand the research being done, not to prop up a particular business model or ideological crusade.

I think you meant to say, “the increasingly awesome Guardian”. 😉 The point being made there is that for quite some time, obsession with the IF among authors and publishers did hold back the progression of open access. That’s still true to some degree, but open access has found its way nonetheless. More broadly, the lack of good ways to measure the different kinds of impacts different kinds of works have has left some researchers shoe-horning those works into the paper format just to get credit, and freeing researchers from that is the larger goal, along with getting more rapid feedback than can be obtained via citation analysis. The open access crowd just got there first because they’re really the “modernize research practices” crowd. Open Access is just one component of this, better metrics is another, modernizing the format of publications is another, etc. That said, altmetrics are now on Nature, and Elsevier is getting into the game as well, so even the more conservative players are coming around.

If by “awesome” you mean “awesome at losing money,” then I agree with you. They are said to be losing around £150,000 per day, around £54 million last year. They’ve recently asked the UK government to tax internet users and that the resulting funds should be used to prop up their failing business. If they are the leaders in experimentation with new business models for publication, then we’re all in big trouble.

I would describe their science coverage as having gone from being some of the best in the business to devolving into something of a content farm, allowing anyone willing to write for free to vent their spleen in order to fill pages at low cost. Do you not see the inherent conflict of interest in allowing the founder and an executive at a company to write an article about their own company? Would it be okay for the Guardian to allow the owner of a homeopathic medicine company to write articles about how effective his bottles of water were at curing disease? Isn’t this the exact same conflict of interest, allowing Pepsi to write articles about how nutritious Pepsi is, that brought down ScienceBlogs?

But to get to your point, the obsession with the Impact Factor affects all journals, regardless of delivery model. Any new journal struggles until it can prove its value and quality standards. This is not something that only hurts OA journals. Anything new has to go through these same growing pains. To be truly effective measures of quality, altmetrics must address all publications and can not, in any way, provide biased results toward certain publications that employ certain business models. Tilting the field destroys the credibility of altmetrics and further cements the frustrating dominance of the Impact Factor.

There is a lot of overlap between the people driving both OA and altmetrics. But the two must be separated out and not depend on one another. OA journals must be responsive to the needs of the community in order to thrive. Altmetrics must fairly reflect the real world performance of the research in question and not offer unfair bonus points that can be bought by paying the right group of publishers.

David I have to agree with you. The OA model, regardless of what its proponents say, does not live outside of the economic model of all publishers. Someone pays and someone will only pay if there is a return. The Alt model at this time seems to be pandering to the OA journals and giving them a sense of worth which may or may not exist.

OA publishers aren’t getting bonus points because of something intrinsic in the metrics, and no one I know is arguing they should. The overlap is just incidental to the more progressive mindset of the more progressive publishers. However, with Nature and Elsevier joining in, that initial “first-mover” advantage is going away.

Is it incidental? First there is a move back to page charges and now there is a move to a new ALT system to measure the IF of OA. It, too, is part of an economic model to give worth to OA. If Alt gets established, a company of some sort will follow and it will make money off of Alt. Nothing wrong with that, but let’s recognize what it is. And what is it? A competitive model to ISI’s Impact Factor model. That Elsevier is beginning to play in this market could very well be so that it can offer a product that competes with ISI.

It is important to realize that there are lots of people starting up companies with the goal of making money from the research community through these altmetrics. There is a conflict of interest that must be recognized from many of the promoters. I worry that we’re just going to trade off the dominance and control of Thomson-Reuters for the dominance and control of some other new for-profit company instead.

One of the more interesting efforts, particularly Jason Priem’s proposal, is to instead follow the example set by CrossRef, and put these important measurements under the roof of a not-for-profit that’s supported by both the research and the publishing community. That offers a better promise of control for the community itself, and hopefully a freedom from potential abuse. The downside is that by taking profit out of the equation, you may slow progress, as some of the motivation for the best and brightest minds to participate is removed.

Harvey, several companies have already started around #altmetrics. See Impact Story, Plum Analytics, Altmetric.com, and others. None of them are explicitly trying to compete with or displace the IF.

Many of the questions you’re asking here are the questions I often get from someone encountering the concept for the first time, and that’s good. I’m glad you’re interested. Unfortunately, I don’t have time to go over the answers, so may I give you some homework? Read Jason Priem’s earlier writing on the subject, much of which is linked to from the horribly biased but informative Guardian article David refers to in another comment here, and check out the business models of the altmetrics startups I mentioned above. I think you’ll find answers to most of your questions there, and then feel free to comment again or email me directly if you want to know more.

I have seen metrics that do exactly this. I’ll try to dig out the proposed metric that included a ranking of “openness” of the journal as part of the measure of the article’s quality. I know it’s in our Twitter stream somewhere.

That overlap, whether coincidental or not, harms the credibility of altmetrics. One of the biggest flaws of the Impact Factor, if not the biggest, is that it measures the journal, not the individual article or the work of the individual scientist. If the outlet that the article appears in and its access policy are determining factors in the metric, then you’re just repeating that same mistake.

Neutrality and fairness are critical. I do think that the best altmetrics efforts are aware of this and do offer a level playing field. But if we want acceptance and validation of these metrics by the research community, they have a lot to prove, and they can’t be seen as merely a means to a different end, as something meant to promote a particular social agenda.

That may just be an image problem, but it is an important one. There’s a high hurdle that must be cleared in order to overcome inertia and become established.

David:

You bring up a good point re: what is the author impact factor and how can one measure it? I guess that comes down to who gets tenure and who gets grants. Also, it comes down to who gets published in the highest IF journals. Lastly, one goes to peers in the discipline and asks: Who is good and who will be good?

Tracked down that example:

The Journal Transparency Index, a numerical metric proposed to rate the reliability and trustworthiness of journals:

http://www.the-scientist.com/?articles.view/articleNo/32427/title/Bring-On-the-Transparency-Index/

For some reason, the proposed calculation of this metric includes:

How much does it cost someone without a subscription (personal or institutional) to read a paper?

That strikes me as being completely irrelevant and off topic for what this metric attempts to offer, and provides a powerful advantage to OA journals.

Yet another example of how far the Guardian’s science coverage has plummeted:

https://genotripe.wordpress.com/2012/11/19/cancer-does-not-give-us-view-of-a-bygone-biological-age/

Yeesh.

Phil, I am not sure I agree with your second point. A growing number of the people engaged in the altmetrics discussions are not engaged in open access publishing, or even publishing at all. This was my point talking with Joe in Charleston. “Traditional” publishers, i.e., not leaders in open access, are extremely interested and active in their sharing of these metrics. I note that Nature just announced (on October 31) that they would also be providing enhanced metrics. Elsevier has also been a leader in advancing some of this work. These metrics have much broader application, and funding organizations, university administrations, and libraries are all engaged (or becoming more engaged). That said, some of those who have been leading the project are supporters of the OA community perspective, but not of the OA publishing community per se.

There is a bias toward openness, because traditional publishers have been unwilling to provide this information. However, I don’t expect that this will continue well into the future. Nature’s move in this direction is a perfect example of where the industry is headed. This is part of the reason why discussions about how, why and under what terms this information will be shared need to begin.

(disclaimer: I run Altmetric LLP)

To be fair Phil the alt-metrics community isn’t some shadowy cabal, or at least not any more of one than the STM publishing community 😉

I think there are probably as many primary motivations as there are groups involved so I’m not sure generalizing works.

That said I’m not going to deny there are probably shared anti-hegemonic values – though (1) they’re not aimed at the IF particularly, more the idea that citations are a sufficient way to measure every type of impact and (2) I’m not sure ‘hegemony’ is the right word, given that most researchers don’t seem to agree.

“Responding to critique with the response, “well, it’s still too early” is a failure to grapple with the difficult work in constructing metrics.”

I take your point, but personally feel that the whole point of alt-metrics is to look at the issue of impact in lots of different ways.

Some of the raw data is demonstrably useful already (ask, say, authors able to quickly see feedback on their work, or to identify a particular kind of uptake). Why hide it away until we’ve got a bunch of specific new metrics?

I can’t see why we shouldn’t produce tools to help with qualitative assessments now as well as (perhaps) quantitative ones in the future.

Furthermore, constructing robust metrics *is* difficult, and advertising the ideas and data gets people talking, which in turn can help persuade smart metrics researchers (like you) to take a look at the field, even if it’s to cast a necessarily critical eye over things.

“Secondly, there is really no reason why the alt-metrics community needs to be so heavily aligned with the open access community”

I’d echo Todd’s comment below.

I’m aligned with OA in that I think it’s generally a good idea and a model suitable for many journals. Don’t most people in the industry nowadays? I can’t say it’s affected my alt-metrics thinking in any way.

Hi Todd,

I think your post is spot-on. Moving to an article level for assessment of that particular article is important.

There are many things worth measuring, and each tells us something different. But “usage” or “popularity” is not the same as “impact” or “quality”. There’s some interesting work being done along the same lines in the world of journalism:

“Metrics are powerful tools for insight and decision-making. But they are not ends in themselves because they will never exactly represent what is important. That’s why the first step in choosing metrics is to articulate what you want to measure, regardless of whether or not there’s an easy way to measure it. Choosing metrics poorly, or misunderstanding their limitations, can make things worse. Metrics are just proxies for our real goals — sometimes quite poor proxies.” Jonathan Stray, Nieman Journalism Lab

That’s really the key as far as I’m concerned, clearly defining what you want to measure, why it is important, and to whom it has importance. I worry that too much of the altmetrics world starts with a measurement and then works backward from that. Here’s something I can measure, now I have to figure out whether it means anything, and that nearly always results in looking for a correlation with citation or impact factor as the deciding factor in whether the metric is meaningful.

That’s a poor scientific methodology–one must design experiments to answer a question, not the other way around. Social media engagement, usage, citation, all of these things can tell us something that’s useful to a different audience. If I’m a graduate student wanting to keep up with what my peers are currently reading, I need a different set of metrics than a grants officer at a funding agency, looking for a researcher with a long track record of effectiveness in driving a research program that advances my agency’s goals.

I think Dave and Phil have defined the problem. Namely, what is the purpose of Alt? Who will use Alt, and why? As for OA’s association with Alt, especially in STEM publishing, I don’t think there is any effort to deny these journals inclusion in the ISI database. If memory serves, inclusion depends on the quality of the journal and its impact on the science.

As for author recognition, that is determined by the quality of the work they do, not the number of blogs or tweets they post.

Further, I think that OA in some form is becoming more and more the province of traditional publishers. Just look at Springer. Elsevier and Wiley are creating more and more OA, or what I prefer to call author-funded, publishing ventures.

I agree: if we let altmetrics simply mean “alternatives to the Impact Factor,” we’ll have settled for much less than we ought.

Of course, the term “altmetrics” belongs to the community at this point, and the community will have to decide what it’s an “alt” to. That said, when I came up with the term, the Impact Factor was not my chief concern; I was thinking of alt as “an alternative to traditional, unidimensional, citation-only approaches.” For what it’s worth, I talk to a lot of folks about this, and my sense is that this understanding still represents the consensus view.

After all, there are tons of cool alternatives to the Impact Factor that aren’t typically included under the banner of altmetrics, including journal-level metrics like SNIP, author-level metrics like the h-index, and article-level metrics like citation counts. These are still looking exclusively through the lens of citation-counting. Nothing at all wrong with that; there’s lots of awesome things that can be seen through that lens.

But there’s also a lot of really important and exciting knowledge the citation-lens simply can’t resolve. Astronomy emerged as a scientific field with the optical telescope, and astronomers still use optical telescopes today. But they also use radio, infrared, and more exotic instruments, gathering information Galileo couldn’t have dreamed of. My hope for altmetrics is that soon scientometricians, and the decision-makers who rely on them, could have a similarly expansive set of tools, sampling from all over the impact spectrum.

I think part of developing those tools is certainly initiating conversations about ways we can work together as a community of practice to establish reasonable best practices and share knowledge. Some of this, like the ways we report usage data, will require working pretty closely with publishers who provide the data, paving paths blazed by COUNTER, PIRUS, MESUR, and other usage-oriented projects.

Most altmetrics data, though, actually exists in a world quite independent of publishers: in databases curated by Wikipedia, Mendeley, CiteULike, Twitter, and so on. Here the field may benefit from robust and healthy competition, as independent providers compete based on transparency, comprehensiveness, and features like visualization, normalization and contextualization. Even these, though, will benefit from continued development of the community and continued discussion, sharing, and (especially) research into validating what are still very new and unproven data sources.