At the Digital Book World (DBW) conference last week, I wandered around asking the question I always do: How much of publishing is generic–that is, with application to all publishing segments–and how much is specific to one segment? In the STM world there are many things that have no application elsewhere–for example, open access mandates from funding agencies–but it’s good to look for generic items. These can provide clues as to how certain things will develop, in effect allowing the publishers of one segment to do their R&D on the backs of publishers from other segments. An example of this kind from several years ago would be Google’s mass digitization of books without publishers’ permission, a prelude to the tech industry’s assault on copyright, which now includes such hard-to-believe policy statements as declaring that text-mining is not a copyright violation. If only we had seen the dark heart at the center of Google earlier! As for understanding in the early days where Amazon was headed, I doubt there is a book publisher on the planet who does not regret having supported Amazon wholeheartedly. Hmmm. When does Amazon decide to go after STM?

The generic item that was being talked about widely at DBW this year is the collection of end-user data. For the trade publishers that make up the bulk of DBW’s audience, end-user data is almost like a piece of moon rock: foreign, unexpected, unintelligible. This is because trade publishers historically have sold books indirectly through such channels as bookstores and wholesalers, and thus have had little or no knowledge of how their books are actually used. College publishers–almost all book publishers–have had the same problem. In the journals world the situation is a bit different. Professional societies have always had some idea of end-user activity because of the subscriptions sold to members and a few other interested parties, but in institutional markets, end-user data was always harder to come by, as librarians are pretty much united behind the idea that the collection of any data is a woeful violation of privacy. And maybe it is.

Enter digital media, and everything is different. At this point we all know about the revelations of Edward Snowden. We know that Google and Facebook are capturing our every keystroke. We know that in the world of the Internet, someone might feel lonely, but no one is ever alone. I am as creeped out about this as anybody who is not employed by the CIA or the NSA, but I do wonder if some data collection may be benign, that some of the surveillance economy may in fact be in my interest and in the interests of others, that it might indeed have a progressive component. Orwell gave us the fearsome Big Brother; Cory Doctorow gave us the chilling Little Brother; but now we may have the prospect of a Big Sister, a benign force that should not be tossed out as we attempt to flee from the depredations of government spying and commercial invasiveness.

By the way, in case you are not familiar with it, the 1984 of our time is The Circle by Dave Eggers (discussed here), which is at times as disturbing as Orwell’s original. Eggers’s target is not a Stalinesque totalitarian society but the groupthink of social media. This book should be taught in high schools.

A contrary view was eloquently expressed by Francine Prose on a recent blog of The New York Review of Books. It’s clear that Prose is not anti-tech; she carries a Kindle and recognizes that technology can enhance lives. But she does feel uncomfortable that “someone” (is a machine someone?) is “watching” her as she reads Thackeray’s Vanity Fair; she resents having to share her private experience with Becky Sharp with whoever is reviewing her reading logs. Now, I know Becky Sharp and if I had to spend any time with her, I would welcome some company, and all the better if these observers came packing. But I pretty much share Prose’s point of view. I don’t want anyone to know that I stopped reading a book halfway through, and I was both discomfited and amused when Prose asked if a reader would like “someone” to know that he or she had reread certain passages of Fifty Shades of Grey.

What really troubles Prose, though, is that all this new data–what we read, when we read, how far we read–is going to be used against writers. If the data shows that readers turned off at a certain point, would writers be urged to write differently, to find a way around that point? Here is where we part company. I would like very much to have feedback on how readers respond to things I write. Is this Big Brother or the kind of information that helps us to grow?

End-user information for publishers comes in two varieties: information about individuals (Joe stopped reading Thinking, Fast and Slow after he was 35% of the way through the book; Joe has purchased all of the books on this year’s Booker Prize shortlist) and aggregate data (50% of all readers stopped reading when they were 40% of the way through The Goldfinch; people who purchased The Second Machine Age also purchased Race Against the Machine and The Lights in the Tunnel–examples culled from Amazon). Publishers can use the aggregate data for planning, for forging marketing campaigns, and for wheedling authors to focus on some things instead of others. The application for trade authors, fiction writers in particular, is obvious, but it also has extensions into textbooks (no one can figure out the examples in that textbook) and journals publishing (readers are more likely to read an entire article if the abstract includes quantitative information–a made-up example). My anecdotal observation is that few people are terribly concerned about the collection of anonymized aggregate data. Publishers are thus likely to collect and mine this data intensively in the years ahead. Publishers that don’t do this, or that are too small to do this meaningfully, will be at a disadvantage. Big Sister will lead to more efficient product development, better curation of texts, more productive discovery services, and a lower cost basis for the enterprise.
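
For readers who like to see the distinction spelled out, here is a minimal sketch in Python of how individual reading records might be reduced to the anonymized aggregate kind. Everything in it is an assumption made up for illustration: the records, the layout of each record, and the function names. It does not describe any retailer's or publisher's actual system.

```python
from statistics import median

# Hypothetical per-reader records: (reader_id, furthest fraction of the book reached).
# These values are invented for illustration; they are not real data.
reading_logs = [
    ("r1", 0.35),
    ("r2", 0.40),
    ("r3", 1.00),
    ("r4", 0.10),
    ("r5", 1.00),
    ("r6", 0.40),
]


def share_stopped_by(logs, threshold):
    """Return the fraction of readers whose furthest point is at or before threshold."""
    if not logs:
        return 0.0
    stopped = sum(1 for _, progress in logs if progress <= threshold)
    return stopped / len(logs)


def aggregate(logs):
    """Drop the reader identifiers and keep only summary statistics."""
    progress = [p for _, p in logs]
    return {
        "readers": len(progress),
        "median_progress": median(progress),
        "share_stopped_by_40pct": share_stopped_by(logs, 0.40),
    }


summary = aggregate(reading_logs)
print(summary)
```

The individual variety is the `reading_logs` list itself, where each row can be tied to one reader. The aggregate variety is what `aggregate` returns, which no longer carries any identifier. With very small groups, of course, a summary can still give an individual away, so this is a sketch of the idea and not a privacy guarantee.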

Collection of data on individuals is another matter. Few of us like this; some of us oppose it very strongly. But even here there is a benign dimension. One mail-order company has figured out that I am tall and sends me catalogues with extra tall sizes. Do I feel violated by that or do I welcome the opportunity to shop from home instead of making my way to the nearest Rochester men’s store? A book publisher or online retailer notes my propensity for reading science fiction and sends me offers for more: Where is the Satan in this?

It is in educational and training materials, though, where the collection of user data may be most valuable. Adaptive learning materials adjust themselves to an individual user’s needs. Need a few more exercises in trigonometry? Well, here they are. Stumped by that chapter on organic chemistry? Let’s take it more slowly this time, with a series of examples to show you the way. Here again Big Sister is not studying my political leanings with the aim of throwing me in jail or snooping on some illicit reading pleasures with the intention of committing blackmail. No, here Big Sister is helping me learn more about a subject, helping me to get a better grade. And if we subscribe to the view, as I certainly do, that a better educated population makes for a better society, Big Sister is a positive contributor to civil society.

The most interesting illustration at DBW of the application of end-user data was offered by an executive of a college publishing company. His argument was that “name” authors would become less important in the future as each text comes to include a number of feedback loops. Thus an author would create a text; the text would be sold for classroom instruction; and then students would begin to use it. But over time the students’ experience with that text would be fed back to the publisher. Hmmm. It looks like we need more examples here, a more extensive description there. The text would continually be revised in the laboratory of the marketplace. Over time the contribution of the original author would become a smaller and smaller component of the text that the students use. Authors, in other words, would originate a text, but the feedback mechanisms would refine it.

So here is my prediction not for 2015 but for 2018: the publishers, regardless of segment, that will demonstrate the strongest proclivity for growth will be those that (a) gather end-user data, (b) feed the implications of that data back into their products and services, and (c) develop business practices that derive value from that data. I encourage everyone to think long and hard about why Elsevier purchased Mendeley, a company with almost no revenue and with little prayer of ever securing much revenue. We should expect to see more companies acquired on the basis of the end-user data they accumulate. How to value such entities will be part of the art of managing a publishing company and will provide handsome bonuses for the bankers that lurk among us.

Discussion

28 Thoughts on "Big Sister: Some Beneficial Aspects of Collecting User Data"

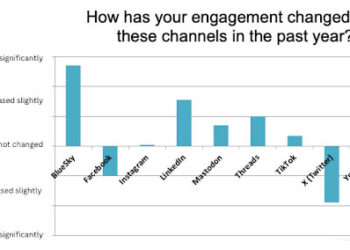

I would throw altmetrics into the pot as a potentially major source of user (and potential user) information, especially with the addition of message content analysis. Blog articles and their comments, tweets and retweets, etc., can be valuable user reviews. And as you point out, discussion of existing writing can shape future writing (as well as future thinking and research). In fact I am presently exploring the concept of distinctive patterns in systems of altmetric tweets. When these tweets are about a document they are a form of user data, even if some of the users have not read the document being discussed.

Joe. As usual, a very thoughtful post. While I agree with the premise of using data to provide feedback to the publisher or author, I’m not sure that it necessarily means a feedback loop is inevitable–even desirable–when it comes to text. Feedback loops would work well if we had a notion of an “ideal” text (think a Platonic version of the ideal story), and were satisfied with revisiting that Platonic structure over and over and over again, as all texts begin to converge on that ideal text.

Readers like novelty, meaning something that we’ve never seen before. So, while data can create a force to move authors toward a generic text form, there will be other forces moving authors away from that ideal toward new forms for which there are no data. Originality may work best by insulating the author against the data that comes with a feedback loop. Publishers who don’t see a future of Danielle Steel novels may be better off turning off the feedback loop, or at least keeping the data hidden from their authors.

As Henry Ford famously said, “If I’d asked customers what they wanted, they would have said ‘a faster horse’.”

Interesting to see that Amazon, one of the leaders at putting user data to use, completely disregards it when it comes to their new creative ventures:

http://grantland.com/hollywood-prospectus/up-a-river-navigating-amazons-strange-occassionally-successful-borderline-incoherent-tv-strategy/

This is why Amazon Studios’ biggest innovation — its willingness to post every pilot it orders in order to be seen and rated by the public — is actually a misdirect. The wisdom of crowds is famously unhelpful when it comes to art and, as critic Tim Goodman pointed out a few days ago, networks have been focus-grouping pilots for decades with little certainty to show for it. (Seinfeld, for example, was one of the lowest-testing pilots in television history.) In fact, it’s not clear if Amazon is even really listening to the feedback it so zealously solicits: According to Goodman, Transparent was the lowest-rated pilot in its entire season. The truth is, Amazon isn’t much interested in what casual viewers think at all.

David:

I tend to agree. While in college publishing I worked on “managed or developed” books. Some made it big, some did not, and some that were just put together by the author and editor became best sellers and are now in their 10th editions!

From the above I learned that people are fickle and at best publishing is a gamble.

Ford’s quip is probably empirically wrong, given that there were over a thousand US car makers when he started. I am pretty sure that a proper survey would have found a cheaper car way ahead of a faster horse. More broadly I find the general argument that data stifles creativity hard to accept.

Also, Joe’s point about a feedback loop being important in training (and reference) materials is well recognized. I used to design compliance manuals for Federal regulations and it is impossible to anticipate all the confusions that others can find in one’s writing. Different people are simply confused by complex issues in many different ways. That is where my taxonomy of 126 different kinds of confusion originated. People are complex critters. See http://scholarlykitchen.sspnet.org/2013/02/05/a-taxonomy-of-confusions/. Moreover, in some cases actually presenting the feedback dialog is very helpful.

For those that serve the library market, I hope that growth is also a result of sharing the data with their library customers and helping libraries develop their services and community loyalties. Though others in the library community disagree with me, I think data analytics with strong policies and practices on data management and protections are adequate for balancing privacy concerns and service quality concerns (see presentation at Fall CNI Meeting). But, since in the library market, collecting that data is enabled by the library itself, the library should also gain value from it. Another approach would be to discount the price of the product by the market value of the data that is collected. I’d prefer the former – that the publisher and library are both able to add value.

P.S. I can’t help but add a footnote here that the gendered aspects of juxtaposing “Big Sister” and “Big Brother” are really distracting from the otherwise very thought-provoking commentary.

Because I feel it might be of interest to those following the comments on this post – the session that Andrew Asher and I did at the Fall 2014 CNI on analytics and privacy is now available on video at http://www.cni.org/news/video-analytics-and-privacy/ The companion draft framework can be seen here: https://www.evernote.com/shard/s22/sh/3d95fca3-eba3-4902-8117-17aedd89dc19/474dfbb91811d4cd Happy to hear any feedback – we have a follow up session at ALA Annual 2015.

I worry that the abuses will outweigh the good here (see the recent scandal over an Uber executive suggesting he could use collected data to smear any journalist that writes a negative article about the service http://www.theverge.com/2014/11/18/7240215/uber-exec-casually-threatens-sarah-lacy-with-smear-campaign).

I maintain that privacy is the new luxury, and expect to see a continuing market develop of customers willing to pay for a service that preserves one’s privacy. As the other famous quote goes, if you’re not paying for it, you’re not the customer, you’re the product being sold.

So Ms. Smith shops in her local shoe store. The clerk, with whom she has shopped for years, knows that Ms. Smith likes a certain style and color of shoe and a) lets her know when there is something new in stock and b) lets the store’s buyer know to be on the lookout for those kinds of shoes. Ms. Jones shops at Zappos.com. Zappos knows that Ms. Jones likes a certain style and color of shoe and lets her know when there is a new shoe she might like in stock.

The local shoe store is commended for its excellent customer service. Zappos is criticized for being intrusive and not respecting privacy. I’m just confused.

For me the difference is that my local shoe clerk is unlikely to sell my purchasing history to spammers looking to fill my inbox, not to mention information about my purchase of orthopedic shoes to insurance companies looking to avoid covering my condition.

Let’s not assume that all uses of end-user data will be done poorly or invasively. Privacy is important, as is personal choice. But let’s also not stop thinking whenever the topic comes up.

I do see the potential benefits. But I want companies to earn my trust, rather than having the default that I be a sitting duck for abuse. As I said above, I’m willing to pay more to have some control over what’s done with my data. I do think those willing to throw themselves to the wolves should receive a discount, given the other revenue channels that they’re driving.

Very interesting post. I particularly like that you’re a fan of Eggers. I, too, am wondering if Amazon will ever take on scholarly publishing.

Joe, I’ve got to say I’m somewhat surprised at your statement “…which now includes such hard-to-believe policy statements as declaring that text-mining is not a copyright violation.” All of the uses I’ve seen of the practice, at least in academia, seem to fall under fair use. It’s a pretty transformative use, it’s typically non-commercial, it’s usually in service of an academic investigation. Maybe I’m too sheltered in these ivory towers, but can you provide examples of where you think it either isn’t fair use or where it might be skirting the boundaries of fair use? To be clear, I’m not and wasn’t a fan of Google’s scanning project, but that’s not the only corpus that’s used for text mining, and even when it is used, most non-Google uses seem to me to fall under fair use.

From a university press perspective, I’d like to share some user data that’s recently changed my thinking about one of our major platforms. Project MUSE is an amazing platform, and I think for journals it’s been a godsend for most UPs, but on the books’ side, the user data I’ve been getting is causing me to seriously rethink our participation. One of the things that I noticed right off the bat once receiving chapter download reports was that books that we suspected might have text adoption potential or trade potential had the highest number of chapter downloads and typically the most downloads of all chapters of particular books. Since those reports came with information about which institutions were sites of the greatest downloads, I did some digging and was easily able to correlate reduced numbers of print sales at bookstores associated with institutions where the book was available at that institution’s library through Project MUSE. I was then even able to track down the rather large course where one particular book was being used and sure enough, the instructor was encouraging students to use the Project MUSE edition (unlimited downloads and no DRM) of the book rather than purchasing the book. So at this point I’m very hesitant to include any book on the platform that has any adoption potential at all. And the revenue damage that the inclusion of that book has done is irreversible as there’s no way to stop that book from cannibalizing all future adoption revenue—I can’t unsell it from those libraries—and so far Project MUSE won’t allow the removal of a title from future sales. Lesson learned, the very hard way.

And while I was writing this comment, the answer to the question about when Amazon will get into at least the educational publishing arena entered my inbox. Introducing KDP EDU

Finally, I also have to admit that I too was a bit puzzled by the Big Brother/Big Sister dichotomy. Other than those issues I really enjoyed this post.

I find that statement troubling as well. If I subscribe to a journal, download all the articles and then go through them by hand and make a list of all that contain the word “cancer”, I have just text-mined the journal. I don’t see any copyright violation in this, nor in doing the exact same thing via a machine.

What matters is what one does with the results of the text-mining. If Google scans in orphan works and makes a search engine (displaying only small snippets), that’s a transformative use. If Google tries to sell the entire corpus or the full text of any individual book, then there’s a copyright problem.

To understand why this is not a simple issue, read this letter I sent in November 2013 to Judge Denny Chin, who is the judge presiding over the suit the Authors Guild brought against Google:

Dear Judge Chin,

I have just read your decision to grant Google summary judgment in this case and, as a fellow Princetonian, I must express my disappointment. You have evidently bought into the Ninth Circuit’s line of reasoning about “transformative” fair use, which I believe to be seriously mistaken.

No one questions the social utility of Google Books. Indeed, publishers recognized its utility early on. The university press I headed at Penn State at the time was the first university press to sign up for the Google Partner Program, and Google itself cited our participation as a model case in further promoting the program. But the Library Program is another matter.

You forget that Judge Newman recognized the social utility of photocopying in the Texaco case, yet nevertheless found copying in that case not to be fair use. As the judge argued:

“We would seriously question whether the fair use analysis that has developed with respect to works of authorship alleged to use portions of copyrighted material is precisely applicable to copies produced by mechanical means. The traditional fair use analysis, now codified in section 107, developed in an effort to adjust the competing interests of the authors – the author of the original copyrighted work and the author of the secondary work that ‘copies’ a portion of the original work in the course of producing what is claimed to be a new work. Mechanical ‘copying’ of an entire document, made readily feasible by the advent of xerography . . . , is obviously an activity entirely different from creating a work of authorship. Whatever social utility copying of this sort achieves, it is not concerned with creative authorship (italics added).”

In your analysis, you quote from Pierre Leval’s classic article suggesting that a new work is “transformative” if it “adds something new, with a further purpose or different character, altering the first with new expression, meaning, or message.” You go on to say:

Google Books does not supersede or supplant books because it is not a tool to be used to read books. Instead, it “adds value to the original” and allows for “the creation of new information, new aesthetics, new insights and understandings.”

The key mistake here is located in the words “allow for.” That is NOT what Leval said. He said that the act of fair use itself must consist in “altering the first with new expression, meaning, or message,” and Google’s computer-created indexing does not do that; there is no creativity in the functioning of Google’s computer algorithm. It is, as Judge Newman said, merely a “mechanical” procedure.

Why is this so important? It is important because there are those who will exploit the notion of re-purposing, which captures one part of Leval’s argument, to do real damage to the Constitutional purpose of copyright. Like the Ninth Circuit, they will ignore the idea that the new work must itself come from an act of human creativity that adds new meaning, etc.

I have written at length about this problem, pointing out that the ARL, in its Code of Best Practices on Fair Use (2012), uses the idea of re-purposing to argue that, because scholarly monographs and journal articles were originally written for the benefit of other scholars, not students, their use in the classroom for student instruction is fair use since the use is for a different purpose. That, effectively, means that entire articles and books can be justifiably digitized and used in the classroom without the publisher’s permission. One likely result is that the entire market for paperbacks published by university presses for classroom use will be wiped out.

Well, you might say, the fourth factor will come to the rescue there. But then why is the fourth factor not applicable to the Google case also? Google’s supplying the participating libraries with scans of the books in their collections displaced a potential market for the publishers supplying those scans. This is of some practical importance. Not long after the Google Library Project was announced, Penn State Press approached the University of Michigan Library with a proposal for the Library to have a greater range of rights to use the scanned version as licensed by the Press in exchange for the Library’s giving a copy of the Google scan to the Press so that the Press itself would not have to go to the expense of digitizing all of these books again. The Library was happy to agree to this proposal; Google was not and prevented the Library from doing so. Here, then, the “social utility” of this enterprise was directly undercut by Google itself. As a result, the Press and all other publishers whose books had been scanned by Google had to go to the expense of digitizing their own backlists. How does that kind of obstruction advance the progress of knowledge?

I have just scratched the surface here in offering this rebuttal. My arguments are laid out in much more detail in the attachments I provide. Two of these are also accessible at the web site where most of my writings about copyright are available: http://www.psupress.org/news/SandyThatchersWritings.html. The third is the gist of a talk I gave at a meeting of the Copyright Society of the USA last February in Austin, TX. It is there that I explore the deleterious implications of what I have called the “Ninth Circuit disease.”

As for the social utility of mass digitization, which most of us publishers are happy to recognize, it seems far better to have Congress legislate on that issue with a statute focused on it, rather than try to bring it under the ever expanding umbrella of fair use, where in my view it can only further contribute to the conceptual confusion that is already rampant.

I speak here, by the way, only for myself and not as a representative of any organization.

I suspect the objection to text mining not being a copyright violation is that text mining nearly always requires that a copy be made of the entire text. Assuming the original from which the copy was made was legally obtained, the question then comes down to a fair use analysis, and no one would suggest that something like a word cloud or an article gained from the insights of the mining is either a violation or a substitute for the original work.

My understanding is that text mining typically requires an index of the text, which is very different from a copy (although the text can be reconstructed from the index). If so, then the first question is whether indexing is a copyright violation. Given that most search engines do indexing it would seem not, but has this question actually been settled?

It was not the indexing itself that has been at issue in the Google case. Rather, it was that Google did not own the copies of the books that it used for its indexing, but instead borrowed them from libraries and made copies of them. The making of the copies is the alleged copyright infringement. In this sense, although Google may have “legally obtained” the copies, it was not legal for Google, so some authors and publishers argued, to make copies of them, even for just the purpose of indexing. Normal indexing itself could not be considered a violation because it does not reproduce anything verbatim in a way that is not de minimis (i.e., just individual words or phrases).

This is an issue that worried me right from the start of PDA purchasing models, as Rick Anderson knows, and it is a problem for the aggregate book licensing models also, as Tony points out here. Paperback sales at Penn State Press, where we both formerly worked, were about 45% of overall revenue, and any serious threat to this income can have very serious consequences for university press finances. As Tony well knows, however, it is very difficult to predict which monographs will succeed as adoptions in the classroom. Who, for example, would have predicted that a revised dissertation about multinational corporations in a single Latin American country would sell over 20,000 copies as a paperback? (This was Peter Evans’s Dependent Development, Princeton, 1979.) So, any presses worried about this downside are likely to be pretty conservative in keeping books out of Muse and like aggregations–which of course will make them less valuable as aggregations. There is no easy answer here–unless a press decides to adopt a full OA model.

Information about use of chapters, however, can be valuable. E.g., if certain chapters are used a lot, they can be given DOIs and put into programs like the CCC’s successful Get It Now service, which so far has been limited to journal articles.

The prospect of a digital text that phones home is a disturbing one for many people. Cite all of the benign examples you want but an equally long list of counter-examples could be and will be generated.

This discomfort also applies to tracking what was purchased and making inferences from that. There are intermediaries such as libraries who are loath to become the information gathering agents of publishers and governments. Perhaps we’ll see other services that act as the “plain brown wrapper” for digital works.

Of especial concern are eTextbooks in the humanities and social sciences and the analytics derived from them. Unlike most STEM titles, there are socio-political agendas that could be and probably will be pursued.

This is an amazingly interesting can of worms.

“Do I feel violated by that or do I welcome the opportunity to shop from home instead of making my way to the nearest Rochester men’s store? A book publisher or online retailer notes my propensity for reading science fiction and sends me offers for more: Where is the Satan in this?”

I think there’s an interesting discussion to be had around where the line is drawn. A catalogue tailored to your tastes or attributes may seem pretty innocuous, but there are documented cases of this kind of marketing effort crossing a line into something slightly more intrusive…:

I think there’s bound to be a place for end user data in the future, but I think it’s important to consider the boundaries of this kind of data collection and use before we end up too far down the rabbit hole!

For many years, I’ve heard debate over “who is the customer”? Is it the library? The author? The researcher? Frankly, it always surprised me that a $10b industry didn’t know who its customer was. Related to Joe’s post, recently I’ve begun hearing variations of “researcher operating system” or “ROS” thrown around with greater frequency. This is the strategy to gather big data about the individual researcher to best support them with personalized tools and services. Setting aside the ‘how’ this will be accomplished, perhaps this signals that at least some subset of the industry is planting a flag in the ground and saying “the researcher is the customer”? If so, that is an important statement, whether rightly or wrongly, because it allows organizations to clarify and prioritize resources, goals and metrics.