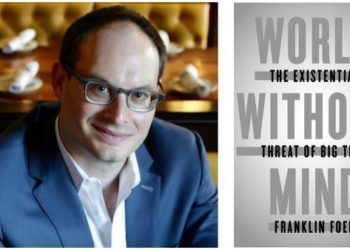

Why are Alexa, Cortana, and Siri (and likely your car’s GPS) all female? Because a cultural stereotype has been baked into new technology by engineers who tend to be male and privileged, and who are probably more accustomed than most to having women in service roles (mother, teacher, housekeeper). This is just one of the interesting observations in Sara Wachter-Boettcher’s recent book, Technically Wrong: Sexist Apps, Biased Algorithms, and Other Threats of Toxic Tech. Reading this well-written little volume might make you more aware of how technology is picking up our sloppy thinking, lazy stereotypes, and unchecked biases and baking them into the future, all because there isn’t enough diversity in the technology space to stop it from happening.

There has been a spate of excellent books outlining the risks of unquestioned technology and bro-centric engineering cultures, as well as books analyzing how algorithms inherit, and therefore recreate, the biases of the past they seek to understand. Wachter-Boettcher’s book is a welcome addition, as she brings many practical insights.

One passage that gripped me early on describes how personas, as used in marketing and product development exercises, can introduce and perpetuate stereotypes. Personas, if you haven’t used them, are meant to help product teams understand and empathize with various customer types (executives, students, busy parents, late-shift workers, what have you), which vary by product and market. The typical persona combines the goals the customer may have for the product with demographics, including a representative picture of the customer to increase empathy. This is where things go awry, as introducing demographics and pictures means introducing stereotypes. The visual aspects are particularly prone to this. Wachter-Boettcher’s sensible practice has become to focus on the goals and to eliminate the demographics and photos, which can mislead.

There are arresting examples of alienating and diminishing problems that users encounter, such as forms that perpetuate racism and foment harassment, data entry fields that don’t accommodate real names (e.g., names with diacritics, hyphenated names, long names), code that keeps women out of certain rooms for no good reason, and even an insistence on real identity when people have assumed identities for work (e.g., actors and other performers).
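To make the name-field problem concrete, here is a minimal Python sketch of a more permissive check. It is an illustration only and is not taken from the book: the function name `is_acceptable_name`, the 200-character cap, and the set of allowed punctuation are all assumptions chosen for the example, and a real form might reasonably accept even more.

```python
import unicodedata


def is_acceptable_name(name: str, max_length: int = 200) -> bool:
    """Hypothetical permissive name check (an assumption, not from the book).

    Accepts letters from any script, combining marks (diacritics),
    spaces, hyphens, apostrophes, and periods.
    """
    # Normalize so that composed and decomposed accents are treated alike.
    name = unicodedata.normalize("NFC", name).strip()
    if not name or len(name) > max_length:
        return False

    allowed_punctuation = {" ", "-", "'", "’", "."}
    for ch in name:
        category = unicodedata.category(ch)
        # "L*" categories are letters, "M*" categories are combining marks.
        if category.startswith("L") or category.startswith("M"):
            continue
        if ch in allowed_punctuation:
            continue
        return False
    return True
```

The design choice here is to reject only what is clearly not part of a name, rather than to whitelist unaccented Latin letters and a short length, which is how hyphenated, accented, and long names tend to get turned away.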

Of course, social media comes under scrutiny, in a detailed way and with stories I either hadn’t seen or hadn’t fully appreciated before. Wachter-Boettcher’s chapter “Built to Break” is a tour de force on its own, tackling Twitter, Facebook, and Reddit, and the design blindness and arrogance flowing from a lack of diversity among the engineers and founders, and how the resulting problems have been exploited and weaponized. The book is quite recent, having been published at the very end of 2017, so the stories feel fresh.

Regarding Reddit, journalist Sarah Jeong is quoted describing it as “a flaming garbage pit,” while calling out the platform for one particularly disingenuous stance:

Reddit’s supposed commitment to free speech is actually a punting of responsibility. It is expensive for Reddit to make and maintain the rules that would keep subreddits orderly, on-topic, and not full of garbage (or at least, not hopelessly full of garbage).

Wachter-Boettcher offers an alternative to the famous exhortation from Mark Zuckerberg (“move fast and break things”), urging technologists to “slow down and fix stuff.” This means not prioritizing programming skill over everything else. Rather, it means elevating people with editorial and ethical judgment, media literacy, and historical context into roles where they can oversee what the engineers produce and the effects these products and platforms are having.

Returning to the initial point about technologists’ proclivity for female voices in service applications: Waze thought harder about it and introduced two non-sexist solutions, allowing users to record their own voices and using celebrity voices now and then (Shaquille O’Neal’s version was particularly delightful). You can slow down and fix stuff, or at least avoid blatant social faux pas.

There is also a useful history of diversity in tech buried in the narrative. As Wachter-Boettcher writes:

Originally, programming was often categorized as “women’s work,” lumped in with administrative skills like typing and dictation (in fact, during World War II, the word “computers” was often applied not to machines, but to the women who used them to compute data). As more colleges started offering computer science degrees, in the 1960s, women flocked to the programs: 11 percent of computer science majors in 1967 were women. By 1984, that number had grown to 37 percent. Starting in 1985, that percentage fell every single year — until, in 2007, it leveled out at the 18 percent figure we saw through 2014. That shift coincides perfectly with the rise of the personal computer.

If that last sentence felt like the start of an epiphany, then you should read this book, because it’s full of such moments.

From social media inadvertently reminding people of traumas and tragedies to the hoofbeats of data mining companies now working as quasi-government sources, Technically Wrong is worth reading. At a sprightly 200 pages, well-referenced and well-indexed, Wachter-Boettcher’s book is an economical read that’s useful to have on your shelf for future reference. Buy a copy for your favorite coder or technologist or C-suite decision-maker, so they can start fixing stuff.

Discussion

12 Thoughts on "Book Review: “Technically Wrong,” by Sara Wachter-Boettcher"

“…elevating people with editorial and ethical judgment, media literacy, and historical context” …so maybe my Humanities degree will come in handy! 😉 Just requested from the library!

Just put it in my “want to read” list on Goodreads!

Same here!

My husband bought an Echo during some lightning deal on Amazon. The minute I heard my 8-year-old son shout an order to a female voice designed to please, and that voice returned a polite response despite his lack of “please” or “thank you”, I knew we had a problem.

The book is on my list. Thanks, Kent.

The first opportunity I had to change Siri’s voice, I changed it to an Australian male’s: firstly, because I didn’t want to feel like Joaquin Phoenix in “Her”; secondly, because who doesn’t want to get driving directions from Crocodile Dundee?

I know what my son in engineering school is getting for his birthday.

Another Sara highlighting the implicit and explicit biases built into publishing, tech apps, all kinds of digital systems. Looking forward to picking up this book. Great review.

Demonstrating causality requires more than “observation” and Just-so Stories. Yesterday, NPR reran a story about how “A Scientist’s Gender Can Skew Research”. While that was apparently caused by hormones, who says that most humans, women and men, don’t find voices with certain timbres less stressful than others? What is Wachter-Boettcher’s *proof* that the gendering of intelligent personal assistants’ voices is based on oppressive views of women rather than, say, market research?

Observations can be evidence. If you observe that every time some mostly male engineers design a system, that system fails to accommodate lifestyles or gender balances the engineers typically don’t encounter — and when you see the engineering culture filled with misogyny and racism (GamerGate, Google letter, etc.) — it’s not hard to connect the dots.

I’d recommend you read the book to see if you think you really need a randomized controlled trial to detect racism, sexism, and plain old short-sightedness in your technology.

Clearly, the best option is to not bake in biases. Waze’s solution (allow the user to record their own voice, have a rotating set of voices, some celebrity) avoids the problem and turns the challenge into a feature, a differentiator.

The internet risk imbalanced is. Unintended the consequences many. Decrying people a lot, solutions posit few.

Thank god for the female voices. At least I can hear them. The baritones of the male commentators on NPR are inaudible if there is any noise in the room, as there almost always is. The politicization of all analysis is deadly and anti-empirical. As for the idea that young boys are being trained to give orders to women because they are speaking to a female-voiced robot, I can only say that my (female) Alexa almost never does what I tell her to.

You imply the author has politicized analysis and that the work is anti-empirical. Have you read it? If not, your comment could be interpreted as suffering from the same maladies.