Even a casual reader of the scientific literature understands that there is an abundance of papers that link some minor detail of article publishing (say, the presence of a punctuation mark, humor, or a poetic or popular-song reference in one’s title) to increased citations. Do X and you’ll improve your citation impact, the researchers advise.

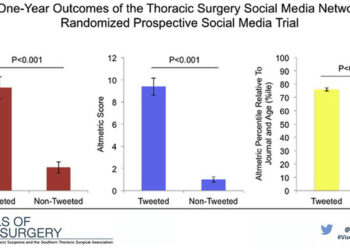

Over the past few years, the latest trend in “X leads to more citations” research has focused on minor interventions in social media, like a tweet.

There is considerable rationale for why researchers would show so much enthusiasm for this type of research. Compared to a clinical trial on human subjects, these studies are far easier to conduct.

First, there is no need to seek and secure significant research funds, enroll willing human subjects, or train a cadre of clinicians to run a trial. Further, there are no Institutional Review Boards to placate, protocols to register, or fundamental worries that your intervention has the remote possibility of doing real harm to real patients. As a social media-citation researcher, all you need is a computer, a free account, and a little time. This is probably why there are so many who publish papers claiming that some social media intervention (a tweet, a Facebook like) leads to more paper citations. And given that citation performance is a goal that every author, editor, and publisher wishes to improve, it’s not surprising that these papers get a lot of attention, especially on social media.

The latest of these claims that X leads to more citations, “Twitter promotion is associated with higher citation rates of cardiovascular articles: the ESC Journals Randomized Study,” was published on 07 April 2022 in the European Heart Journal (EHJ), a highly respected cardiology journal. The paper, by Ricardo Ladeiras-Lopes and others, claims that promoting a newly published paper in a tweet increases its citation performance by 12% over two years. The EHJ also published this research in 2020 as a preliminary study, boasting a phenomenal citation improvement of 43%.

Missing Pieces

Media effects on article performance are known to be small, if any, which is why a stunning report of 43% followed by a less stunning 12% should raise some eyebrows. The authors were silent about the abrupt change in results, as they were with other details missing from their paper. They were silent when I asked questions about their methods and analysis, and won’t share their dataset, even for validation purposes. Sadly, this is not a unique experience.

According to the European Heart Journal’s author guidelines, all research papers are required to include a Data Availability Statement, that is, a disclosure of how readers can obtain the authors’ data when questions arise. In addition, EHJ papers reporting the results of a randomized controlled trial are required to follow CONSORT guidelines, which include an explicit statement on how sample sizes were calculated. Both the Data Availability and Sample Size Calculation statements were omitted from this paper. If this paper were about a pharmaceutical intervention for atrial fibrillation, I suspect that these omissions would have raised red flags before publication.

Just another underpowered, over-reported study?

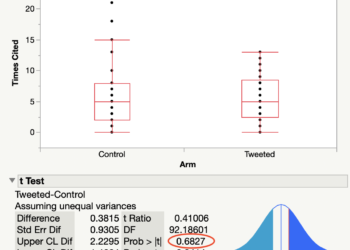

Based on my own calculations, I suspect that the Twitter-citation study was acutely underpowered to detect the reported (43% and 12%) citation differences. Based on the journals, types of papers, and primary endpoint described in the paper, I calculated that the researchers would require a sample size of at least 6693 (3347 in each arm) to detect a 1-citation difference after 2 years (SD=14.6, power=80%, alpha=0.05, two-sided test), about ten times the sample size reported in their paper (N=694). With 347 papers in each study arm, the researchers had a statistical power of just 15%, meaning they had just a 15% chance of detecting a 1-citation difference if one truly existed. For medical research, power is often set at 80% or 90% when calculating sample sizes. In addition, low statistical power often exaggerates effect sizes: an underpowered study reaches statistical significance only when its estimate happens to land far from zero, so the significant results it reports tend to overstate the true effect. In other words, small sample sizes (even if the sampling was done properly) tend to generate unreliable results that over-report true effects. This could be the reason why one of their studies reports a 43% citation benefit, while the other reports just 12%.
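For readers who want to check this kind of arithmetic themselves, here is a minimal sketch in Python. It assumes the standard normal-approximation formulas for a two-sided comparison of two means with equal arm sizes and a common SD; the post does not say which software or exact method was used, so treat this as one plausible reconstruction rather than the original calculation. The function names `n_per_arm` and `achieved_power` are made up for illustration; the inputs (a 1-citation difference, SD=14.6, alpha=0.05, 80% power, 347 papers per arm) are the ones quoted above.

```python
from math import ceil, sqrt
from statistics import NormalDist

STD_NORMAL = NormalDist()  # standard normal distribution (mean 0, SD 1)


def n_per_arm(delta, sd, alpha=0.05, power=0.80):
    """Papers needed in EACH arm to detect a mean difference `delta`.

    Assumption: two-sided test, equal arm sizes, common SD, and the
    normal approximation n = 2 * ((z_alpha + z_power) * sd / delta)^2.
    """
    z_alpha = STD_NORMAL.inv_cdf(1 - alpha / 2)  # critical value, about 1.96
    z_power = STD_NORMAL.inv_cdf(power)          # about 0.84 for 80% power
    return ceil(2 * ((z_alpha + z_power) * sd / delta) ** 2)


def achieved_power(n, delta, sd, alpha=0.05):
    """Approximate power of the same test with `n` papers in each arm."""
    z_alpha = STD_NORMAL.inv_cdf(1 - alpha / 2)
    # True difference expressed in units of the standard error of the
    # difference between the two arm means.
    shift = delta / (sd * sqrt(2 / n))
    # Probability that the test statistic falls in either rejection region.
    return STD_NORMAL.cdf(shift - z_alpha) + STD_NORMAL.cdf(-shift - z_alpha)


if __name__ == "__main__":
    print(n_per_arm(delta=1, sd=14.6))
    print(round(achieved_power(n=347, delta=1, sd=14.6), 2))
```

Under these assumptions the first call returns 3347 papers per arm and the second returns a power of roughly 0.15, in line with the figures above. A t-distribution-based calculation would give very slightly different numbers, but not enough to change the conclusion.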

I contacted the corresponding author, Ladeiras-Lopes, by email with questions about sample size calculation and secondary analyses (sent 5 May 2022) but received no response. One week later, I contacted him again to request a copy of his dataset (sent 10 May 2022), but again received no response. (I do have prior correspondence from Ladeiras-Lopes from earlier this year.)

Abdication of editorial responsibility?

After another week of silence, I contacted the European Heart Journal’s Editor-in-Chief (EiC) on 16 May 2022, asking for the editorial office to become involved. I asked for a copy of the authors’ dataset and for the journal to publish an Editorial Expression of Concern for this paper. The response from the editorial office was an invitation to submit a letter to their Discussion Forum, outlining my concerns. If accepted, EHJ would publish my letter along with the authors’ response in a future issue of the journal. The process could take a long time and there was no guarantee that I would end up with a copy of the dataset. I asked the editor to clarify whether this response meant that the editorial office was not getting involved and that this was simply a matter to be discussed between the reader and the authors. I finally received a response on 7 June 2022 reiterating that a formal Discussion Forum contribution is the first step in the process, and if the authors fail to respond, the editorial office “could escalate [the issue] to the ESC Family Ethics Committee requesting further investigation.”

The European Heart Journal has clear rules for the reporting of scientific results and even clearer rules for the reporting of randomized controlled trials. The validity of the research results described in the paper is irrelevant. Required parts of the paper are missing. Even if my analysis had shown that the conclusions were justifiable, the authors clearly violated two EHJ policies.

Requiring a concerned reader to submit a formal article for publication in the journal seems an inappropriate response to a simple question raised about journal policies. Requiring the reader to jump through time-consuming hoops that involve escalating such a violation to a society-level committee is a clear abdication of editorial responsibility. Either the EiC needs to explain why this paper was excluded from EHJ rules or take action to uphold the editorial standards of the journal by issuing a retraction or, at least, a correction. If the experience I went through was normal, I imagine that most readers with concerns about EHJ papers would simply give up trying. If science is a self-correcting process, this journal is not making the correction process easy.

When I step back and put this Twitter study in perspective, I have to remind myself that it represents little real-world risk: No patients will be harmed or die because of these results; a few researchers even got two publications in a top-tier medical journal. The worst outcome may be a lot of collective time wasted by researchers convinced that X (in this case, tweeting) is going to make a difference in their papers’ citation performance. Sadly, this blog post will add to the article’s Altmetric score and put another colored swirl in its performance donut.

Update (15 June 2022): In the next post, I receive the authors’ data and reveal evidence of p-hacking.

Discussion

7 Thoughts on "Fill in the Blank Leads to More Citations"

Thank you for another great article Phil!

Citations (and derivative metrics) have become totally and utterly fetishized.

If “X” really equals anything other than high-integrity research the metric is counterproductive and worse than useless.

What amazes me is that research funders and universities are mostly happy to go along with a currency that has become so debased. Journals and researchers are just responding to the incentives put in place by funders and employers.

Ironically, one of the most significant “blanks” to get to more citations appears to be the open sharing of research artifacts (see for example this recent article: https://www.ncbi.nlm.nih.gov/pmc/articles/PMC9044204/). Even if I and others mishandled the statistics and it turns out that sharing your data does not increase your citations, the net benefit to science is hard to dispute, as exemplified by this blog post.

A further issue with the classic letter and author response is that it’s usually cut off after the author’s response. Thus, the author is typically given the last word. Maybe worth the effort to adapt the blogs into an article calling out this paper, the Jessica Luc paper, and perhaps others who make big claims based on data they won’t share.

Indeed, I’m skeptical of the science in the article, but I’m really disappointed in the editorial process at the European Heart Journal. As you say, they really are abdicating their responsibility here. We don’t need journals to publicize research anymore: blogs and social media and old-fashioned word-of-mouth can handle that. The value that journals add now is to regulate the quality of the research, and EHJ is failing to do this or even support third parties like yourself who are trying to do this for them.

I am surprised that you are having such difficulty in getting the dataset from the authors. The last author (Thomas Luscher) is a previous Editor-in-Chief of the EHJ and currently Secretary/Treasurer of ESC, another (Michael Alexander) is Head of Publications at ESC. Have you written to them?

My correspondence with the journal has been with Amelia Meier-Batschelet (Lead Managing Editor) and Susanne Dedecke (Managing Editor). In my experience, the managing editors are the ones who know all the rules and get things done.

I have to assume that this paper was handled in the same way that any other research paper would be handled in the EHJ and that power and politics are not affecting the way the editorial office has been communicating with me. Nevertheless, I have had recent problems with a similar Twitter study in which the authors were also members of the editorial board:

As of 11 June 2022, the authors have made their data publicly available at https://github.com/rll12345/ESC_journals_randomised_study/blob/main/working_dataset_sept2021.dta